7 Helm alternatives to simplify Kubernetes deployments

Helm has long been the go-to tool for managing Kubernetes applications, often described as the “package manager” for Kubernetes. It allows you to bundle up Kubernetes YAML manifests into reusable charts, templatize them, and deploy them with a single command.

Helm uses charts (collections of YAML templates) to define even the most complex apps. By filling in a values file and running helm install, teams can deploy applications ranging from a simple web service to an entire WordPress stack with a database. It’s no surprise Helm became a cornerstone of the Kubernetes ecosystem: it simplifies manifest creation and handles tasks like upgrades (even database migrations, via chart hooks) that would be tedious to script by hand.

But despite its popularity, many developers have a love-hate relationship with Helm. Its powerful templating comes at the cost of complexity and a steep learning curve.

This article explores 7 Helm alternatives that can simplify Kubernetes deployments.

Before you ditch Helm, it’s worth understanding what it actually does. Helm packages Kubernetes manifests into charts—directories of YAML templates (using Go templating) plus a values.yaml for customization.

Think of a chart as a recipe with placeholders for things like image tags and replica counts. You fill them in via the values file or CLI flags. Helm renders these into standard YAML, applies them to the cluster, and tracks the result as a release. That release metadata enables upgrades, rollbacks, and deletes.
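To make the recipe analogy concrete, here is a minimal sketch of the render step in Python. It uses the standard library's string.Template as a stand-in for Go templates, so the $placeholder syntax, the DEFAULT_VALUES dictionary, and the render function are all illustrative assumptions, not Helm's engine or API.

```python
from string import Template

# Stand-in for a chart template. Real charts use Go templates
# (for example {{ .Values.replicas }}), not $placeholders.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image:$tag
""")

# Plays the role of values.yaml: defaults that a user can override.
DEFAULT_VALUES = {"name": "web", "replicas": 1, "image": "nginx", "tag": "1.27"}


def render(overrides=None):
    """Merge overrides on top of the defaults and fill in the template."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return DEPLOYMENT_TEMPLATE.substitute(values)


# Roughly analogous to passing overrides on the command line.
manifest = render({"replicas": 3, "tag": "1.28"})
print(manifest)
```

The output is ordinary YAML. Everything Helm adds on top (applying it, recording a release, upgrading later) happens after this rendering step.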

Helm took off because it simplified deploying complex apps. Instead of juggling 20 YAML files, you run helm install. Upgrades are easy too—just run helm upgrade and it applies the diffs. Many charts also include logic for migrations, CRDs, and setup scripts, making ops less painful.

If Helm is so great, why do people seek Helm alternatives?

Well, Helm is not all sunshine and rainbows, especially when you’re the one writing or maintaining the charts. Here are some of the pain points that often come up:

Helm’s templating language is based on Go templates embedded in YAML. This can get confusing fast. You end up with double-braces and sprinkles of logic right inside your YAML files.

Helm templates can be hard to read and debug. Newcomers face not only Kubernetes’ learning curve but also Helm’s own syntax and quirks. If you peek into a complex chart’s templates, it can feel like deciphering hieroglyphs (lots of {{ if ... }} and template includes). Small mistakes can lead to big deployment issues, and it’s not always obvious which value controls what without extensive chart docs.

Helm tracks each deployment as a release by storing state in Kubernetes Secrets. This enables rollbacks and upgrades, but can cause issues if something fails mid-upgrade: you might need to roll back manually or delete the release Secret. Helm also blocks reuse of a release name within a namespace while that release exists, which complicates CI/CD for ephemeral environments. Large releases can even hit the 1 MiB size limit on Kubernetes Secrets. Managing releases across multiple environments or clusters adds more overhead, and many teams use tools like Helmfile just to keep it all in sync.
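The release bookkeeping can be pictured with a small Python model. This is a toy sketch under stated assumptions: the ReleaseHistory class and its methods are hypothetical, manifests are plain strings, and history pruning and failure states are not modeled. The Secret naming pattern (sh.helm.release.v1.NAME.vREVISION) follows what Helm 3 uses for its default storage.

```python
class ReleaseHistory:
    """Toy model of how a release accumulates numbered revisions."""

    def __init__(self, name):
        self.name = name
        self.revisions = []  # list of (revision_number, manifest)

    def _record(self, manifest):
        revision = len(self.revisions) + 1
        self.revisions.append((revision, manifest))
        return revision

    def install(self, manifest):
        # A name that is already in use cannot be installed again.
        if self.revisions:
            raise ValueError(f"release name {self.name!r} is already in use")
        return self._record(manifest)

    def upgrade(self, manifest):
        return self._record(manifest)

    def rollback(self, to_revision):
        # A rollback does not rewind history: it records a new revision
        # whose content is copied from the older one.
        manifest = dict(self.revisions)[to_revision]
        return self._record(manifest)

    def secret_names(self):
        # One Secret per revision, named after release and revision number.
        return [f"sh.helm.release.v1.{self.name}.v{rev}" for rev, _ in self.revisions]


history = ReleaseHistory("web")
history.install("replicas: 1")
history.upgrade("replicas: 3")
history.rollback(1)
print(history.secret_names())
```

Each stored revision holds the full rendered release, which is why a very large chart can run into the Secret size limit.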

With plain Kubernetes manifests or Kustomize, you can often see exactly what YAML you’re applying. With Helm, what you apply is generated from templates + values, which means you often don’t see the final YAML until Helm renders and deploys it.

A tiny tweak in values might enable some hidden part of the chart (e.g., turn on an ingress you didn’t expect), and suddenly you’ve deployed something surprising. The common advice is to run helm template (or helm install --dry-run) and diff the rendered output before deploying, but that’s an extra manual step to avoid unpredictable results.
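The "render, then diff" habit can be sketched in a few lines of Python. The render function below is a hypothetical toy renderer (not Helm), and the ingress_enabled and host values are made-up names. The point is only that comparing rendered output exposes resources a values change silently switches on.

```python
import difflib


def render(values):
    """Toy renderer for a hypothetical chart; returns manifest lines."""
    lines = [
        "kind: Service",
        f"name: {values['name']}",
    ]
    # A single boolean in the values enables a whole extra resource.
    if values.get("ingress_enabled"):
        lines += ["---", "kind: Ingress", f"host: {values['host']}"]
    return lines


current = render({"name": "web"})
proposed = render({"name": "web", "ingress_enabled": True, "host": "web.example.com"})

# Lines prefixed with "+" exist only in the proposed render.
diff = list(difflib.unified_diff(current, proposed, "current", "proposed", lineterm=""))
print("\n".join(diff))
```

Running a comparison like this in CI, and failing the job when unexpected resources appear, turns the manual review step into an automated check.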

Helm charts depend heavily on their maintainers. Some are solid, others are buggy or misconfigured—missing RBAC rules, lax security settings, etc. You often end up forking or patching them yourself. Helm 3 removed Tiller (which had broad cluster access), but supply chain concerns remain: pulling a random chart and deploying it to your cluster requires trust. In multi-tenant setups, blindly installing third-party charts is risky—Helm will render and apply whatever YAML is inside.

For very simple deployments (say you have a couple of Deployments and Services), introducing Helm might add more complexity than it removes. If you don’t need reusability or dynamic templating, plain manifests or a lighter-weight tool might suffice. Using Helm in CI/CD for a simple app can feel like using a chainsaw to cut butter – lots of sharp edges, little benefit.

Given these pain points, it’s no surprise that Kubernetes users have explored many Helm alternatives.

Not everyone hates Helm, but the frustration in the DevOps community is real, and many have found simpler or more specialized solutions to the specific problems Helm has.

The top 7 Helm alternatives, ranked

Northflank is a modern platform that can be seen as an alternative approach to using Helm – instead of templating YAML at all, you deploy your apps via Northflank’s interface or API and let it handle the Kubernetes under the hood. Northflank essentially provides a PaaS-like layer on Kubernetes (it’s been described as “Heroku for your own clusters”), so developers get the benefits of K8s without having to write Helm charts or even raw manifests. The idea is to take away the “YAML hell” (and Helm) and replace it with a smoother deployment experience.

How does Northflank simplify things? A few key points:

With Northflank, you don’t write Kubernetes YAML directly, nor do you need a templating language. You define your services, jobs, databases, etc., through Northflank’s UI or configuration, and it handles generating and applying the Kubernetes resources. One might say Northflank gives you the power of Kubernetes without needing to write any YAML or Helm charts. Instead of wrestling with Chart.yaml and values, you push your code or container image and let the platform take care of deployment definitions.

Northflank allows you to manage environment variables, secrets, and other config per environment through its dashboard or API. Need different settings for dev vs prod? That’s handled with straightforward configuration profiles, not separate values files and template logic. This addresses the multi-environment issue by providing a cleaner interface to customize settings for each deployment stage without duplicating config in many places.

Northflank can automatically build your code (integrating with your Git repo) and deploy on merge – basically CI/CD out-of-the-box. It sets up “golden path” pipelines so that every commit can go through tests, builds, and end up in a preview or production environment. This removes the need to script helm upgrade in your CI; instead, Northflank continuously deploys your app based on Git events. For teams, this means no manual helm commands or custom Argo workflows – it’s baked in. (Northflank even supports a GitOps mode if you prefer, syncing from your Git like Argo CD would.)

Northflank supports BYOC (Bring Your Own Cloud), allowing you to attach multiple Kubernetes clusters (on AWS, GCP, Azure, on-prem, etc.) to the platform. It presents a unified interface to deploy across them. Want to deploy Service A to cluster X and Y? It’s a matter of selecting the target in Northflank, not manually configuring two Helm contexts. This is great for enterprises running in multiple regions or cloud providers – you get one pane of glass. Under the hood, Northflank’s control plane orchestrates the workloads on your clusters, handling all the Helm/manifest grunt work.

Northflank doesn’t shun Helm entirely – it actually can consume Helm charts for certain use cases. For example, Northflank’s “bring your own addon” feature allows you to deploy a Helm chart (say for a third-party service like Redis) inside Northflank if it’s not natively supported. Northflank will run that Helm chart for you. This means you can still leverage the Helm ecosystem for things like databases, but you don’t have to manage Helm yourself; Northflank acts as the operator. In essence, Northflank cherry-picks the benefits of Helm (reuse of community charts) without exposing you to Helm’s complexity directly.

The Northflank approach is aimed at improving developer experience. All a developer needs is a Docker image, or a source repo with a Dockerfile or Buildpack. You point Northflank to it, and it builds and deploys it, picking up changes from Git and automatically kicking off new builds. This is a far cry from requiring every developer to understand Kubernetes internals or Helm. It’s easier to onboard new projects and team members since there’s less bespoke YAML to learn.

In summary, Northflank replaces Helm by abstracting away Kubernetes deployment configuration entirely. It trades some flexibility (you’re using the platform’s way of doing things) in exchange for massive simplicity.

No more Helm client, no values.yaml, no debugging failed template renderings.

One could joke that Northflank’s alternative to Helm is: don’t even give developers a chance to write Kubernetes YAML. 😄

If your goal is to deploy apps with minimal ops headache, Northflank’s all-in-one approach might be the #1 Helm alternative to consider.

| Tool | Learning Curve | Flexibility (templating power) | Multi-cluster/Env support | CI/CD integration |
|---|---|---|---|---|
| Helm | 🔴 High – must learn Helm syntax and chart structure. | 🔴 High – Go templating (loops, conditionals), many community charts with built-in configurability. | 🟠 Moderate – can deploy to any cluster, but managing many releases/environments requires extra tooling (Helmfile, etc.). | 🟠 Medium – works in CI but requires scripting; GitOps tools support Helm charts natively. |
| 🥇 Northflank | 🟢 Low – use UI or simple config; no Kubernetes knowledge needed. | 🟢 High – covers most app configs (services, jobs, addons) | 🟢 High – built-in multi-cluster and multi-env management via a unified platform. | 🟢 High – built-in pipelines and Git integrations for automated build/deploy (CI/CD as a first-class feature). |
| 🥈 Kustomize | 🟢 Low – just YAML with a few extra files (kustomization.yaml). | 🟠 Medium – can patch/overlay any field, but no arbitrary logic or packaging. | 🟠 Medium – great for multi-env overlays; for multi-cluster, usually used with GitOps or scripts (not automatic across clusters by itself). | 🟠 Medium – easily used in GitOps (just commit YAML); in CI, just build kustomize and apply. |
| 🥉 Skaffold | 🟢 Low – simple YAML config for pipeline; mostly convention-driven. | N/A – not a config tool (relies on manifests or Helm charts). | 🔴 Low – focused on single-cluster dev workflow (for multi-cluster, you’d run separate Skaffold or use other CD tools). | 🟢 High – purpose-built for CI/CD and dev loops; great integration with local dev and CI pipelines. |
| Argo CD | 🟠 Medium – need to learn GitOps concepts and Argo specifics (Application CRs, etc.). | N/A – no templating (uses whatever manifests you give it). | 🟢 High – can manage deployments to multiple clusters/environments easily via Git sources. | 🟢 High – it is a CD tool; integrates with Git repos for automated deployment (supports Helm, Kustomize, etc.). |
| Jsonnet | 🔴 High – new language to learn; requires JSON mindset. | 🟢 Very High – essentially a programming language for config (conditions, loops, modularization all possible). | 🟠 Medium – can generate configs for any env/cluster, but you must handle applying to clusters (often used with other tools). | 🟠 Medium – usually used with companion tool (like Tanka or CI scripts) to integrate into deployment process. |
| Kapitan | 🔴 High – complex tool with multiple features and options to learn (inventory, Jsonnet/Jinja, etc.). | 🟢 Very High – supports Jsonnet, Jinja2, and more; can template across multiple systems (K8s, Terraform, etc.). | 🟢 Very High – explicitly designed to manage many environments/clusters with a single inventory (solves multi-env thoroughly). | 🟠 Medium – you run Kapitan compile in CI and then apply; no built-in CD, but can be paired with GitOps or CI scripts. |
| CDK8s | 🟠 Medium – must know a general-purpose language (TypeScript/Python) and CDK8s API. | 🟢 High – full programming capabilities in familiar languages; extremely flexible patterns. | 🟠 Medium – can write code to target multiple envs, but output is static manifests (multi-cluster handled via separate configs or git branches). | 🟠 Medium – integrates via code build steps; use with GitOps or manual apply; no native controller (which some consider a pro). |

Kustomize is often the first word out of anyone’s mouth when discussing Helm alternatives. It’s built into kubectl (kubectl apply -k <directory>), emphasizing a “patch and overlay” approach rather than templating. With Kustomize, you write your Kubernetes YAML as usual (called a base) and then define overlays that modify that base for different contexts (like different envs or clusters). Overlays can add or override fields using strategic merge patches or JSON patches. For example, your base Deployment might set replicas: 1, and your production overlay patch changes replicas to 3 – when you build with Kustomize, the prod YAML has 3 replicas.
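The base-plus-overlay idea can be illustrated with a small Python sketch. The merge_patch function is a simplified recursive dictionary merge written for this example. It is not Kustomize's strategic merge patch, which also merges lists by key and supports deletions. The manifests are shown as Python dictionaries instead of YAML files.

```python
import copy


def merge_patch(base, patch):
    """Recursively merge patch into a copy of base.

    Simplification: nested dicts are merged, everything else (including
    lists) is replaced wholesale.
    """
    result = copy.deepcopy(base)
    for key, value in patch.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_patch(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# The base: a plain Deployment with no environment-specific settings.
base = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {"replicas": 1, "selector": {"matchLabels": {"app": "web"}}},
}

# The production overlay only states what differs from the base.
prod_overlay = {"spec": {"replicas": 3}}

prod = merge_patch(base, prod_overlay)
print(prod["spec"])
```

The base is left untouched, so other overlays (staging, dev) can patch the same starting point independently.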

Pros:

- Built into kubectl (kubectl apply -k)

- Easy to learn: no templating language, just YAML

- Great for multi-environment setups via overlays

- Keeps configs DRY and easy to reason about

Cons:

- No packaging/reuse mechanism like Helm charts

- No dynamic logic or value injection (limited to patches)

- No versioning or chart repos—everything lives in Git

- Often used with other tools to handle more complex needs

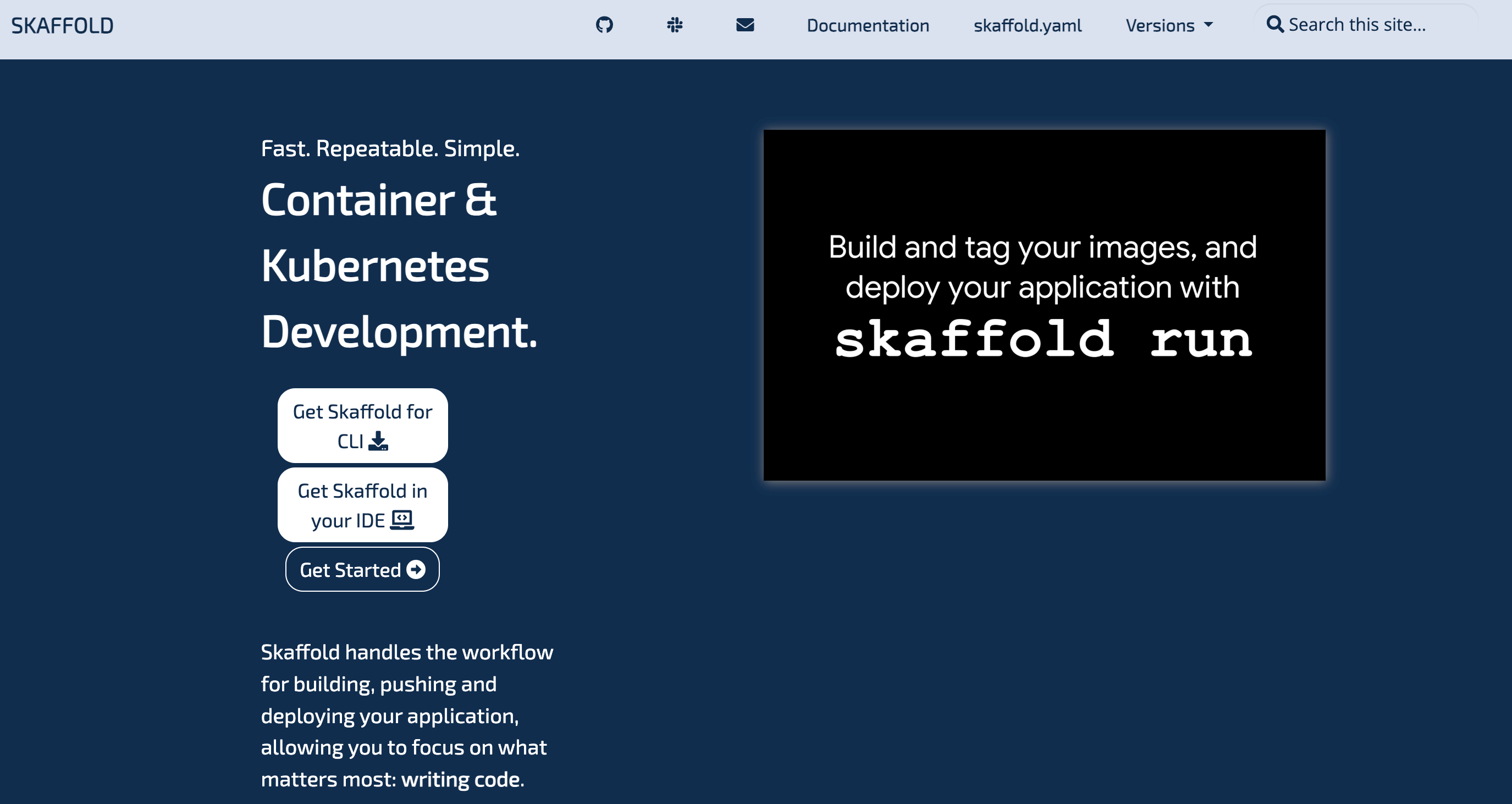

Google’s Skaffold is another tool that often enters the conversation, though it serves a different purpose. Skaffold is all about streamlining the development and CI/CD workflow for Kubernetes applications. It automates the build-push-deploy cycle so you don’t have to run a bunch of commands manually. Importantly, Skaffold can work with Helm or with raw manifests (or Kustomize). So it’s not an “either/or” replacement at the configuration level, but it can reduce your reliance on Helm’s CLI for deployment.

Pros:

- Automates image builds, tagging, pushing, and deployment

- Supports Helm, Kustomize, or plain YAML out of the box

- Tightens dev feedback loop with skaffold dev (auto-redeploy on code changes)

- Reduces CI scripting by handling the full deployment pipeline

- Great for local development and simple CI setups

Cons:

- Not a templating or config management tool

- Doesn’t replace Helm charts—just automates their usage

- Not ideal for multi-cluster or GitOps workflows

- Extra tooling developers need to install and learn

Next up is Argo CD, a popular GitOps continuous delivery tool. Argo CD’s motto could be “stop running helm upgrade and let Git drive your deployments.” It watches a Git repository containing your desired Kubernetes manifests and ensures your cluster state matches it, automatically applying any changes. Importantly, Argo CD is tool-agnostic about the manifests: you can store plain YAML, Kustomize overlays, or even Helm charts in Git, and Argo CD will apply them. So you can use Argo CD with Helm (many do!), but you can also use Argo CD as a Helm replacement by switching to Kustomize or plain manifests.
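The "cluster state follows Git" loop boils down to comparing two sets of resources and planning the difference. The Python sketch below is conceptual only: plan_sync is a hypothetical function, resources are reduced to name-to-spec dictionaries, and pruning is shown unconditionally for brevity. It is not how Argo CD is implemented.

```python
def plan_sync(desired, live):
    """Return the actions needed to make the live state match the desired state."""
    actions = []
    for name, spec in desired.items():
        if name not in live:
            actions.append(("create", name))
        elif live[name] != spec:
            actions.append(("update", name))
    # Anything running that Git no longer declares is a candidate for removal.
    for name in live:
        if name not in desired:
            actions.append(("prune", name))
    return actions


# Desired state, as declared in the Git repository.
desired = {
    "deployment/web": {"replicas": 3},
    "service/web": {"port": 80},
}

# Live state, as observed in the cluster.
live = {
    "deployment/web": {"replicas": 1},
    "configmap/old": {"key": "value"},
}

actions = plan_sync(desired, live)
print(actions)
```

A controller runs this comparison repeatedly, so a manual change in the cluster shows up as drift on the next pass instead of going unnoticed.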

Pros:

- Git is the source of truth; deployments happen via git push

- Great for managing multi-env and multi-cluster setups

- Automatic syncing, rollback support, and diffing built-in

- Supports Helm, Kustomize, and more without requiring CLI tools

- Works well with teams adopting GitOps workflows

Cons:

- Requires installing and operating Argo CD in your cluster

- Has its own learning curve (Application CRs, syncing behavior)

- Not a templating tool—still relies on Helm/Kustomize/etc. for config generation

Moving further from Helm, we get into territory of treating Kubernetes manifests not as Helm templates or YAML patches, but as code in a programming language. Jsonnet is a JSON templating language (created by Google) that has been embraced by some Kubernetes folks as a more robust way to generate manifests. Jsonnet isn’t Kubernetes-specific, but you can use libraries (like kube-libsonnet) to easily produce Kubernetes objects. Tools like Grafana Tanka build on Jsonnet to provide a CLI and structure for Kubernetes config.

Pros:

- Full programming power: conditions, loops, functions, inheritance

- Ideal for DRY, reusable config and complex abstractions

- Safer than hand-edited YAML (structured data, with many mistakes caught when the config is evaluated rather than at deploy time)

- Tanka provides a solid workflow for applying and diffing changes

- Great for teams with engineering-heavy infra practices

Cons:

- Steep learning curve; you need to learn a new language

- Smaller ecosystem and community support vs. Helm/Kustomize

- Adds a build step (Jsonnet → YAML), not kubectl-native

- Can be overkill for simple apps or small teams

Kapitan is a lesser-known but very interesting tool that can serve as a Helm alternative. It was developed at DeepMind for managing configuration at scale. Kapitan is often described as a “configuration orchestration tool” or an “infrastructure compiler.” It supports multiple templating engines under one roof: you can use Jsonnet, Jinja2, or even pure Python (Kadet) to generate YAML. It introduces the concept of an inventory – a hierarchical set of parameters (think of it like a tree of values for different environments, clusters, etc.). Kapitan compiles your templates using the inventory, and can output configs for Kubernetes, Terraform, or anything else.
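The inventory idea (shared classes of parameters, combined per target) can be sketched in Python. This is a simplified illustration with flat parameters and made-up class and target names. A real Kapitan inventory lives in YAML files and supports nested parameters and interpolation, none of which is modeled here.

```python
from collections import ChainMap

# Reusable parameter sets, similar in spirit to inventory classes.
CLASSES = {
    "common": {"image": "registry.example.com/web", "replicas": 1, "log_level": "info"},
    "production": {"replicas": 3, "log_level": "warn"},
}

# Each target lists the classes it includes plus its own parameters.
TARGETS = {
    "dev": {"classes": ["common"], "parameters": {"namespace": "dev"}},
    "prod-eu": {
        "classes": ["common", "production"],
        "parameters": {"namespace": "prod", "region": "eu-west-1"},
    },
}


def resolve(target_name):
    """Flatten a target's parameters; the most specific layer wins."""
    target = TARGETS[target_name]
    # ChainMap returns the first match, so order layers from most to least
    # specific: the target itself, then later classes before earlier ones.
    layers = [target["parameters"]] + [CLASSES[c] for c in reversed(target["classes"])]
    return dict(ChainMap(*layers))


print(resolve("dev"))
print(resolve("prod-eu"))
```

Templates are then compiled once per target against the resolved parameters, which keeps environment differences in one place.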

Pros:

- Supports multiple templating languages: Jsonnet, Jinja2, Python

- Inventory model makes multi-env/cluster config clean and DRY

- Built-in secrets management (encrypt/decrypt at compile time)

- Can generate configs for more than just Kubernetes

Cons:

- Steep learning curve; not beginner-friendly

- Heavy for small teams or simple projects

- Smaller community and ecosystem compared to Helm or Kustomize

- Requires learning Kapitan’s compilation model and inventory structure

Last but not least, CDK8s (Cloud Development Kit for Kubernetes) offers a fresh, developer-friendly take on Kubernetes configuration. Inspired by the AWS CDK, CDK8s lets you write your Kubernetes manifests using familiar programming languages (TypeScript, Python, Java, etc.) and then synthesize those into YAML for application.
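The manifests-as-code pattern looks roughly like the following plain-Python sketch. It deliberately does not use the CDK8s library: the WebService class and its synth method are hypothetical stand-ins for a construct and the synthesis step, and the output is JSON (which Kubernetes tooling also accepts) to stay within the standard library.

```python
import json


class WebService:
    """Hypothetical construct bundling a Deployment and a matching Service."""

    def __init__(self, name, image, replicas=1, port=80):
        self.name = name
        self.image = image
        self.replicas = replicas
        self.port = port

    def synth(self):
        """Produce plain manifest dictionaries from the constructor arguments."""
        labels = {"app": self.name}
        deployment = {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": self.name},
            "spec": {
                "replicas": self.replicas,
                "selector": {"matchLabels": labels},
                "template": {
                    "metadata": {"labels": labels},
                    "spec": {"containers": [{"name": self.name, "image": self.image}]},
                },
            },
        }
        service = {
            "apiVersion": "v1",
            "kind": "Service",
            "metadata": {"name": self.name},
            "spec": {"selector": labels, "ports": [{"port": self.port}]},
        }
        return [deployment, service]


manifests = WebService("web", "nginx:1.27", replicas=2).synth()
print(json.dumps(manifests, indent=2))
```

Because the construct is ordinary code, the label wiring between Deployment and Service can be covered by a unit test, which is hard to do with hand-written YAML.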

Pros:

- Write manifests in code (TypeScript, Python, etc.) instead of YAML

- Supports full programming features like loops, functions, and classes

- Great IDE support and testability

- Easy to reuse and abstract config patterns

- No controllers needed—just generates YAML you can apply or commit to Git

Cons:

- Steeper learning curve for teams unfamiliar with code-based IaC

- Not ideal for ops teams who prefer YAML or GUI workflows

- Smaller community and ecosystem compared to Helm

- Risk of inconsistent patterns if not well-structured across teams

Helm can be useful, but it also adds a lot of overhead. The templating is hard to read, managing releases is clunky, and it’s easy to lose track of what’s actually being deployed. That’s why so many teams start looking for alternatives.

Some tools are better for specific use cases:

- Kustomize works well if you want to keep things in plain YAML

- Skaffold is great for speeding up development and CI

- Argo CD makes Git-based deployment easier to manage

- Jsonnet or CDK8s give you more control if you prefer writing config in code

- Kapitan is built for large setups with lots of environments

But if your main goal is to make Kubernetes deployment less painful and you're not interested in mastering Helm's templating quirks, Northflank is the way to go.

It handles configuration, CI/CD, and multi-cluster deployment in one platform. You don’t need to wire together half a dozen tools just to ship a container. You define your services, jobs, and environments the way normal people do, through clean config or a UI that doesn’t make you feel like you’re defusing a bomb.

Yes, we’re biased. You’re on the Northflank blog. But there’s a reason we built it this way: because we were tired of fighting the same tooling battles over and over again. Helm made things easier, for a while. Then it made them harder. We wanted a system that didn’t break under the weight of its own abstractions.