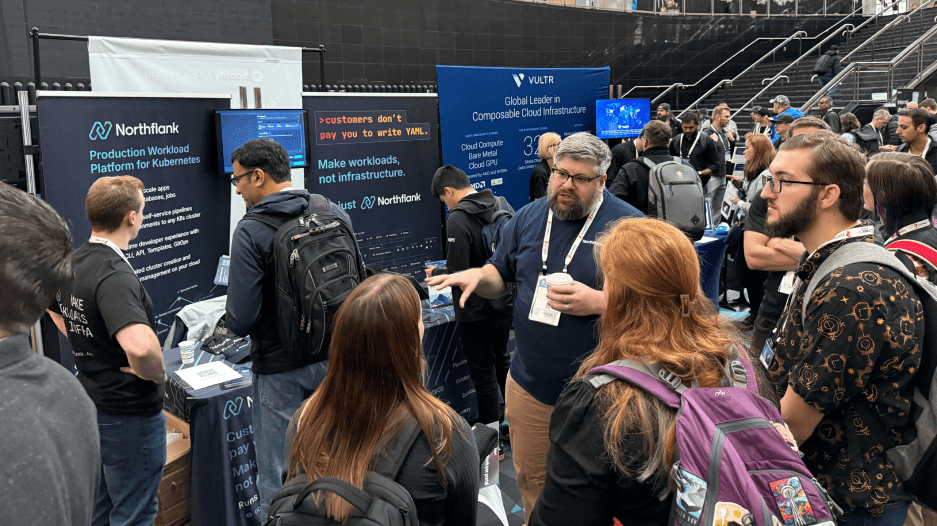

KubeCon NA 2024 SLC, Themes from the Hallway track

Informal conversations are NOT something you will see on the CNCF’s YouTube channel. If you’re curious about what we heard among attendees at KubeCon, this post is for you.

It was nice to see that platform engineering day was popular.

KubeCon is a great time for sharing war stories and learning from others. You can get some of that from talks, but a whole lot more by striking up conversations. That dynamic and unplanned sharing of perspectives is often affectionately called the Hallway track. Consider this Northflank's roundup from the Hallway track.

Have you ever sat down at a buffet for a meal and been overwhelmed by 200+ menu options? I hear that’s a pretty good approximation of what figuring out the bazillion fields in k8s manifests and Helm charts feels like.

YAML is still a pain for just about everyone, it seems. Files are extremely long, highly structured, and sensitive to silly issues like whitespace. Are you ready for Helm 4?

Finding new ways to wrangle YAML seems almost like a tradition for the k8s community, from the early days of ksonnet to approaches like Kustomize and now YAMLScript. Are templates or overlays the way to go? When I talk to attendees who have customised Helm charts, I hear stories about the mind-numbing task of combing through hundreds of fields. Every so often you stumble across a field that sounds familiar, but you can’t quite remember what it means. That means you get to go on a journey to that package’s docs to figure it out.
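For anyone who hasn't tried both styles, here is a rough sketch of the difference between templates and overlays. It is a toy under my own assumptions, not how Helm or Kustomize are implemented: `BASE`, `render_template`, and `apply_overlay` are hypothetical names, and the merge replaces lists wholesale, which is simpler than what real overlay tools do.

```python
import copy
from string import Template

# Hypothetical base manifest, trimmed to a few fields for illustration.
BASE = {
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 1,
        "template": {
            "spec": {"containers": [{"name": "web", "image": "web:1.0"}]}
        },
    },
}


def render_template(text: str, values: dict) -> str:
    """Template style: substitute values into placeholders in plain text."""
    return Template(text).substitute(values)


def apply_overlay(base: dict, overlay: dict) -> dict:
    """Overlay style: recursively merge a small patch over a complete base.

    The base is left untouched; nested dicts are merged key by key, and
    any other value (including lists) is replaced outright.
    """
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_overlay(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


if __name__ == "__main__":
    # Template: the author decides up front which fields are tunable.
    print(render_template("replicas: $replicas\nimage: web:$tag", {"replicas": 3, "tag": "1.1"}))
    # Overlay: any field of the base can be patched after the fact.
    print(apply_overlay(BASE, {"spec": {"replicas": 3}})["spec"])
```

The trade-off shows up even in a toy: a template only exposes the knobs its author thought of, while an overlay can touch anything but requires you to know the shape of the base.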

There are many solutions around YAML currently, but none completely remove the pain and complexity. In other words, there’s no silver bullet. The current solutions do a noble job of fighting cloud complexity. The fact that we are all still discussing pain points just indicates there’s still room to do more.

Talk to any infrastructure team, and you’ll find a new way to deploy code. Kubernetes deployments are usually bespoke, and almost always unique to the teams and organisations that create them.

The uniqueness makes sense when you think about Conway’s Law. Communication structures are reflected in the code you create. Each team communicates in a slightly different way, and app deployment methods inherit that variance.

This is good when it comes to adapting the cloud-native world to your internal business processes. It’s bad when you look at long-term maintainability. Nearly everyone I spoke to at the Northflank booth shared stories about obscure parts of their infrastructure that might only really be understood by the one engineer who built it.

Everyone seems to have a team for specialised parts of their distributed system. I’ve heard of teams dedicated to service mesh, observability, and databases. With that kind of logistical overhead, good luck getting anything done! At least, that's the way it feels when teams revert from collaborators back to gatekeepers. I appreciate that in large organisations this is currently a necessary evil. It’s also an area where people feel pain, and I believe we are likely to see continued refinement.

The result of this wide variety of deployment strategies is lower team velocity, and an enormous duplication of effort. That’s basically the opposite of what you hope for in an open source community.

Pausing this honest review, just to shill Northflank a little bit… The ability to standardise deployments is the feature that people are most excited about after trying Northflank.

The new Reference Architecture Initiative from the CNCF is one possible sign of relief. More companies sharing how they deploy and run workloads is one way the community can start to identify common patterns. Reference architectures are great, but they’re more likely to be just one part of the solution. The goal we’re all working towards is fewer bespoke handcrafted solutions, in favour of more standard deployment practices.

Because everyone’s deployments are unique, the reference architecture is likely to work less like a sure-fire recipe for success, and more like a place to look for inspiration. These architectures also took the best part of a decade to refine and implement, so you should expect copying parts of them to take time as well.

From my conversations, it sounds like a lack of good deployment standards and best practices, along with a pervasive fear of being too opinionated, are all likely contributing factors.

Distributed systems like Kubernetes need better abstractions in order to get better standards. I predict that just like programming languages push developers towards certain idioms and best practices, the CNCF community should also expect to see more idiomatic Kubernetes abstractions in the future.

I heard about more than one cool observability trend at the Northflank booth. One is continuous profiling. Another is improvement in the standards for cross-referencing and correlating data. Finally, the release of Prometheus 3.0 is big news in the observability space.

Profiling is a term many coders are probably at least partially familiar with. Profiling tools have been around since the dawn of programming. You run your program in profiling mode, and data about the flow and performance of every function pours out.

Profiling usually happens only on a developer’s local machine because, on top of the glut of profiling data, the impact on performance is high. Continuous profiling gives developers the same ability to track function-level performance, with as little as a 1% impact on system resources. It does so with stochastic (in other words, random) sampling of eBPF data as your programs run in production. With enough random samples, a good approximation of performance emerges. Continuous profiling is another observability solution to add to the usual mix of metrics, logs, and traces.
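To get a feel for why random sampling is enough, here is a small simulation. It does not touch eBPF or any real profiler; it assumes a made-up workload (`WORKLOAD`) where each function owns a fixed share of CPU time, and a hypothetical `sample_profile` helper that peeks at "what is running right now?" at random instants.

```python
import random
from collections import Counter

# Hypothetical workload: (function name, true share of CPU time).
WORKLOAD = [("parse_request", 0.2), ("query_db", 0.7), ("render", 0.1)]


def sample_profile(workload, num_samples, seed=0):
    """Estimate each function's share of CPU time from random samples.

    Each sample stands in for a profiler interrupting the program at a
    random instant and recording which function was on-CPU.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    names = [name for name, _ in workload]
    weights = [share for _, share in workload]
    hits = Counter(rng.choices(names, weights=weights, k=num_samples))
    return {name: hits[name] / num_samples for name in names}


if __name__ == "__main__":
    # More samples means an estimate closer to the true shares.
    for n in (10, 1_000, 100_000):
        print(n, sample_profile(WORKLOAD, n))
```

With only a handful of samples the estimate is noisy, and it settles towards the true shares as the sample count grows. That is the bargain continuous profilers make: a small, steady sampling cost in exchange for a statistically useful picture.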

Jinkies! Continuous Profiling was just eBPF wearing a mask all along.

Pyroscope from Grafana Labs, and the eponymous Polar Signals are two names I hear are worth checking out for continuous profiling.

When it comes to improvements in how to cross-reference and correlate data, I’ve heard this presentation from Apple on enabling exemplars is a great talk to catch. Exemplars, as described in the OpenTelemetry spec, attach a reference such as a trace ID to a metric data point, so disparate types of data can be shown together in a unified UI. Imagine if, while looking at metrics, you could click a button and also see traces and logs for the same set of requests. That’s what exemplars bring to the table.
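As a mental model, an exemplar can be pictured as a metric bucket that remembers one example request. The sketch below is my own simplification, not the OpenTelemetry SDK or a Prometheus client: `LatencyHistogram`, `Exemplar`, and the bucket bounds are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    """One concrete observation kept alongside an aggregated bucket."""
    value: float
    trace_id: str


class LatencyHistogram:
    """Toy histogram that keeps the most recent exemplar per bucket."""

    def __init__(self, bounds=(0.1, 0.5, 1.0)):
        self.bounds = tuple(bounds)
        size = len(self.bounds) + 1  # final bucket catches everything larger
        self.counts: List[int] = [0] * size
        self.exemplars: List[Optional[Exemplar]] = [None] * size

    def observe(self, seconds: float, trace_id: Optional[str] = None) -> None:
        # Find the first bucket whose upper bound covers the observation.
        index = next(
            (i for i, bound in enumerate(self.bounds) if seconds <= bound),
            len(self.bounds),
        )
        self.counts[index] += 1
        if trace_id is not None:
            self.exemplars[index] = Exemplar(seconds, trace_id)

    def trace_for_bucket(self, index: int) -> Optional[str]:
        """The 'click through' step: from a bucket to an example trace."""
        exemplar = self.exemplars[index]
        return exemplar.trace_id if exemplar else None


if __name__ == "__main__":
    histogram = LatencyHistogram()
    histogram.observe(0.05, trace_id="trace-fast")
    histogram.observe(2.0, trace_id="trace-slow")
    # From the slowest bucket, jump straight to a trace worth inspecting.
    print(histogram.trace_for_bucket(len(histogram.bounds)))
```

The metric stays cheap and aggregated, while the stored trace ID gives a UI something to link to when you want the full story behind a slow bucket.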

Prometheus 3.0 was released along with a nice presentation and deep dive into the new UI and features. It’s already a standard part of many Kubernetes stacks, so there’s something for everyone here. The Prometheus team also made a post about the 3.0 release. One big item worth checking out is the performance gains since Prometheus 2.0. Some of us might see more than 200% improvement in resource usage compared to 2.0.

In conversation, I'm hearing that the challenge of deployments also involves a bit of Not Invented Here (NIH). Every shop operates in its own unique way. That’s often seen as one of the benefits of Kubernetes. What’s not unique, and not a benefit, is the fact that developer experience (DX) is still broken. In other words, the majority of solutions around Kubernetes work great for Ops teams, but are still considered broken for Dev teams. No wonder so many teams spent years building a platform, only to find that their developers loathe it.

If the point of DevOps is to unite Dev and Ops teams, then why are so many of us still stuck here? Why are engineering orgs happy with poor DX as the status quo? Conversations with attendees have made it clear most are still struggling when it comes to DX.

KubeCon attendees frequently noted that in addition to understanding the structure of YAML manifests, developers are also saddled with the responsibility of learning what the various K8s resources do and how Kubernetes works. It’s a lot, and when I heard from attendees who were happy with their DX, it was usually because they reduced or wholly removed this burden from developers. In other words, better abstractions were put to use.
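To make "better abstractions" a bit more concrete, here is a sketch of the idea: developers supply three or four values, and the platform expands them into the full manifest. The `deployment_from_app` helper and its defaults are hypothetical, not something any attendee described, though the output follows the standard `apps/v1` Deployment shape.

```python
import json


def deployment_from_app(name: str, image: str, port: int, replicas: int = 2) -> dict:
    """Expand a small developer-facing spec into a Deployment manifest.

    Developers never see selectors, label wiring, or pod templates;
    the platform team owns those details in one place.
    """
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels below.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_from_app("checkout", "registry.example.com/checkout:1.4", 8080)
    print(json.dumps(manifest, indent=2))
```

Whether the abstraction is a function, a CRD, or a platform UI matters less than the principle: the fields developers must understand shrink from hundreds to a handful.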

KubeCon was a lot, and I'm sure I missed some interesting topics in this post. One attendee, Nathan Bohman, shared that his smartwatch tracked his walk around just the vendor area as 6.5+ miles in total. There was a lot of ground to cover at KubeCon.

What about the event itself? I'm empathetic to the challenge of making a lot of meals at scale. Jokes aside, the lunch bags were pretty good, although the screen printed cookies were an eyebrow raiser. Funnily, it was easier to get coffee than it was to grab water. Maybe the CNCF were expecting a massive number of pagers to go off in unison?

Overall, there was too much for any one post to cover. If you think I missed something major in this walkthrough, let me know. Or, take it as an opportunity to share your own personal highlights from KubeCon.

At Northflank we’re on a mission to reduce the effort and investment required to build in the cloud. The conversations we had were a nice validation of that mission. I appreciate everyone who chatted with us at the booth, gave a talk, or otherwise shared the technologies and techniques that drive their approach to the cloud.

Until next time! I'll see you in London for the next KubeCon EU.