Top Modal Sandboxes alternatives for secure AI code execution

If you're building AI agents, code interpreters, or platforms that execute untrusted code, Modal Sandboxes might be on your radar. But depending on your needs (self-hosting, microVM isolation, flexible deployment, or enterprise features), you may need to explore alternatives.

This guide examines the leading Modal Sandboxes alternatives, comparing isolation technologies, deployment options, pricing models, and production readiness.

We wrote a detailed explanation of container isolation and everything you need to know about it here. Use it as a primer before going deeper into Modal Sandboxes alternatives.

Northflank delivers production-proven microVM isolation (Kata Containers with Cloud Hypervisor) plus gVisor. It accepts any OCI container image and offers unlimited sandbox duration, BYOC deployment, and complete platform capabilities, handling 2M+ workloads monthly.

- E2B.dev uses Firecracker microVMs with excellent AI agent SDKs but limits sessions to 24 hours

- Modal provides gVisor containers with persistent storage, but it is a Python-centric platform that requires SDK-defined images

- Daytona.io offers sub-90ms provisioning for AI workflows and is the newest entrant in the space

- Vercel Sandbox leverages Firecracker for dev environments, with 45-minute session limits

- Cloudflare Workers uses V8 isolates for instant edge execution but keeps no persistent state

Modal Sandboxes let you dynamically create containers and execute arbitrary code inside them. Built on gVisor isolation, they support:

- Running code in containers defined through Modal's SDK

- Persistent data across sessions via network filesystems

- Network tunneling and port exposure

- Streaming input/output for interactive processes

While Modal Sandboxes can execute any language, you must use Modal's Python SDK to define custom images; you can't bring arbitrary OCI images. This locks you into Modal's image-building process.

Modal excels at secure code execution, but teams often need:

- Any OCI image support: Use existing containers without SDK requirements

- Self-hosting or BYOC: Run in your AWS/GCP/Azure accounts

- MicroVM isolation: Hardware-level isolation beyond gVisor

- Non-Python orchestration: SDKs in other languages

- Enterprise features: audit logs, compliance tools

- Transparent AND affordable pricing: Clear cost structure at scale

- Complete infrastructure: Databases, APIs, and more beyond sandboxes

| Platform | Isolation | Images | Persistence | Deploy options | Best for |
|---|---|---|---|---|---|
| Northflank | microVM (Kata/CLH) & gVisor | Any OCI image | Unlimited | Managed or BYOC | Complete platform + sandboxes |
| E2B.dev | microVM (Firecracker) | Pre-built + custom | 24hr max | Managed only | AI agent tools |
| Modal | gVisor | SDK-defined only | Yes (network FS) | Managed only | ML/AI workloads |
| Daytona.io | Docker/Kata | Docker images | Limited | Managed only | Quick AI demos |
| Vercel Sandbox | microVM (Firecracker) | Limited | 45 min max | Vercel only | Dev previews |
| Cloudflare Workers | V8 Isolates | N/A | No | Cloudflare only | Edge functions |
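One way to use the table is to encode its columns as data and filter them against your own requirements. The following is a minimal Python sketch, not a tool offered by any of these vendors: the `Platform` fields and the `shortlist` helper are hypothetical, and the boolean values are a simplified reading of the table (for example, Daytona's "Docker/Kata" is counted as microVM-capable, and only "Any OCI image" counts as arbitrary-image support).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Platform:
    # Hypothetical, simplified encoding of one row of the comparison table.
    name: str
    microvm: bool                       # offers microVM isolation
    any_oci_image: bool                 # accepts arbitrary OCI images
    byoc: bool                          # can deploy into your own cloud account
    max_session_hours: Optional[float]  # None = no limit stated in the table


# Assumed reading of the table: Daytona's "Docker/Kata" counts as microVM-capable,
# and Cloudflare Workers' lack of persistence is modeled as a 0-hour session.
PLATFORMS = [
    Platform("Northflank", True, True, True, None),
    Platform("E2B.dev", True, False, False, 24),
    Platform("Modal", False, False, False, None),
    Platform("Daytona.io", True, False, False, None),
    Platform("Vercel Sandbox", True, False, False, 0.75),
    Platform("Cloudflare Workers", False, False, False, 0.0),
]


def shortlist(
    platforms: List[Platform],
    need_microvm: bool = False,
    need_any_oci: bool = False,
    need_byoc: bool = False,
    min_session_hours: float = 0.0,
) -> List[str]:
    """Return the names of platforms that satisfy every requested constraint."""
    matches = []
    for p in platforms:
        if need_microvm and not p.microvm:
            continue
        if need_any_oci and not p.any_oci_image:
            continue
        if need_byoc and not p.byoc:
            continue
        # A stated session cap shorter than required rules the platform out.
        if p.max_session_hours is not None and p.max_session_hours < min_session_hours:
            continue
        matches.append(p.name)
    return matches


print(shortlist(PLATFORMS, need_microvm=True, min_session_hours=8))
```

Adding or removing keyword arguments narrows or widens the list; if your own reading of a vendor's capabilities differs from the table, change the corresponding row rather than the filter.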

Northflank stands out by offering multiple isolation technologies and deployment flexibility. Since 2021, we’ve processed millions of workloads for companies like Writer and Sentry.

- Any OCI image: Bring any container from Docker Hub, GitHub, etc.

- The most complete isolation: Kata Containers (microVM) or gVisor per workload

- True BYOC: Deploy in your cloud accounts with full control

- Multi-language SDKs: Not locked to Python orchestration

- Complete platform: Run databases, APIs, cron jobs alongside sandboxes

- Transparent pricing: Simple usage-based billing

Northflank Pricing

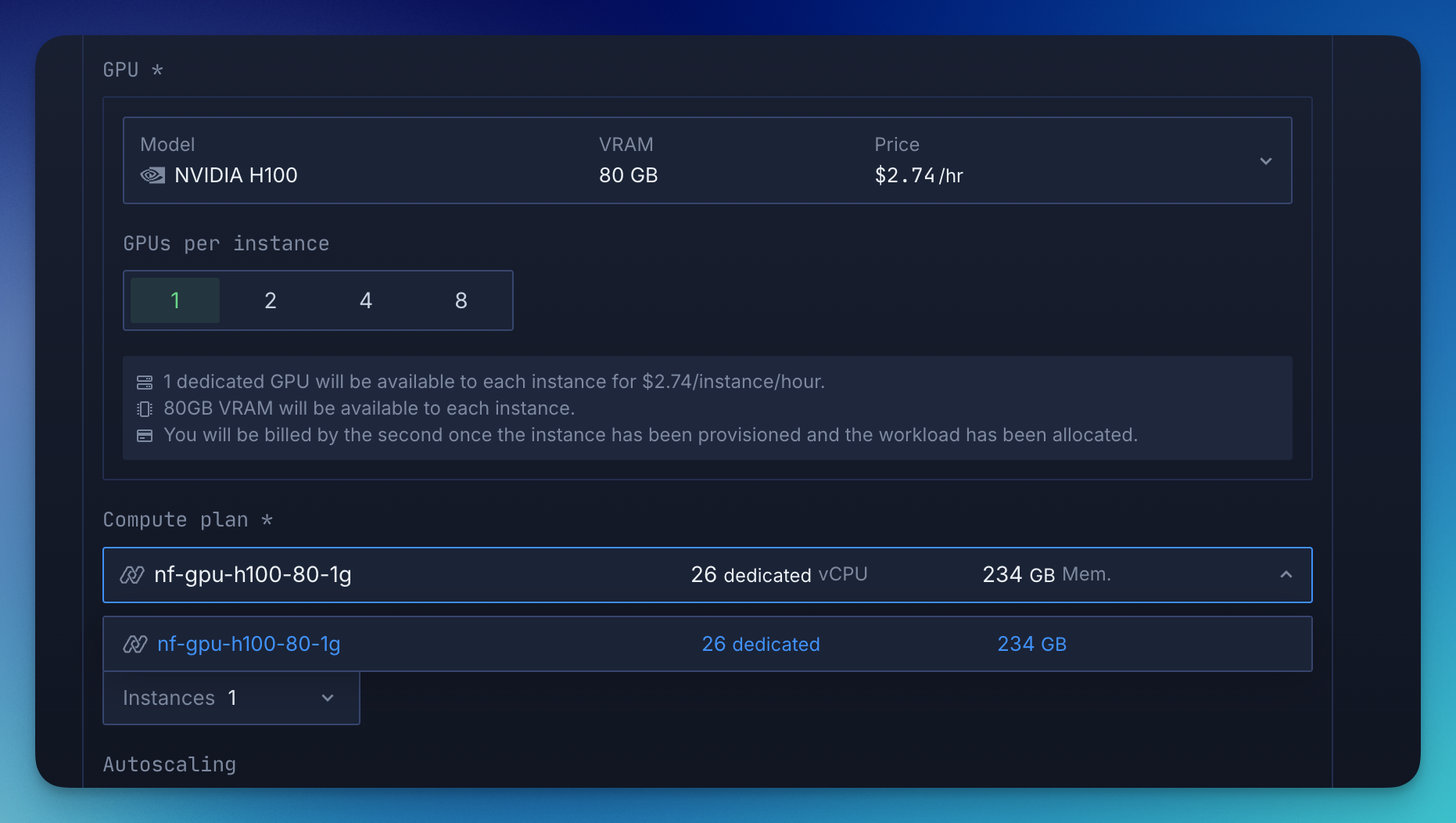

CPU $0.01667 per vCPU/hour, RAM $0.00833 per GB/hour, NVIDIA H100 $2.74/hour, NVIDIA B200 $5.87/hour

Modal Sandboxes Pricing

CPU $0.0473 per core/hour, RAM $0.0080 per GB/hour, NVIDIA H100 $3.95/hour, NVIDIA B200 $6.25/hour

Note that when running GPU workloads, Modal bills CPU, GPU, and RAM separately, with a default minimum reservation of 0.125 CPU cores per function.

Consider an H100 instance with 26 vCPUs, 234 GB of RAM, and 500 GB of NVMe storage.

Modal pricing breakdown:

- H100 GPU: $3.95/hour

- 26 CPU cores: 26 × $0.0473 = $1.23/hour

- 234GB RAM: 234 × $0.0080 = $1.87/hour

- 500 GB storage (billed as 25 GB of additional RAM): 25 × $0.0080 = $0.20/hour

- Total Modal cost: ~$7.25/hour
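The itemized breakdown above can be reproduced with a small calculator, which also makes it easy to plug in other instance sizes. This is a sketch built only from the rates quoted in this article, not Modal's billing logic: the helper name `modal_hourly_cost` is hypothetical, and the 20:1 disk-to-RAM ratio is an assumption inferred from the "500 GB billed as 25 GB" line.

```python
from typing import Dict

# Modal rates as quoted in this article (USD per hour); check current pricing.
H100_PER_HOUR = 3.95
CPU_CORE_PER_HOUR = 0.0473
RAM_GB_PER_HOUR = 0.0080
MIN_CPU_CORES = 0.125  # default minimum CPU reservation per function
# Assumption: the 500 GB -> 25 GB conversion above implies that 20 GB of disk
# is billed like 1 GB of RAM.
DISK_GB_PER_RAM_GB = 20


def modal_hourly_cost(h100_gpus: int, cpu_cores: float, ram_gb: float, disk_gb: float) -> Dict[str, float]:
    """Itemize an hourly bill the same way as the breakdown above (hypothetical helper)."""
    billed_cores = max(cpu_cores, MIN_CPU_CORES)  # never bill below the minimum reservation
    items = {
        "gpu": h100_gpus * H100_PER_HOUR,
        "cpu": billed_cores * CPU_CORE_PER_HOUR,
        "ram": ram_gb * RAM_GB_PER_HOUR,
        "disk": disk_gb / DISK_GB_PER_RAM_GB * RAM_GB_PER_HOUR,
    }
    items["total"] = sum(items.values())  # summed before "total" is added
    return items


for item, cost in modal_hourly_cost(h100_gpus=1, cpu_cores=26, ram_gb=234, disk_gb=500).items():
    print(f"{item:>5}: ${cost:.2f}/hour")
```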

Northflank: $2.74/hour all-inclusive

Northflank's GPU pricing is all-inclusive. The $2.74/hour for an H100 includes GPU, CPU, and RAM.

For GPU workloads, Northflank is approximately 62% cheaper than Modal.

For CPU-only workloads, Northflank's CPU pricing is about 65% less expensive than Modal's.
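Both percentages follow from the same formula, 1 − (Northflank price ÷ Modal price). The short Python sketch below recomputes them from the figures quoted in this section; `percent_cheaper` is a hypothetical helper name. It compares the quoted CPU rates as-is, so if the two providers define a CPU unit differently (for example vCPU versus physical core), the rates would need to be normalized to the same unit first.

```python
def percent_cheaper(ours: float, theirs: float) -> float:
    """Percentage by which `ours` undercuts `theirs` (positive means cheaper)."""
    return (1 - ours / theirs) * 100


# GPU: all-inclusive Northflank H100 rate vs. the itemized Modal total above.
gpu_saving = percent_cheaper(2.74, 7.25)
# CPU: per-hour CPU rates quoted earlier in this section, compared unit-for-unit.
cpu_saving = percent_cheaper(0.01667, 0.0473)

print(f"GPU: {gpu_saving:.0f}% cheaper, CPU: {cpu_saving:.0f}% cheaper")
```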

E2B specializes in AI code execution with Firecracker microVMs and polished SDKs. Great for hackathons and demos but lacks production features.

Pros: 150ms cold starts, nice SDKs, 24hr persistence

Cons: No self-hosting, expensive at scale, sandbox-only

Daytona is the newest player, focusing on sub-90ms provisioning for AI workflows. It's fast but still maturing.

Pros: Blazing fast starts, Docker ecosystem

Cons: Limited persistence, young platform

Vercel Sandbox is a beta offering with Firecracker isolation, tightly integrated with Vercel's platform.

Pros: Great DX for Vercel users

Cons: 45-min limits, Vercel-only

Cloudflare Workers runs code in V8 isolates for instant execution at 200+ locations globally.

Pros: Zero cold starts, global by default

Cons: No persistence, JS/WASM only

With Modal, you must define images through its Python SDK. Northflank accepts any OCI-compliant image from any registry (Docker Hub, GitHub Container Registry, or your private registry) without modifications.

While Modal uses gVisor only, Northflank gives you access to gVisor and true microVM isolation (Kata Containers) based on your security needs.
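This article doesn't show Northflank's own configuration interface, so as a generic illustration of what choosing isolation "per workload" means: in plain Kubernetes, a Pod's `runtimeClassName` field selects a RuntimeClass, which can be backed by gVisor (handler `runsc`) or by Kata Containers. The Python sketch below builds such a Pod manifest; `sandbox_pod` is a hypothetical helper, and the RuntimeClass names `gvisor` and `kata-clh` are assumptions, since real names depend on how a cluster's nodes are configured.

```python
import json

# Hypothetical RuntimeClass names; actual names depend on the cluster setup.
RUNTIME_CLASS_BY_ISOLATION = {
    "gvisor": "gvisor",     # e.g. a RuntimeClass whose handler is gVisor's runsc
    "microvm": "kata-clh",  # e.g. Kata Containers running on Cloud Hypervisor
}


def sandbox_pod(name: str, image: str, isolation: str) -> dict:
    """Build a minimal Kubernetes Pod manifest that pins its isolation runtime."""
    if isolation not in RUNTIME_CLASS_BY_ISOLATION:
        raise ValueError(f"unknown isolation level: {isolation!r}")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # runtimeClassName applies to this Pod only, so workloads on the
            # same cluster can run under different isolation technologies.
            "runtimeClassName": RUNTIME_CLASS_BY_ISOLATION[isolation],
            "containers": [{"name": "sandbox", "image": image}],
            "restartPolicy": "Never",
        },
    }


print(json.dumps(sandbox_pod("untrusted-run", "python:3.12-slim", "microvm"), indent=2))
```

Because `kubectl apply` accepts JSON as well as YAML, the printed manifest could be applied directly to a cluster that defines these RuntimeClasses.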

- Your cloud: Deploy in your AWS/GCP/Azure accounts

- Compliance: Keep data in your VPC

- Hybrid: Mix Northflank-managed and self-hosted

Northflank runs your complete stack, unlike sandbox-only vertical products:

- Secure code execution

- Backend APIs

- Databases

- Scheduled jobs

- GPU workloads

With 2M+ monthly workloads, Northflank has solved the operational challenges others haven't:

- Multi-tenant isolation

- Resource quotas

- Audit logging

- Enterprise SSO

Choose Modal if: You're Python-first and comfortable with SDK-defined images

Choose E2B if: You need quick AI demos with nice SDKs

Choose Northflank if: You want to use any OCI image, need production-grade isolation, deployment flexibility, and a complete platform

Specialized sandboxing tools have their place, but modern AI applications need more than just isolated code execution.

Northflank leads because it's the only platform that combines:

- Enterprise-grade microVM isolation (Kata Containers using Cloud Hypervisor, CLH)

- A complete platform for all your workloads

- Production scale (2M+ microVMs monthly)

- True infrastructure flexibility (managed or BYOC)

- Transparent, predictable pricing

Don't settle for a sandbox when you need a platform.

With Northflank, secure AI execution is just one part of a comprehensive infrastructure solution that grows with your needs.

Try Northflank today on your own or book a demo with a Northflank engineer.