Top 5 SaladCloud alternatives for production GPU workloads in 2026

SaladCloud alternatives provide different approaches to deploying GPU workloads, from distributed networks to enterprise-grade infrastructure platforms.

If you need production reliability with stable infrastructure, full-stack platform capabilities beyond GPU access, or specific deployment control, understanding your options helps you choose the infrastructure that matches your technical and operational needs.

-

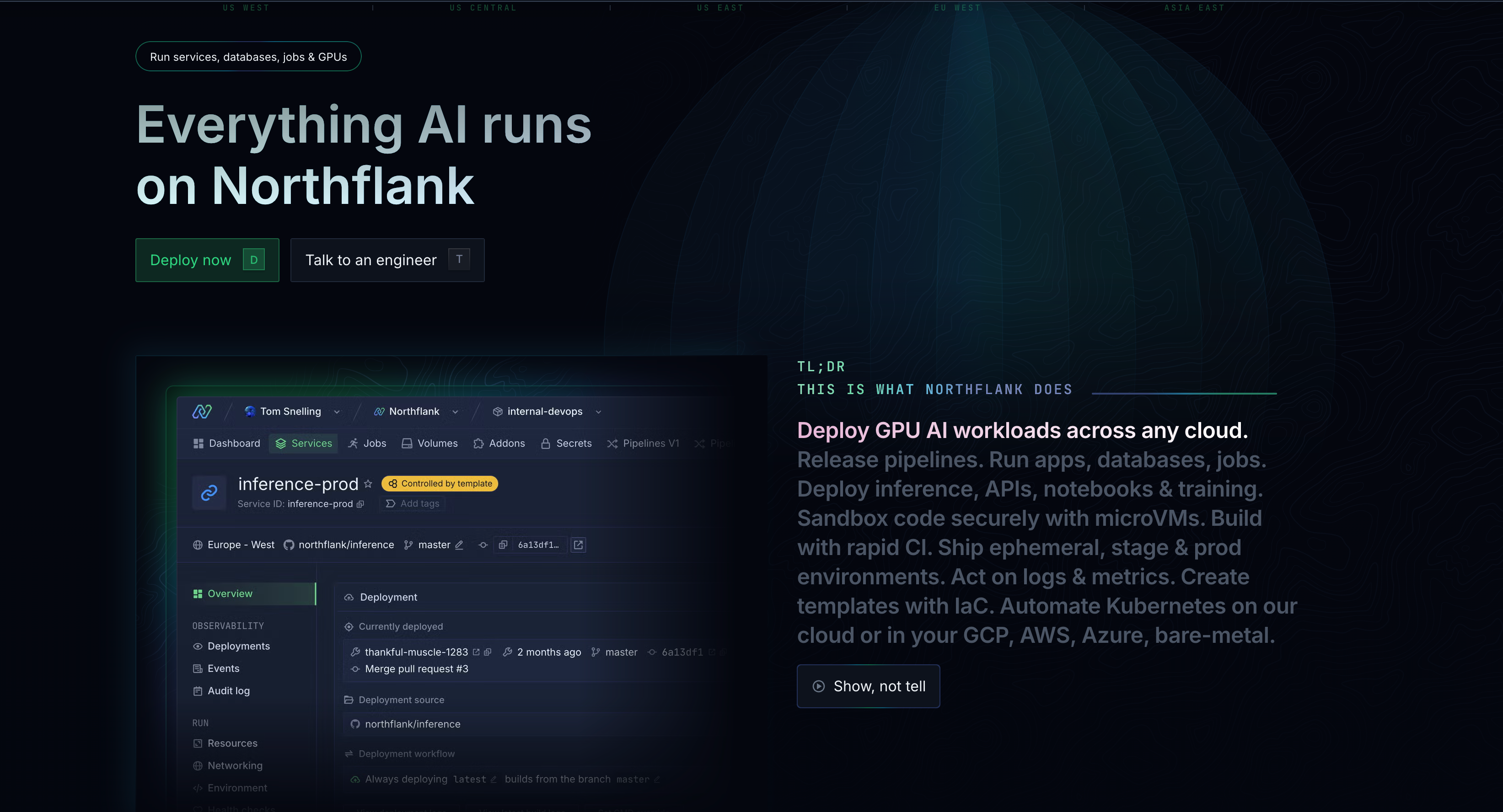

Northflank - Deploy GPU workloads alongside your entire application stack (databases, APIs, jobs, CI/CD) across AWS, GCP, Azure, Oracle Cloud, Civo, or bare-metal from one platform.

Northflank provides stable infrastructure with production-grade reliability and a full-stack platform where GPU services work alongside databases, CI/CD, and application hosting. Access both enterprise datacenter GPUs (H100, H200, B200, A100, L40S, etc.) and professional RTX GPUs with unified workflows across your entire application.

-

Vast.ai - Marketplace model connecting to consumer and datacenter GPUs with flexible rental options

-

RunPod - Community and Secure Cloud options with serverless capabilities across 30+ global regions

-

Lambda Labs - 1-Click Clusters built for academic researchers and AI teams with ML-ready environments

-

AWS/GCP/Azure - Traditional hyperscalers with GPU instances integrated into broader cloud ecosystems

Before examining specific platforms, understanding evaluation criteria helps you match alternatives to your requirements.

- Infrastructure reliability and node stability: Does your workload require stable, production-grade infrastructure or can it handle interruptions? Some GPU platforms use distributed node architectures where instances can be interrupted without warning as nodes go offline. Other platforms provide infrastructure where GPU instances remain stable and available. Consider whether your application needs consistent uptime or can architect for fault tolerance with interruption-prone environments.

- Platform scope and integrated capabilities: Do you need just GPU compute, or are you deploying complete applications? Some platforms focus on containerized GPU workloads, while others provide unified infrastructure for GPUs, databases, APIs, and CI/CD. The scope affects how many vendors you'll need to coordinate and how integrated your development workflow can be.

- Infrastructure control and deployment flexibility: Can you deploy in your own cloud accounts? Some platforms operate on their own infrastructure, while others support BYOC (Bring Your Own Cloud) capabilities. This matters for teams with existing cloud commitments, compliance requirements, or data residency needs.

- GPU type requirements: Do you need specific GPU models or access to the latest datacenter hardware? Platforms vary in their GPU offerings, from consumer GPUs (RTX series) to enterprise datacenter GPUs (H100, A100, L40S) to professional workstation GPUs. Teams may need consistent access to specific GPU types depending on workload requirements.

- Developer workflow integration: Does the platform support your development process? Git integration, automated builds, preview environments, and comprehensive observability affect how quickly teams ship changes. Some platforms provide container orchestration with API access, while others offer broader DevOps capabilities integrated into the workflow.
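To make the fault-tolerance criterion concrete, here is a minimal Python sketch of the checkpoint-and-resume pattern a workload typically needs on interruption-prone nodes. It is not tied to any provider's API: the function names, the JSON checkpoint format, and the `stop_after` parameter (which only simulates a node going offline) are all illustrative assumptions.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if no checkpoint exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"next_item": 0, "results": []}


def save_checkpoint(path, state):
    """Write state to a temp file, then rename it over the checkpoint.

    The rename is atomic on the same filesystem, so an interruption
    mid-write cannot leave a half-written checkpoint behind.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def run_job(items, path, process, stop_after=None):
    """Process items in order, checkpointing after each one.

    stop_after is a hypothetical test hook: it simulates the node
    disappearing after that many items have been processed in this run.
    """
    state = load_checkpoint(path)
    done_this_run = 0
    while state["next_item"] < len(items):
        if stop_after is not None and done_this_run >= stop_after:
            return state  # simulated interruption
        state["results"].append(process(items[state["next_item"]]))
        state["next_item"] += 1
        save_checkpoint(path, state)
        done_this_run += 1
    return state
```

Calling `run_job` a second time with the same checkpoint path picks up at `next_item` rather than starting over. In practice the checkpoint would need to live on storage that survives the node, and a workload that cannot be restructured this way is a better fit for stable infrastructure.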

We'll review the top SaladCloud alternatives based on reliability characteristics, platform scope, deployment flexibility, and integrated capabilities to help your team make an informed decision.

Northflank approaches GPU deployment differently than distributed GPU networks by treating GPU workloads as components within your complete application architecture rather than isolated compute resources.

The platform lets you deploy GPU services alongside managed databases (PostgreSQL with pgvector, MySQL, MongoDB, Redis), web applications, APIs, background jobs, and scheduled tasks. When building an AI application, for example, you deploy your frontend, backend API, database, and GPU-powered inference service within the same project using Git-based workflows.

Northflank delivers stable datacenter infrastructure with production-grade reliability. Your GPU workloads run in environments designed for consistent availability, and you keep the flexibility to deploy on Northflank's managed cloud or in your own cloud accounts.

Key capabilities of Northflank for teams building AI applications

- Multi-cloud deployment: Works across AWS, GCP, Azure, Oracle Cloud, Civo, and bare-metal without vendor lock-in. You can deploy on Northflank's managed cloud or connect your own cloud accounts (BYOC) to maintain existing relationships and billing structures. The platform provides 6+ managed cloud regions and access to 600 BYOC regions through this multi-cloud approach.

- Git-based deployments: Connect to GitHub, GitLab, or Bitbucket repositories. Each commit triggers automated builds and deployments. Preview environments automatically spin up for pull requests, giving you isolated testing environments before merging changes. This workflow applies to your entire stack, including GPU workloads.

- Built-in observability: Includes real-time log tailing with filtering and search, plus performance metrics for GPU utilization, memory usage, network traffic, and storage. Cost analytics show spending across different providers. Alerts integrate with Slack, email, or webhooks. These capabilities work without configuring separate monitoring tools.

- Security features: Include private networking between services, VPC support, role-based access controls, audit logs, and SAML SSO. You can deploy in your own Kubernetes clusters (EKS, GKE, AKS) for maximum infrastructure control.

- GPU options on Northflank: The platform supports NVIDIA B200, H200, H100, A100, L4, L40S, RTX Pro 6000 Blackwell Server Edition, and other GPU types across multiple cloud providers. GPU time-slicing and NVIDIA MIG let you run multiple independent workloads on provisioned GPUs to optimize resource utilization.

Northflank's pricing structure

Sandbox tier

- Free resources to test workloads

- 2 free services, 2 free databases, 2 free cron jobs

- Always-on compute with no sleeping

Pay-as-you-go

- Per-second billing for compute (CPU and GPU), memory, and storage

- No seat-based pricing or commitments

- Deploy on Northflank's managed cloud (6+ regions) or bring your own cloud (600 BYOC regions across AWS, GCP, Azure, Civo)

- GPU pricing: NVIDIA A100 40GB at $1.42/hour, A100 80GB at $1.76/hour, H100 at $2.74/hour, H200 at $3.14/hour, B200 at $5.87/hour

- Bulk discounts available for larger commitments
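As a rough illustration of what per-second billing means for the GPU rates listed above, the Python sketch below prorates each hourly rate to the second. It assumes the listed price scales linearly with time and covers the GPU only; CPU, memory, and storage charges and any bulk discounts are ignored. The `gpu_cost` helper and the dictionary keys are hypothetical names, so use the pricing calculator for real estimates.

```python
# Hourly GPU rates in USD, copied from the list above.
HOURLY_RATES_USD = {
    "A100-40GB": 1.42,
    "A100-80GB": 1.76,
    "H100": 2.74,
    "H200": 3.14,
    "B200": 5.87,
}


def gpu_cost(gpu, seconds, gpu_count=1):
    """Estimate GPU-only cost in USD, assuming linear per-second proration."""
    per_second = HOURLY_RATES_USD[gpu] / 3600
    return per_second * seconds * gpu_count


# A 90-minute job on one H100, and a 10-minute burst on two A100 80GB GPUs.
print(round(gpu_cost("H100", 90 * 60), 2))
print(round(gpu_cost("A100-80GB", 10 * 60, gpu_count=2), 2))
```

Under these assumptions, a job that finishes in 10 minutes is charged for 10 minutes rather than a full hour, which matters most for short or bursty workloads.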

Enterprise

- Custom requirements with SLAs and dedicated support

- Invoice-based billing with volume discounts

- Hybrid cloud deployment across AWS, GCP, Azure

- Run in your own VPC with managed control plane

- Secure runtime and on-prem deployments

- Audit logs, global backups, and HA/DR

- 24/7 support and FDE onboarding

Use the Northflank pricing calculator for exact cost estimates based on your specific requirements, and see the pricing page for more details.

When does Northflank make sense as a SaladCloud alternative?

Northflank fits teams that need:

- Production reliability: Customer-facing applications requiring stable infrastructure and consistent availability

- Full-stack platform: Unified interface for GPU compute, databases, APIs, and CI/CD pipelines (both GPU and CPU workloads)

- Developer workflows: Git-based deployments, preview environments, and integrated observability

- Infrastructure control: BYOC deployment in your own AWS, GCP, Azure, Oracle Cloud, Civo, or bare-metal infrastructure for compliance or data residency

Start by creating a Northflank account to test GPU workloads alongside your application infrastructure, or request your GPU cluster to discuss specific requirements. Learn more about GPU workloads on Northflank, explore available GPU instances, or review the documentation for implementation details.

Vast.ai operates as a marketplace connecting users to GPU resources from both consumer hardware providers and datacenter operators, creating a decentralized compute network.

Key features

- Marketplace model: Browse available GPUs with pricing set by individual providers, creating competition that can drive costs down for various GPU types.

- GPU variety: Access ranges from consumer RTX GPUs to datacenter A100s and H100s depending on marketplace availability at any given time.

- Rental flexibility: Choose between on-demand instances or interruptible compute for workloads that can handle interruptions.

Best for: Teams with fault-tolerant workloads who want marketplace pricing dynamics and can manage potential interruptions, though reliability varies across different providers in the marketplace.

RunPod offers GPU deployment across 30+ geographic regions through Secure Cloud (tier-3/tier-4 data centers) and Community Cloud (individual GPU providers).

Key features

- Serverless GPU: Automatic scaling and idle shutdown for variable workloads with pay-per-use pricing.

- Custom containers: Docker containers for exact runtime environments or pre-built templates for common frameworks.

- Automation tools: CLI and API for integration with CI/CD pipelines and programmatic deployment.

Best for: Teams needing distributed deployment options with serverless capabilities, though Community Cloud may have lower uptime guarantees and the platform focuses on GPU compute without integrated databases or comprehensive development workflows.

Lambda Labs targets academic researchers and AI teams through pre-configured GPU access built for machine learning workflows.

Key features

- 1-Click Clusters: Interconnected GPUs with pre-installed PyTorch, TensorFlow, CUDA, and Jupyter.

- GPU options: NVIDIA HGX B200, H100, A100, and GH200 instances with Lambda Private Cloud available.

- InfiniBand networking: NVIDIA Quantum-2 for distributed training across multiple GPU nodes.

Best for: University research groups and academic projects that need ML-ready environments without infrastructure setup complexity, though teams need separate solutions for databases and production infrastructure management.

Traditional cloud providers offer GPU instances as part of comprehensive cloud platforms with deep integration across storage, networking, security, and managed services.

- AWS capabilities: P5 instances with H100 GPUs, SageMaker, and integration with S3, Lambda, and RDS.

- GCP capabilities: A2 and A3 instances with A100/H100 GPUs, TPU alternatives, Vertex AI, and BigQuery integration.

- Azure capabilities: NC-series with NVIDIA GPUs, Azure ML integration, and Microsoft ecosystem connectivity.

Best for: Enterprises with existing cloud investments who need GPU capabilities within current infrastructure and established compliance frameworks, though GPU availability can be constrained during high-demand periods.

Use the comparison table to match your deployment needs, reliability requirements, and application architecture with the platform that addresses your specific requirements.

| Alternative | Best for | Key advantages | GPU options | Infrastructure model |
|---|---|---|---|---|
| Northflank | Production AI applications needing unified platform capabilities | Multi-cloud deployment with GPUs, databases, apps, jobs, and CI/CD; BYOC support; Git-based workflows; stable infrastructure | B200, H200, H100, A100, L40S, RTX Pro 6000, L4, GH200 and more | Stable datacenter infrastructure (managed cloud or BYOC) |
| Vast.ai | Cost-sensitive teams comfortable with marketplace dynamics | Marketplace pricing competition; GPU variety; flexible rental options | RTX series to H100s (varies by marketplace) | Marketplace of consumer and datacenter providers |
| RunPod | Distributed deployment with serverless needs | Serverless autoscaling; global regions; Community and Secure Cloud options | H100, A100, RTX 4090, 3090 and more | Secure Cloud data centers and Community Cloud |
| Lambda Labs | Academic research and ML experimentation | Pre-configured ML environments; InfiniBand networking; 1-Click Clusters | B200, H100, A100, GH200 | Lambda data centers and Private Cloud |
| AWS/GCP/Azure | Existing cloud ecosystem integration | Comprehensive cloud services; managed AI platforms; compliance frameworks | H100, A100, V100, L4 and more | Hyperscaler data centers |

GPU platforms take different approaches based on workload requirements. Some focus on distributed networks for cost optimization, others provide marketplace pricing flexibility, while comprehensive platforms offer complete application deployment with integrated infrastructure.

Northflank treats GPU workloads as integrated components within your full application stack.

With Northflank, you can deploy your databases, APIs, background jobs, and GPU services within the same project using unified workflows across AWS, GCP, Azure, Oracle Cloud, Civo, or bare-metal. The platform provides stable datacenter infrastructure with support for both enterprise datacenter GPUs (H100, H200, B200, A100, L40S, etc.) and professional RTX GPUs.


These resources provide additional context on GPU infrastructure options, pricing models, and deployment strategies to inform your platform decision.

- What is a cloud GPU? A guide for AI companies using the cloud - Learn how cloud GPUs work, when to use them versus local hardware, and how platforms like Northflank let you attach GPUs to any workload.

- What are spot GPUs? Complete guide to cost-effective AI infrastructure - Understand spot GPU instances, interruption handling, and how to optimize costs while maintaining reliability for AI workloads.

- 7 cheapest cloud GPU providers - Compare pricing across GPU providers including cost optimization strategies and when to prioritize reliability over price.

- The best serverless GPU providers - Review serverless GPU platforms for on-demand workloads, including orchestration capabilities and production-grade features.