vLLM vs TensorRT-LLM: Key differences, performance, and how to run them

Large language models have moved from research into production, powering copilots, assistants, search, and enterprise applications. But serving them efficiently remains one of the hardest challenges in AI infrastructure.

If you’ve looked into high-performance inference backends, you’ve likely come across vLLM and TensorRT-LLM. Both are optimized systems for running LLMs at scale, with a focus on squeezing the most out of GPUs.

At first glance, they might seem to overlap. Both promise lower latency, higher throughput, and better GPU utilization. But under the hood, they take very different approaches. Knowing these differences is crucial if you want to build an efficient, scalable, and cost-effective LLM service.

In this article, we’ll compare vLLM vs TensorRT-LLM side by side, explore their strengths and trade-offs, and show you how to deploy either on Northflank for production use.

If you are short on time, here is a high-level snapshot of how they compare.

| Feature | vLLM | TensorRT-LLM |
|---|---|---|
| Focus | High-performance general LLM inference | NVIDIA-optimized inference for maximum GPU efficiency |
| Architecture | PagedAttention + continuous batching | Optimized CUDA kernels + graph-level fusion + Tensor Cores |
| Performance | High throughput across a wide range of models | Peak performance on NVIDIA GPUs |
| Supported models | Hugging Face ecosystem (flexible) | Optimized for LLaMA, Mistral, GPT, Qwen, etc. |
| Ecosystem fit | Open-source, broad integration | NVIDIA stack (Triton, NeMo, TensorRT) |
| Hardware | GPU-first; most NVIDIA CUDA GPUs, plus AMD and other backends | NVIDIA GPUs only; best on A100, H100, L40S |
| Ease of use | Easier to integrate with HF/OSS tools | Steeper setup, tied to NVIDIA SDKs |

💭 What is Northflank?

Northflank is a full-stack AI cloud platform that helps teams build, train, and deploy models without infrastructure complexity. GPU workloads, APIs, frontends, backends, and databases run together in a single platform, so your stack stays fast, flexible, and production-ready.

vLLM is an open-source inference engine designed to maximize throughput and reduce latency when serving LLMs.

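Its key innovation is PagedAttention, which manages the attention key-value (KV) cache the way an operating system manages virtual memory. The cache is split into fixed-size blocks that do not need to be contiguous in GPU memory, and each sequence receives new blocks only as it grows. This avoids the fragmentation and over-reservation that come from pre-allocating every request's maximum length. As a result, more concurrent requests and longer context windows fit into the same GPU memory.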

This makes vLLM one of the most efficient open inference engines, widely adopted in production APIs and research.
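To make the paging idea concrete, here is a minimal sketch of a block allocator in the spirit of PagedAttention. It is not vLLM's code. The class name, the 16-token block size, and the method names are illustrative assumptions. The sketch tracks only which blocks each sequence owns, not the key/value tensors themselves, and it omits features such as block sharing between sequences.

```python
class PagedKVCache:
    """Toy block allocator in the spirit of PagedAttention (hypothetical, not vLLM's API).

    Records which fixed-size physical blocks each sequence owns; no real
    key/value tensors are stored, and block sharing/copy-on-write is omitted.
    """

    def __init__(self, num_blocks: int, block_size: int = 16) -> None:
        self.block_size = block_size                  # tokens per block (illustrative value)
        self.free_blocks = list(range(num_blocks))    # physical block ids not in use
        self.block_tables: dict[int, list[int]] = {}  # seq_id -> physical block ids, in logical order
        self.seq_lens: dict[int, int] = {}            # seq_id -> tokens cached so far

    def append_tokens(self, seq_id: int, n: int = 1) -> None:
        """Reserve space for n more tokens, allocating a block only when the last one is full."""
        table = self.block_tables.setdefault(seq_id, [])
        for _ in range(n):
            length = self.seq_lens.get(seq_id, 0)
            if length % self.block_size == 0:         # no block yet, or the last block is full
                if not self.free_blocks:
                    raise MemoryError("KV cache is full")
                table.append(self.free_blocks.pop())  # any free block works: no contiguity needed
            self.seq_lens[seq_id] = length + 1

    def free(self, seq_id: int) -> None:
        """Return a finished sequence's blocks to the shared pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.seq_lens.pop(seq_id, None)

    def reserved_slots(self) -> int:
        return sum(len(t) for t in self.block_tables.values()) * self.block_size

    def wasted_slots(self) -> int:
        # Only the unused tail of each sequence's last block is wasted.
        return self.reserved_slots() - sum(self.seq_lens.values())
```

Suppose three requests of 20, 5, and 100 tokens are cached with 16-token blocks. The cache reserves 10 blocks (160 token slots) and wastes 35 of them. A naive allocator that reserved a 2,048-token slab per request up front would instead hold 6,144 slots for the same three requests.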

Strengths:

- Exceptional performance with large batch sizes

- Long context windows supported efficiently

- Direct Hugging Face model integration

- Flexible and open-source ecosystem

Limitations:

- GPU-first; CPU support is limited

- May need more tuning to match TensorRT-LLM's peak performance on NVIDIA hardware

- Not as deeply optimized for specific NVIDIA hardware features
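Memory efficiency matters for long contexts because the KV cache grows linearly with sequence length. Each cached token stores one key vector and one value vector per layer per KV head. The back-of-the-envelope calculation below assumes a 7B-class model shaped like Llama 2 7B (32 layers, 32 KV heads, head dimension 128) stored in FP16. It also assumes a hypothetical 20 GiB of GPU memory left over for the cache. Real budgets depend on the model, GPU, and engine settings.

```python
def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int,
                             bytes_per_elem: int = 2) -> int:
    # Factor 2: every layer stores one key and one value vector per KV head.
    # bytes_per_elem=2 corresponds to FP16/BF16.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


def max_cached_tokens(kv_budget_bytes: int, bytes_per_token: int) -> int:
    # Total tokens, summed over all concurrent sequences, that fit in the budget.
    return kv_budget_bytes // bytes_per_token


GiB = 1024**3
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=32, head_dim=128)
print(per_token)                                # 524288 bytes, i.e. 512 KiB per token
print(per_token * 4096 / GiB)                   # 2.0 GiB for a single 4,096-token sequence
print(max_cached_tokens(20 * GiB, per_token))   # 40960 tokens, e.g. ten 4k-token sequences
```

Models that use grouped-query attention store fewer KV heads, which shrinks this figure proportionally. For example, 8 KV heads instead of 32 cuts it by 4x. Whatever the per-token size, the cache is much smaller than the worst case only if the allocator avoids reserving unused space.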

TensorRT-LLM is NVIDIA’s specialized inference library for large language models, built on top of TensorRT, NVIDIA's SDK for optimizing deep learning inference.

Rather than acting as a general-purpose serving backend, it compiles models into optimized engines. To extract as much performance as possible from NVIDIA GPUs, it applies graph-level optimizations such as layer fusion, uses hand-tuned fused CUDA kernels, and relies on Tensor Core acceleration. It also integrates tightly with Triton Inference Server and NeMo, making it a natural choice for enterprises already in the NVIDIA ecosystem.
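Kernel fusion is one of the techniques behind this speed. The toy below models the idea in plain Python rather than CUDA, so it cannot show a real speedup; it shows only the structure. A bias-add followed by a GELU activation can run as two "kernels" that write and re-read an intermediate buffer, or as one fused pass. The function names are hypothetical. The traffic counter is an illustrative count of element reads and writes to "global memory", not a measurement of TensorRT-LLM.

```python
import math


def gelu(x: float) -> float:
    # Tanh approximation of GELU, a common activation in transformer MLP blocks.
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x**3)))


def bias_gelu_unfused(xs: list[float], bias: list[float]) -> tuple[list[float], int]:
    """Two 'kernels': the first writes an intermediate buffer, the second reads it back."""
    tmp = [x + b for x, b in zip(xs, bias)]  # kernel 1: read xs and bias, write tmp
    out = [gelu(t) for t in tmp]             # kernel 2: read tmp, write out
    n = len(xs)
    traffic = 2 * n + n + n + n              # reads(xs, bias) + write(tmp) + read(tmp) + write(out)
    return out, traffic


def bias_gelu_fused(xs: list[float], bias: list[float]) -> tuple[list[float], int]:
    """One 'kernel': the intermediate stays in registers and never round-trips through memory."""
    out = [gelu(x + b) for x, b in zip(xs, bias)]  # read xs and bias, write out
    return out, 3 * len(xs)
```

Both versions compute identical results. The fused one moves 3n elements instead of 5n. On a GPU, where operations like these are often memory-bound, that reduction in memory traffic is where most of the gain comes from.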

Strengths:

- Peak performance on NVIDIA GPUs (A100, H100, L40S)

- Advanced graph and kernel-level optimizations

- Strong integration with NVIDIA’s enterprise stack

- Cutting-edge quantization support, including FP8 and INT4 inference

Limitations:

- NVIDIA-only: no support for AMD or other non-CUDA hardware

- More complex to set up and optimize

- Less flexible outside of NVIDIA tooling
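Quantization matters mostly because of memory: fewer bits per weight means a smaller model footprint and less data to move per generated token. The sketch below shows the simplest form of INT4 weight quantization, a per-tensor symmetric scheme, along with weight-memory arithmetic for a hypothetical 7-billion-parameter model. It is an illustration, not TensorRT-LLM's implementation. Production INT4 methods such as AWQ or GPTQ use finer-grained per-group scales and calibration. FP8 is a floating-point format and is not shown here.

```python
def quantize_int4_symmetric(weights: list[float]) -> tuple[list[int], float]:
    """Per-tensor symmetric INT4: each weight w is approximated by q * scale, with q in [-7, 7]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # map the largest magnitude to +/-7
    q = [max(-7, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]


def weight_bytes(num_params: int, bits: int) -> int:
    # Raw weight storage only; ignores scales, activations, and the KV cache.
    return num_params * bits // 8


params = 7_000_000_000
for name, bits in [("FP16", 16), ("FP8", 8), ("INT4", 4)]:
    print(name, weight_bytes(params, bits) / 1e9, "GB")  # 14.0, 7.0, 3.5
```

Each dequantized weight lies within half a quantization step (scale / 2) of the original. This loss of precision is the accuracy cost traded for a 4x reduction in weight memory relative to FP16.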

Performance:

- vLLM: Delivers top-tier throughput and latency, especially with batching and long contexts.

- TensorRT-LLM: Pushes hardware to the limit with kernel fusion and Tensor Core optimizations. For absolute peak performance on NVIDIA GPUs, TensorRT-LLM usually wins, though the margin depends on the model, GPU, and workload.

Model support:

- vLLM: Works with a broad range of Hugging Face models out of the box.

- TensorRT-LLM: Supports major open LLMs, but often requires converting checkpoints and building an optimized engine before serving.

Ease of use:

- vLLM: Easier to integrate, especially for teams already using Hugging Face.

- TensorRT-LLM: Steeper learning curve, but highly optimized once configured.

Hardware:

- vLLM: Runs on most modern NVIDIA GPUs, from consumer cards to data-center hardware, and also supports other accelerators such as AMD GPUs.

- TensorRT-LLM: Runs only on NVIDIA GPUs and is tuned primarily for data-center parts such as A100 and H100.

Ecosystem:

- vLLM: Flexible, OSS-first, fits into diverse pipelines.

- TensorRT-LLM: Best suited for enterprises already invested in NVIDIA’s AI stack.
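Performance gaps between the two engines depend on the model, GPU, and traffic pattern, so it is worth benchmarking both on your own workload before committing. The helper below is a minimal sketch for summarizing one benchmark run. It assumes you already have per-request records (latency and generated tokens) from a load generator of your choice. The `RequestRecord` type and the metric names are hypothetical, and percentiles use the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_s: float    # end-to-end latency of one request, in seconds
    output_tokens: int  # tokens generated for that request


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not values:
        raise ValueError("no values to summarize")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(records: list[RequestRecord], wall_clock_s: float) -> dict[str, float]:
    """Aggregate one run; wall_clock_s spans first request sent to last response received."""
    latencies = [r.latency_s for r in records]
    total_tokens = sum(r.output_tokens for r in records)
    return {
        "requests": len(records),
        "output_tokens_per_s": total_tokens / wall_clock_s,
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
    }
```

Running the same prompts at the same concurrency against each engine and comparing the two summaries gives a like-for-like view of the throughput/latency trade-off. Tail latency (p95) often separates the engines more clearly than averages do.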

| Use case | Best fit |
|---|---|
| Running open-source Hugging Face models quickly | vLLM |
| Maximizing throughput on NVIDIA A100/H100 GPUs | TensorRT-LLM |
| Flexible deployment across different infra | vLLM |
| Enterprise-grade NVIDIA ecosystem with Triton | TensorRT-LLM |
| Long-context window serving | vLLM |
| Peak GPU efficiency for production | TensorRT-LLM |

The short answer:

- If you want flexibility and fast integration with Hugging Face models, choose vLLM.

- If you want maximum performance and are deep in the NVIDIA ecosystem, choose TensorRT-LLM.

Choosing the right inference engine is only half the story. You also need a way to deploy it. Northflank is a full-stack cloud platform built for AI workloads, letting you run APIs, workers, frontends, backends, and databases together with GPU acceleration when you need it. The key advantage is that you do not have to stitch infrastructure together yourself.

With Northflank, you can containerize your application, assign GPU resources, and expose it as an API without extra complexity. If you want to roll your own, start with our guide on self-hosting vLLM in your own cloud account. For NVIDIA users, you can integrate TensorRT-LLM with Triton Inference Server inside the same platform for maximum GPU efficiency.
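Once either engine is exposed as a service, clients talk to it over HTTP. The sketch below builds requests for both using only the Python standard library. The vLLM side targets its OpenAI-compatible server (started with `vllm serve <model>`), which serves `/v1/chat/completions`. The Triton side targets Triton's `/v2/models/<model>/generate` endpoint. The `ensemble` model name and the `text_input`/`max_tokens`/`bad_words`/`stop_words` fields are assumptions taken from the TensorRT-LLM backend's example model repository, so adjust them to your own model configuration. The hostnames and model ID in the usage note are hypothetical.

```python
import json
import urllib.request


def _post_json(url: str, body: dict) -> urllib.request.Request:
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_vllm_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 128) -> urllib.request.Request:
    """Chat request for vLLM's OpenAI-compatible server."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return _post_json(f"{base_url.rstrip('/')}/v1/chat/completions", body)


def build_triton_request(base_url: str, prompt: str, max_tokens: int = 128,
                         model: str = "ensemble") -> urllib.request.Request:
    """Generate request for Triton serving TensorRT-LLM.

    Assumption: the model repository follows the TensorRT-LLM backend examples,
    where an `ensemble` model accepts these input fields.
    """
    body = {"text_input": prompt, "max_tokens": max_tokens, "bad_words": "", "stop_words": ""}
    return _post_json(f"{base_url.rstrip('/')}/v2/models/{model}/generate", body)


def send(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `send(build_vllm_request("http://vllm:8000", "Qwen/Qwen2.5-7B-Instruct", "Hello!"))` would query a vLLM service reachable at the hypothetical hostname `vllm`. Keeping request construction separate from sending makes it easy to point the same client code at either backend when running them side by side.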

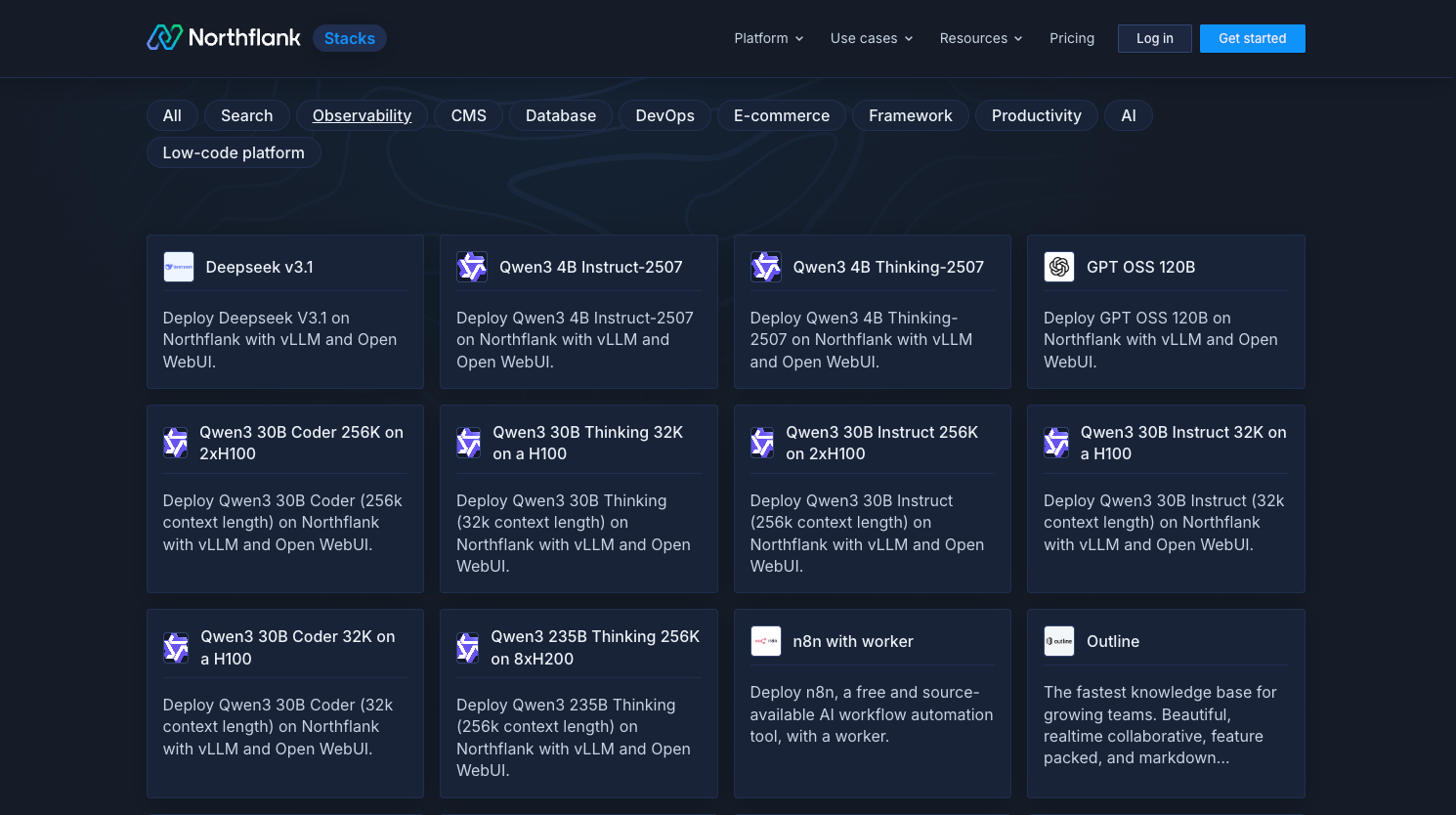

If speed matters, you can skip setup entirely with one-click templates. For example, deploy vLLM on AWS with Northflank in a single step, or explore the Stacks library for ready-to-use templates configured to run vLLM with models like DeepSeek, gpt-oss, and Qwen. TensorRT-LLM can also be containerized and scaled in Northflank with built-in GPU provisioning.

This flexibility means you can start with vLLM for fast Hugging Face integration, then later move to TensorRT-LLM for maximum NVIDIA GPU performance, or even run both side by side. Monitoring, autoscaling, and GPU scheduling are built in, so you can focus on your application while Northflank handles the rest.

vLLM and TensorRT-LLM are both leaders in high-performance LLM inference, but they represent different philosophies:

- vLLM → flexible, open-source, Hugging Face–friendly, great for rapid deployment and scaling.

- TensorRT-LLM → NVIDIA-optimized, hardware-specific, best for enterprises chasing maximum efficiency.

The good news is you don’t have to choose forever. With Northflank, you can run both side by side, experiment, and scale the one that best fits your needs.

Sign up today or book a demo to see how quickly you can get started.