What’s the best code execution sandbox for AI agents in 2026?

AI agents are generating billions of lines of code daily. Running that code safely requires a purpose-built code execution sandbox for AI agents: infrastructure that isolates execution, scales elastically, and integrates directly with AI agent workflows.

The best code sandbox for AI agents depends on your requirements:

- Northflank is the best choice for teams that need production-grade microVM isolation, unlimited session duration, bring-your-own-cloud deployment, and a complete platform beyond just sandboxes. Northflank processes over 2 million isolated workloads monthly using Kata Containers and gVisor.

- E2B excels at AI-first SDK design with Firecracker microVMs, but limits sessions to 24 hours and requires you to manage scaling at higher volumes.

- Modal offers strong Python-centric workflows with gVisor isolation and massive autoscaling, but lacks BYOC options and on-prem deployment.

- Daytona delivers the fastest cold starts (sub-90ms) but uses Docker containers by default, which provide weaker isolation than microVMs.

For teams evaluating the best sandbox for AI code execution, this guide compares isolation strength, session limits, pricing, and platform completeness across the top providers.

Cursor alone generates nearly a billion lines of accepted code each day. AI coding assistants, autonomous agents, and LLM-powered applications are producing unprecedented volumes of code, and that code needs a secure sandbox to execute in.

Running AI-generated code directly on your application servers, without a proper code execution sandbox, creates serious risks: it can expose secrets, overwhelm resources, escape container boundaries, or execute malicious operations, whether through bugs, hallucinations, or prompt injection attacks.
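To see why process-level precautions fall short, here is a minimal sketch of guardrails you might bolt on when running generated code directly on an application server. It assumes Linux or another POSIX system, and `run_untrusted_naively` is a hypothetical helper, not any platform's API. It hides the parent's environment variables from the child and caps CPU time, memory, and wall-clock time.

```python
import os
import resource
import subprocess
import sys

CPU_SECONDS = 5
MAX_ADDRESS_SPACE = 1 << 30  # 1 GiB


def _cap(limit: int, value: int) -> None:
    """Set a resource limit to `value`, never above the existing hard limit."""
    _soft, hard = resource.getrlimit(limit)
    if hard != resource.RLIM_INFINITY:
        value = min(value, hard)
    resource.setrlimit(limit, (value, value))


def _limit_child() -> None:
    # Runs in the forked child just before exec (POSIX only).
    _cap(resource.RLIMIT_CPU, CPU_SECONDS)
    _cap(resource.RLIMIT_AS, MAX_ADDRESS_SPACE)


def run_untrusted_naively(code: str, timeout_s: float = 10.0) -> subprocess.CompletedProcess:
    """Run a Python snippet in a child process with process-level guardrails only.

    Hypothetical helper: it hides the parent's environment variables and caps
    CPU time, memory, and wall-clock time, but the child still shares the host
    kernel, filesystem, and network with your application.
    Raises subprocess.TimeoutExpired if the wall-clock timeout is hit.
    """
    return subprocess.run(
        [sys.executable, "-I", "-c", code],  # -I: ignore PYTHON* env vars and user site-packages
        env={},                              # empty environment: no inherited API keys
        capture_output=True,
        text=True,
        timeout=timeout_s,
        preexec_fn=_limit_child,
    )


if __name__ == "__main__":
    os.environ["FAKE_API_KEY"] = "sk-not-a-real-key"
    leaked = run_untrusted_naively("import os; print(os.environ.get('FAKE_API_KEY'))")
    print(leaked.stdout.strip())
```

Run as a script, it prints `None`: the child cannot read the parent's `FAKE_API_KEY`. The child can still read any file your application user can read, open network connections, and make system calls straight into the host kernel, which are the gaps that container, gVisor, and microVM sandboxes are meant to close.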

Purpose-built AI sandboxes solve three problems simultaneously:

Security isolation: Containers or microVMs cut the blast radius of malicious or buggy code. A compromised sandbox can't access your production databases or leak API keys.

Ephemeral scale: Thousands of agent sessions can spin up and tear down in seconds without leaving idle infrastructure running up your bill.

Observability and guardrails: Good sandbox platforms expose granular logs, metrics, and network controls so you can monitor and constrain what AI-generated code does.
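One common way to express network controls is a default-deny egress policy: outbound connections are blocked unless the destination matches an allowlist. The sketch below shows only the matching logic, with a hypothetical allowlist for an agent that needs to install packages. In a real sandbox the platform enforces the policy at the network layer, outside the sandbox, because code running inside could simply skip an in-process check.

```python
from urllib.parse import urlsplit

# Hypothetical default-deny allowlist for an agent that only needs to install
# packages. Real platforms express egress rules in their own policy formats.
ALLOWED_EGRESS: frozenset[str] = frozenset({
    "pypi.org",
    "files.pythonhosted.org",
    "*.githubusercontent.com",
})


def host_allowed(host: str, allowlist: frozenset[str] = ALLOWED_EGRESS) -> bool:
    """Exact-match rules, plus '*.example.com' rules that match any subdomain."""
    host = host.lower().rstrip(".")
    for rule in allowlist:
        if rule.startswith("*."):
            if host.endswith(rule[1:]):  # rule[1:] keeps the leading dot
                return True
        elif host == rule:
            return True
    return False


def egress_allowed(url: str, allowlist: frozenset[str] = ALLOWED_EGRESS) -> bool:
    """Default deny: anything without a parseable hostname is blocked."""
    host = urlsplit(url).hostname
    return host is not None and host_allowed(host, allowlist)
```

With this allowlist, `https://raw.githubusercontent.com/...` is allowed, while the cloud metadata address `http://169.254.169.254/` and look-alike domains such as `evilgithubusercontent.com` are denied.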

When evaluating sandbox providers for AI agent workloads, focus on these criteria:

- Isolation technology: Does the AI sandbox platform use standard containers (shared kernel, weaker isolation), gVisor (user-space kernel interception), or microVMs like Firecracker and Kata Containers (dedicated kernel per workload)? For truly untrusted code, microVM isolation is essential.

- Startup latency: How fast can sandboxes spin up? Sub-second cold starts keep your agents responsive. Some platforms offer snapshot-based resume for even faster warm starts.

- Session duration: Can AI code execution sandboxes run for minutes, hours, or indefinitely? Many platforms impose strict time limits that break long-running agent workflows.

- Language and runtime flexibility: Are you locked to Python and JavaScript, or can you run any containerized workload? Can you bring custom images or must you use SDK-defined environments?

- Infrastructure flexibility: Can you deploy in your own cloud (BYOC), on-premises, or only on the provider's managed infrastructure? For regulated industries or data-sensitive applications, this matters.

- Networking controls: Can you define egress policies, set up tunnels for database connections, or lock down outbound access entirely?

- Platform completeness: Do you need just sandboxes, or will your AI application also require databases, backend APIs, GPU inference, and CI/CD? Starting with a complete platform avoids painful migrations.
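Vendor cold-start figures, including those in the comparison below, are measured under each vendor's own conditions, so it is worth timing sandbox creation from where your agents actually run. This is a minimal, provider-agnostic harness; `create` and `destroy` are placeholders for whatever your provider's SDK uses to start and stop a sandbox, and no real SDK call is assumed.

```python
import math
import statistics
import time
from typing import Callable, TypeVar

Handle = TypeVar("Handle")


def measure_cold_starts(
    create: Callable[[], Handle],
    destroy: Callable[[Handle], None],
    runs: int = 20,
) -> dict[str, float]:
    """Time `create()` `runs` times and return latency stats in milliseconds.

    `create` and `destroy` are placeholders for your provider's SDK calls that
    start and stop a sandbox; only the time spent in `create` is measured.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples: list[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        handle = create()
        samples.append((time.perf_counter() - start) * 1000)
        destroy(handle)  # tear down before the next attempt
    samples.sort()
    p95_index = math.ceil(95 * len(samples) / 100) - 1  # nearest-rank percentile
    return {
        "p50_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }
```

Run it separately against images that are already cached and images that must be pulled, since image pulls can dominate startup time. Warm pools or snapshot-based resume can also make repeated runs faster than a true cold start, so report which case you measured.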

| Platform | Isolation | Cold start | Max session | BYOC | Languages | Best for |
|---|---|---|---|---|---|---|
| Northflank | MicroVM (Kata/CLH) + gVisor | Seconds, depending on whether the container image is already pulled | Unlimited | Yes | Any OCI image | Complete AI infrastructure |
| E2B | MicroVM (Firecracker) | ~150ms | 24 hours | Experimental | Any Linux runtime | AI agent SDKs |
| Modal | gVisor containers | Sub-second | Configurable | No | Python-first | ML/data workloads |
| Daytona | Docker (Kata optional) | ~90ms | Stateful | No | Docker images | Fast agent iterations |
| Together | MicroVM | 500ms (resume) | Configurable | No | Dev containers | Together AI users |
| Vercel Sandbox | MicroVM (Firecracker) | Sub-second | 45 min–5 hr | No | Node.js, Python | Vercel ecosystem |

1. Northflank: Best overall code execution sandbox platform for AI agents (and generally, AI infrastructure)

Northflank has operated secure sandboxing infrastructure since 2019 and processes over 2 million isolated workloads monthly. Unlike sandbox-only tools, Northflank is a complete cloud platform where sandboxed code execution is one capability among many, which makes it the best sandbox for AI agents that need production-grade infrastructure.

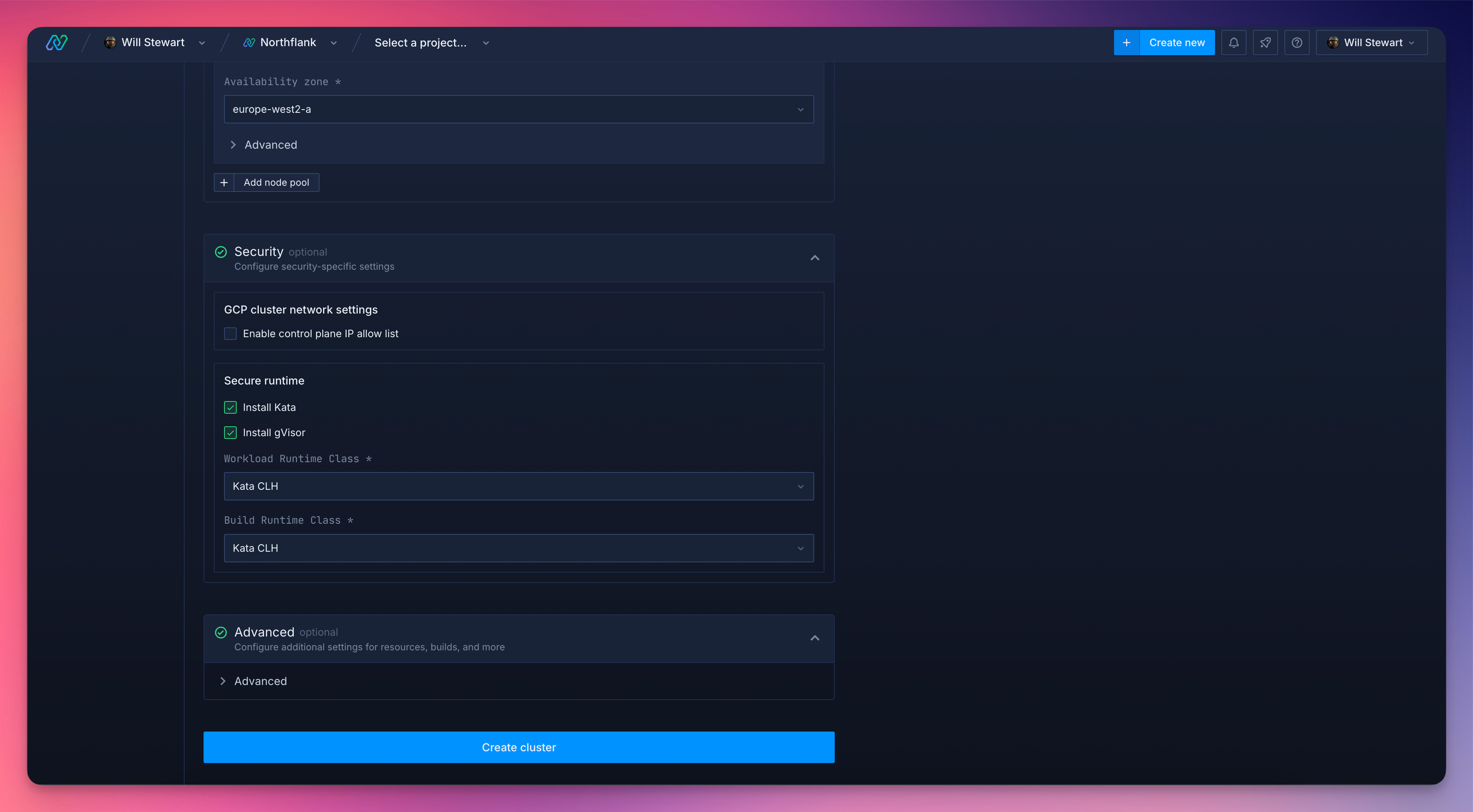

Multiple isolation technologies: Choose between Kata Containers with Cloud Hypervisor for true microVM isolation, or gVisor for user-space kernel protection. No other AI code execution sandbox platform offers this flexibility. Northflank's engineering team actively contributes to Kata Containers, QEMU, containerd, and Cloud Hypervisor in the open-source community.

Any OCI container image: Unlike platforms requiring SDK-defined images or proprietary formats, Northflank accepts any container from Docker Hub, GitHub Container Registry, or private registries, without modification. Your existing images work immediately.

Unlimited session duration: While E2B caps sessions at 24 hours and Vercel at 45 minutes to 5 hours, Northflank sandboxes persist until you terminate them. This is critical for AI agents that maintain state across user interactions over days or weeks.

True BYOC deployment: Deploy sandboxes in your AWS, GCP, Azure, or bare-metal infrastructure. Keep sensitive data in your VPC while Northflank handles orchestration. No other major sandbox platform offers production-ready bring-your-own-cloud.

Complete platform: Northflank runs your entire AI application stack: sandboxed code execution, backend APIs, databases, scheduled jobs, and GPU workloads, with consistent security and orchestration. As your application grows beyond just sandboxes, your infrastructure grows with you.

Production-proven at scale: Companies like Writer, Sentry, and cto.new run multi-tenant customer deployments for untrusted code on Northflank. When cto.new launched to 30,000+ users, their Northflank-powered sandbox infrastructure handled thousands of daily deployments without issues.

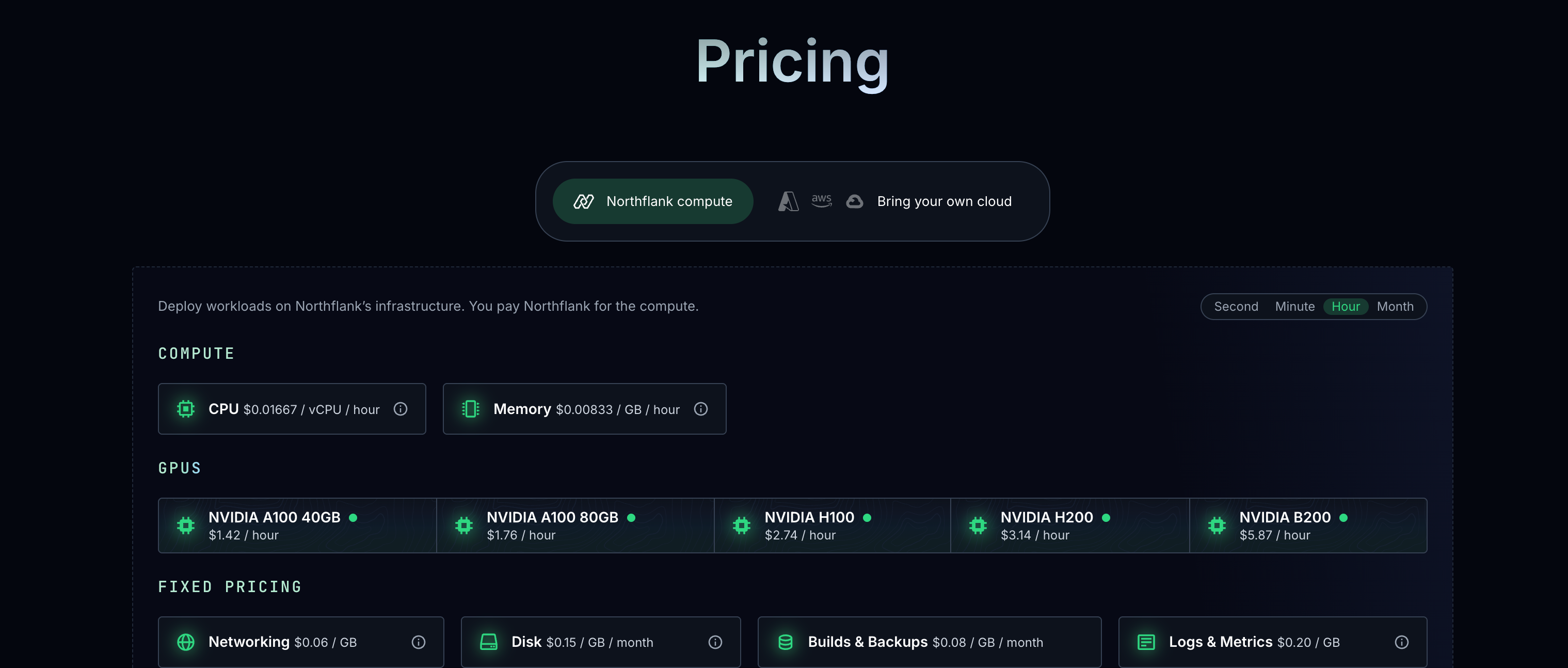

Transparent usage-based pricing with no hidden fees:

- CPU: $0.01667/vCPU-hour

- RAM: $0.00833/GB-hour

- GPU (H100): $2.74/hour all-inclusive

Northflank's GPU pricing includes CPU and RAM, which makes it approximately 62% cheaper than Modal and other GPU providers that bill CPU and RAM separately.
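Because this pricing is linear in vCPU-hours and GB-hours, estimating a sandbox fleet's bill is a short calculation. The sketch below uses the rates listed above; the 100-sandbox workload in the usage line is a hypothetical example, and current rates should be checked before relying on the numbers.

```python
from dataclasses import dataclass

# Northflank rates as listed above (USD); check the current pricing page before relying on them.
VCPU_HOUR = 0.01667
GB_HOUR = 0.00833
H100_HOUR = 2.74  # listed as all-inclusive: CPU and RAM are not billed on top


@dataclass(frozen=True)
class SandboxShape:
    vcpus: float
    memory_gb: float


def cpu_fleet_cost(shape: SandboxShape, hours: float, count: int = 1) -> float:
    """Cost of running `count` identical CPU sandboxes for `hours` each."""
    hourly = shape.vcpus * VCPU_HOUR + shape.memory_gb * GB_HOUR
    return round(hourly * hours * count, 2)


def h100_cost(gpu_hours: float) -> float:
    """Cost of H100 time at the all-inclusive hourly rate."""
    return round(gpu_hours * H100_HOUR, 2)


if __name__ == "__main__":
    # Hypothetical workload: 100 agent sandboxes, 1 vCPU / 2 GB each, 8 hours a day.
    print(cpu_fleet_cost(SandboxShape(vcpus=1, memory_gb=2), hours=8, count=100))
```

For that hypothetical workload the estimate is about $26.66 per day: $0.03333 per sandbox-hour across 800 sandbox-hours.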

Best for: Teams building production AI applications that need enterprise-grade isolation, infrastructure flexibility, and a platform that handles more than just ephemeral code execution.

2. E2B: Best for AI-first SDK design

E2B built its platform specifically for AI agent workflows, with clean Python and JavaScript SDKs that make it easy to spin up sandboxes programmatically.

Key features:

- Firecracker microVM isolation: Each sandbox runs in its own lightweight VM with a dedicated kernel

- Fast startup: Sandboxes boot in approximately 150ms

- Session persistence: Pause sandboxes and resume them later from the same state

- Open source: Core infrastructure is open source with self-hosting options

- AI framework integrations: Works with LangChain and with models from OpenAI, Anthropic, and other LLM providers

Limitations:

- 24-hour session limit: Even on Pro plans, sandboxes can't run longer than 24 hours

- Self-hosting complexity: Scaling past a few hundred sandboxes means running the E2B control plane yourself

- No built-in network policies: Lacks granular egress controls and IP filtering

- Custom images require Docker builds: You must craft and push a Docker image for every custom environment

Pricing:

- Hobby: Free with $100 one-time credit, 1-hour sessions, 20 concurrent sandboxes

- Pro: $150/month with 24-hour sessions, custom CPU/RAM

- Usage: ~$0.05/hour for 1 vCPU sandbox

Best for: AI agent developers who need reliable sandboxes with excellent SDK design and don't require sessions longer than 24 hours or infrastructure beyond code execution.

cto.new uses Northflank’s microVMs to scale secure sandboxes without sacrificing speed or cost.

3. Modal: Best for Python-centric ML and data workloads

Modal provides a serverless platform optimized for machine learning and data workloads, with sandboxing as one capability within a broader compute fabric.

Key features:

- Massive autoscaling: Scale from zero to 20,000+ concurrent containers with sub-second cold starts

- Python-first DX: Define sandboxes in Python code, no YAML or Kubernetes manifests

- Built-in networking: Tunneling for external connections and granular egress policies

- Snapshot primitives: Save and restore sandbox state efficiently

- GPU support: Access to the full range of NVIDIA GPUs for ML workloads

Limitations:

- No BYOC or on-prem: Managed-only deployment with no option to run in your own cloud

- SDK-defined images: Can't bring arbitrary OCI images; must define through Modal's SDK

- Python-centric: While JavaScript and Go SDKs exist, the platform is heavily optimized for Python

- gVisor isolation only: No microVM option for stronger isolation guarantees

Pricing:

- CPU: $0.047/vCPU-hour

- RAM: $0.008/GB-hour

- H100 GPU: $3.95/hour (plus separate CPU and RAM charges)

- $30/month free credits

Best for: Python-focused ML teams running batch jobs, model inference, and data pipelines who want sandboxing integrated with their existing Modal workflows.

4. Daytona: Best for the fastest cold starts

Daytona pivoted in early 2025 from development environments to AI agent infrastructure, focusing on the fastest possible sandbox provisioning.

Key features:

- Sub-90ms cold starts: The fastest sandbox creation in the market, critical when provisioning thousands of environments

- Native Docker compatibility: Standard container workflows work without proprietary formats

- Stateful sandboxes: Filesystem, environment variables, and process memory persist across agent interactions

- Built-in LSP support: Language server protocol integration for code intelligence

- Desktop environments: Linux, Windows, and macOS virtual desktops for computer-use agents

Limitations:

- Docker isolation by default: Standard containers share the host kernel, which gives weaker security than microVMs. Kata Containers are available but not enabled by default.

- Young platform: Feature parity with established players still evolving

- Limited networking controls: No first-class tunneling or granular egress policies yet

- Sandbox-only focus: No broader infrastructure capabilities for databases, APIs, or GPU workloads

Pricing:

- $200 free compute credit to start

- Pay-per-use after credits

- Startup program with up to $50k in credits

Best for: Teams optimizing for startup speed above all else, particularly for rapid agent iteration workflows where milliseconds matter.

The fundamental difference between Northflank and sandbox-only tools is scope and production readiness.

While other platforms solve the narrow problem of isolated code execution, Northflank provides complete infrastructure for AI applications:

- Persistent AI agents that maintain state across user sessions for days or weeks

- Backend APIs and databases running with the same security guarantees as your sandboxes

- GPU workloads for model inference and training alongside code execution

- CI/CD pipelines and preview environments integrated with your development workflow

- Enterprise controls including SSO, RBAC, audit logging, and compliance tools

Northflank's isolation technology has been battle-tested across millions of workloads since 2019. The engineering team actively maintains and contributes to the open-source projects that power this infrastructure: Kata Containers, QEMU, containerd, and Cloud Hypervisor.

No other sandbox platform offers Northflank's deployment options:

- Managed cloud: Zero-setup deployment on Northflank's infrastructure

- BYOC: Run in your AWS, GCP, Azure, or bare-metal with full control

- Multi-region: Deploy globally with consistent APIs and security

- Any runtime: Not locked to specific languages, frameworks, or image formats

The fastest path to running secure AI sandboxes:

- Sign up at northflank.com

- Create a project and choose a region, or connect your own cloud account

- Deploy a service and select any container image from any registry

- Configure isolation; Northflank automatically provisions microVM-backed infrastructure

For teams with specific requirements, book a demo with Northflank's engineering team to discuss custom configurations, enterprise features, or high-volume pricing.

The sandbox market is crowded with tools that solve narrow problems. But AI applications need more than ephemeral code execution: they need databases, APIs, GPU inference, enterprise controls, and infrastructure flexibility.

Northflank provides all of this in one platform, with the strongest isolation options in the market and the flexibility to run anywhere: our cloud, your cloud, or bare metal.

Start building for free or talk to our engineering team about your AI infrastructure requirements.

MicroVMs (Firecracker, Kata Containers) provide the strongest isolation because each workload gets its own dedicated kernel. Standard containers share the host kernel, creating potential escape vectors. gVisor sits in between, intercepting syscalls in user space. For truly untrusted AI-generated code, microVM isolation is recommended.

Many AI agents need to maintain state across extended user interactions lasting hours, days, or even weeks. Session limits of 45 minutes to 5 hours (Vercel) or 24 hours (E2B) force you to implement complex state serialization and restoration. Platforms with unlimited session duration, like Northflank, avoid this architectural complexity.
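To give a sense of that work, here is a minimal sketch of checkpointing agent state before a session limit is hit, assuming a hypothetical `AgentState` with a message history and a few working files. The checkpoint path must point at storage that outlives the sandbox (a mounted volume or something synced to object storage); a local path stands in for it here. Only JSON-serializable data survives, so running processes, open connections, and in-memory interpreter state are lost, which is exactly the complexity described above.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field


@dataclass
class AgentState:
    """Hypothetical agent state; real agents usually carry much more."""
    session_id: str
    step: int = 0
    messages: list[dict] = field(default_factory=list)
    working_files: dict[str, str] = field(default_factory=dict)


def save_checkpoint(state: AgentState, path: str) -> None:
    # Write to a temp file in the same directory, then atomically rename it,
    # so a sandbox killed mid-write never leaves a truncated file at `path`.
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(asdict(state), f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> AgentState:
    # Rebuild the state in a fresh sandbox after the old session expired.
    with open(path, encoding="utf-8") as f:
        return AgentState(**json.load(f))
```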

Some platforms support GPU-accelerated sandboxes for AI inference. Northflank offers NVIDIA H100, A100, and other GPUs with all-inclusive pricing. Modal also supports GPUs but charges separately for GPU, CPU, and RAM. Check whether your sandbox provider supports the specific GPU types your workloads require.

BYOC (Bring Your Own Cloud) means the platform runs its control plane but provisions resources in your cloud account: you get the operational benefits of a managed platform while keeping data in your VPC. Self-hosting means you run everything yourself, including the control plane. Northflank offers true BYOC; E2B's self-hosting is still experimental.

Building sandbox infrastructure with Kubernetes, gVisor, or Firecracker is possible but requires significant engineering investment, typically months of work, plus ongoing operational burden. For most teams, a purpose-built platform provides better security, faster time-to-market, and lower total cost of ownership than rolling your own.

Pricing varies significantly based on workload patterns. For CPU-intensive workloads, Northflank's pricing ($0.01667/vCPU-hour) is approximately 65% cheaper than Modal ($0.047/vCPU-hour). For GPU workloads, Northflank's all-inclusive pricing ($2.74/hour for H100) is approximately 62% cheaper than Modal's separate billing for GPU, CPU, and RAM.
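These percentages follow from simple ratios of the listed rates. The sketch below reproduces them using only the figures quoted in this article; the 8 vCPU / 64 GB shape is a hypothetical example, not a Modal default. It also makes the GPU assumption explicit: the bare H100 rates differ by about 31%, so the 62% figure depends on how much CPU and RAM Modal bills alongside the GPU.

```python
# Rates as quoted in this article (USD per hour); check current pricing pages before relying on them.
NF_VCPU_HOUR, NF_H100_HOUR = 0.01667, 2.74  # Northflank H100 rate includes CPU and RAM
MODAL_VCPU_HOUR, MODAL_GB_HOUR, MODAL_H100_HOUR = 0.047, 0.008, 3.95  # Modal bills CPU and RAM separately


def savings(ours: float, theirs: float) -> float:
    """Fraction by which `ours` is cheaper than `theirs` (0.65 means 65% cheaper)."""
    return 1 - ours / theirs


def modal_h100_hour(vcpus: float, memory_gb: float) -> float:
    """Modal's hourly H100 cost once separately billed CPU and RAM are added."""
    return MODAL_H100_HOUR + vcpus * MODAL_VCPU_HOUR + memory_gb * MODAL_GB_HOUR


def extra_spend_for_gap(target: float) -> float:
    """Hourly CPU+RAM spend on Modal at which the H100 gap reaches `target`."""
    return NF_H100_HOUR / (1 - target) - MODAL_H100_HOUR


if __name__ == "__main__":
    print(f"vCPU-hour: {savings(NF_VCPU_HOUR, MODAL_VCPU_HOUR):.0%} cheaper")
    print(f"H100, GPU rate only: {savings(NF_H100_HOUR, MODAL_H100_HOUR):.0%} cheaper")
    # Hypothetical shape: 8 vCPUs and 64 GB RAM attached to the GPU.
    print(f"H100 + 8 vCPU + 64 GB: {savings(NF_H100_HOUR, modal_h100_hour(8, 64)):.0%} cheaper")
    print(f"CPU+RAM spend needed for a 62% gap: ${extra_spend_for_gap(0.62):.2f}/hour")
```

Run as a script, it reports about 65% for vCPU-hours, 31% for the bare H100 rate, 43% for the hypothetical 8 vCPU / 64 GB shape, and shows that the 62% gap is reached once Modal's CPU and RAM charges add roughly $3.26 per hour to its H100 rate.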