Top GPU hosting platforms for AI: inference, training & scaling

Most AI platforms help you run models. Few help you build production infrastructure around them. If you’re training LLMs, serving inference, or building full-stack ML products, you need more than access to GPUs.

You need a platform that handles everything from scheduling and orchestration to CI/CD, staging, and production. AWS and GCP offer raw compute, but they come with complexity and overhead. Lightweight tools are easy to start with, but they rarely scale when you need reliability or control.

Northflank offers an alternative. It gives you modern GPU hosting with the developer workflows and automation needed to move fast and stay in control.

In this guide, we’ll break down what GPU hosting actually means, why it matters for AI teams, and how platforms like Northflank compare to other options in the space.

If you're short on time, here’s the complete list of the best GPU hosting platforms for AI and ML teams. Some are built for scale. Others excel at speed, simplicity, or affordability. A few are rethinking the GPU experience from the ground up.

Planning GPU infrastructure? For teams scaling production AI workloads with specific capacity needs, request GPU capacity here.

| Platform | GPU Types Available | Key Strengths | Best For |

|---|---|---|---|

| Northflank | A100, H100, H200, B200, L40S, B200, L40S, MI300X, Gaudi and many more. | Full-stack GPU hosting with CI/CD, secure runtimes, app orchestration, GitOps, BYOC | Production AI apps, inference APIs, model training, fast iteration |

| NVIDIA DGX Cloud | H100, A100 | High-performance NVIDIA stack, enterprise tools | Foundation model training, research labs |

| AWS | H100, A100, L40S, T4 | Broad GPU catalog, deep customization | Enterprise ML pipelines, infra-heavy workloads |

| GCP | A100, H100, TPU v4/v5e | Tight AI ecosystem integration (Vertex AI, GKE) | TensorFlow-heavy workloads, GenAI with TPUs |

| Azure | MI300X, H100, A100, L40S | Enterprise-ready, Copilot ecosystem, hybrid cloud | Secure enterprise AI, compliance workloads |

| RunPod | A100, H100, 3090 | Secure containers, API-first, encrypted volumes | Privacy-sensitive jobs, fast inference |

| Vast AI | A100, 4090, 3090 (varied) | Peer-to-peer, low-cost compute | Budget training, short-term experiments |

While most hyperscalers like GCP and AWS offer strong infrastructure, their pricing is often geared toward enterprises with high minimum spend commitments. For smaller teams or startups, platforms like Northflank offer much more competitive, usage-based pricing without long-term contracts, while still providing access to top-tier GPUs and enterprise-grade features.

GPU hosting is the provisioning and management of GPU-enabled infrastructure to support compute-intensive tasks like model training, inference, or fine-tuning. But for AI/ML teams, it’s not just about spinning up machines with GPUs; it’s about how that infrastructure fits into the development and production lifecycle.

Effective GPU hosting includes:

- GPU-aware scheduling across jobs or containers

- Environment management, including CUDA and ML framework compatibility

- Isolation and security for workloads and data

- Service and job orchestration, depending on the use case

- Integration with development workflows (version control, CI/CD, observability)

It enables teams to abstract away infrastructure friction and focus on iteration, deployment, and scaling of models, whether training a new model or exposing an inference endpoint.

Earlier, we explored what GPU hosting involves. The best GPU hosting platforms go beyond raw compute, they simplify operational complexity while providing the tools teams need to build, deploy, and scale AI workloads reliably. Key capabilities include:

Support for configuring GPU limits, memory constraints, job timeouts, and queueing. Workloads should be scheduled based on resource availability and priority.

Seamless integration with Git for triggering builds and deployments when code changes. Automated pipelines help track experiments, update models, and deploy inference endpoints without manual effort.

Every workload must run in a sandboxed environment with role-based access control, secrets management, and network policies to reduce the attack surface.

The platform should handle both types of ML workloads, batch jobs (for training) and services (for inference), with appropriate scaling and monitoring support.

Real-time logs, GPU utilization metrics, and health monitoring help teams debug failures and optimize performance.

In addition to AI workloads, the platform should support running web services, APIs, frontends, and databases, enabling teams to build and deploy complete AI applications without stitching together multiple platforms.

A good platform is accessible through CLI, API, and UI, and offers a fast path from repo to deployed service. Clean abstractions help reduce operational overhead.

These features ensure the platform can support full ML lifecycles from research to production.

This section goes deep on each platform in the list. You’ll see what types of GPUs they offer, what they’re optimized for, and how they actually perform in real workloads. Some are ideal for researchers. Others are built for production.

Northflank abstracts the complexity of running GPU workloads by giving teams a full-stack platform; GPUs, runtimes, deployments, CI/CD, and observability all in one. You don’t have to manage infra, build orchestration logic, or wire up third-party tools.

Everything from model training to inference APIs can be deployed through a Git-based or templated workflow. It supports bring-your-own-cloud (AWS, Azure, GCP, and more), but it works fully managed out of the box.

What you can run on Northflank:

- Inference APIs with autoscaling and low-latency startup

- Training or fine-tuning jobs (batch, scheduled, or triggered by CI)

- Multi-service AI apps (LLM + frontend + backend + database)

- Hybrid cloud workloads with GPU access in your own VPC

What GPUs does Northflank support?

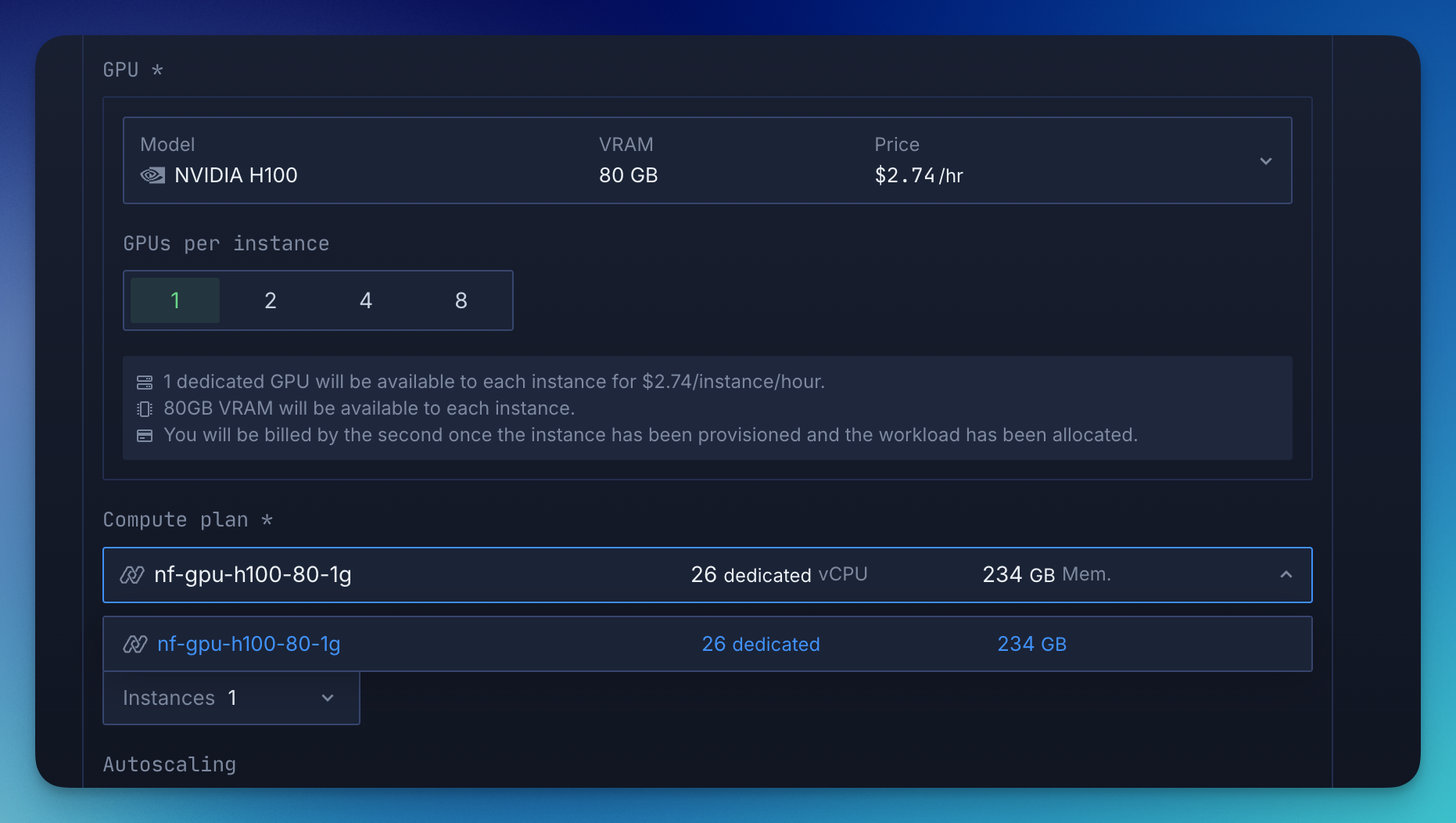

Northflank offers access to 18+ GPU types, including NVIDIA A100, H100, B200, L40S, L4, AMD MI300X, and Habana Gaudi.

Where it fits best:

If you're building production-grade AI products or internal AI services, Northflank handles both the GPU execution and the surrounding app logic. Especially strong fit for teams who want Git-based workflows, fast iteration, and zero DevOps overhead.

See how Cedana uses Northflank to deploy GPU-heavy workloads with secure microVMs and Kubernetes

DGX Cloud is NVIDIA’s managed stack, giving you direct access to H100/A100-powered infrastructure, optimized libraries, and full enterprise tooling. It’s ideal for labs and teams training large foundation models.

What you can run on DGX Cloud:

- Foundation model training with optimized H100 clusters

- Multimodal workflows using NVIDIA AI Enterprise software

- GPU-based research environments with full-stack support

What GPUs does NVIDIA DGX Cloud support?

DGX Cloud provides access to NVIDIA H100 and A100 GPUs, delivered in clustered configurations optimized for large-scale training and NVIDIA’s AI software stack.

Where it fits best:

For teams building new model architectures or training at scale with NVIDIA’s native tools, DGX Cloud offers raw performance with tuned software.

AWS offers one of the broadest GPU lineups (H100, A100, L40S, T4) and mature infrastructure for managing ML workloads across global regions. It's highly configurable, but usually demands hands-on DevOps.

What you can run on AWS:

- Training pipelines via SageMaker or custom EC2 clusters

- Inference endpoints using ECS, Lambda, or Bedrock

- Multi-GPU workflows with autoscaling and orchestration logic

What GPUs does AWS support?

AWS supports a wide range of GPUs, including NVIDIA H100, A100, L40S, and T4. These are available through services like EC2, SageMaker, and Bedrock, with support for multi-GPU setups.

Where it fits best:

If your infra already runs on AWS or you need fine-grained control over scaling and networking, it remains a powerful, albeit heavy, choice.

GCP supports H100s and TPUs (v4, v5e) and excels when used with the Google ML ecosystem. Vertex AI, BigQuery ML, and Colab make it easier to prototype and deploy in one flow.

What you can run on GCP:

- LLM training on H100 or TPU v5e

- MLOps pipelines via Vertex AI

- TensorFlow-optimized workloads and model serving

What GPUs does GCP support?

GCP offers NVIDIA A100 and H100, along with Google’s custom TPU v4 and v5e accelerators. These are integrated with Vertex AI and GKE for optimized ML workflows.

Where it fits best:

If you're building with Google-native tools or need TPUs, GCP offers a streamlined ML experience with tight AI integrations.

Azure supports AMD MI300X, H100s, and L40S, and is tightly integrated with Microsoft’s productivity suite. It's great for enterprises deploying AI across regulated or hybrid environments.

What you can run on Azure:

- AI copilots embedded in enterprise tools

- MI300X-based training jobs

- Secure, compliant AI workloads in hybrid setups

What GPUs does Azure support?

Azure supports NVIDIA A100, L40S, and AMD MI300X, with enterprise-grade access across multiple regions. These GPUs are tightly integrated with Microsoft’s AI Copilot ecosystem.

Where it fits best:

If you're already deep in Microsoft’s ecosystem or need compliance and data residency support, Azure is a strong enterprise option.

RunPod gives you isolated GPU environments with job scheduling, encrypted volumes, and custom inference APIs. It’s particularly strong for privacy-sensitive workloads.

What you can run on RunPod:

- Inference jobs with custom runtime isolation

- AI deployments with secure storage

- GPU tasks needing fast startup and clean teardown

What GPUs does RunPod support?

RunPod offers NVIDIA H100, A100, and 3090 GPUs, with an emphasis on secure, containerized environments and job scheduling.

Where it fits best:

If you're running edge deployments or data-sensitive workloads that need more control, RunPod is a lightweight and secure option.

Vast AI aggregates underused GPUs into a peer-to-peer marketplace. Pricing is unmatched, but expect less control over performance and reliability.

What you can run on Vast AI:

- Cost-sensitive training or fine-tuning

- Short-term experiments or benchmarking

- Hobby projects with minimal infra requirements

What GPUs does Vast AI support?

Vast AI aggregates NVIDIA A100, 4090, 3090, and a mix of consumer and datacenter GPUs from providers in its peer-to-peer marketplace. Availability and performance may vary by host.

Where it fits best:

If you’re experimenting or need compute on a shoestring, Vast AI provides ultra-low-cost access to a wide variety of GPUs.

If you've already looked at the list and the core features that matter, this section helps make the call. Different workloads need different strengths, but a few platforms consistently cover more ground than others.

| Use Case | What to Prioritize | Platforms to Consider |

|---|---|---|

| Full-stack AI delivery | Git-based workflows, autoscaling, managed deployments, bring your own cloud, and GPU runtime integration. | Northflank |

| Large-scale model training | H100 or MI300X, multi-GPU support, RDMA, high-bandwidth networking | Northflank, NVIDIA DGX Cloud, AWS |

| Real-time inference APIs | Fast provisioning, autoscaling, low-latency runtimes | Northflank, RunPod |

| Fine-tuning or experiments | Low cost, flexible billing, quick start | Northflank, Vast AI. |

| Production deployment (LLMs) | CI/CD integration, containerized workloads, runtime stability | Northflank, GCP |

| Edge or hybrid AI workloads | Secure volumes, GPU isolation, regional flexibility | Northflank, RunPod, Azure, AWS |

GPU hosting is now a critical layer in the modern AI stack. The right platform depends on your workload, how much control you need, and how quickly you want to go from prototype to production. We’ve covered what GPU hosting actually involves, what to look for, and how today’s top platforms compare. If you want GPU infrastructure that works out of the box and scales with your team, Northflank is worth a look. It’s built for developers, supports real workflows, and helps you ship faster with less overhead.

Try Northflank or book a demo to see how it fits your ML stack.