Top 5 Lightning AI alternatives for ML teams in 2026

Lightning AI has become a popular platform for teams building and deploying machine learning models, offering specialized studios and GPU infrastructure for ML development.

This guide covers five alternatives to Lightning AI, comparing their technical approaches, deployment capabilities, and use cases to help you find the right solution for your ML workloads.

-

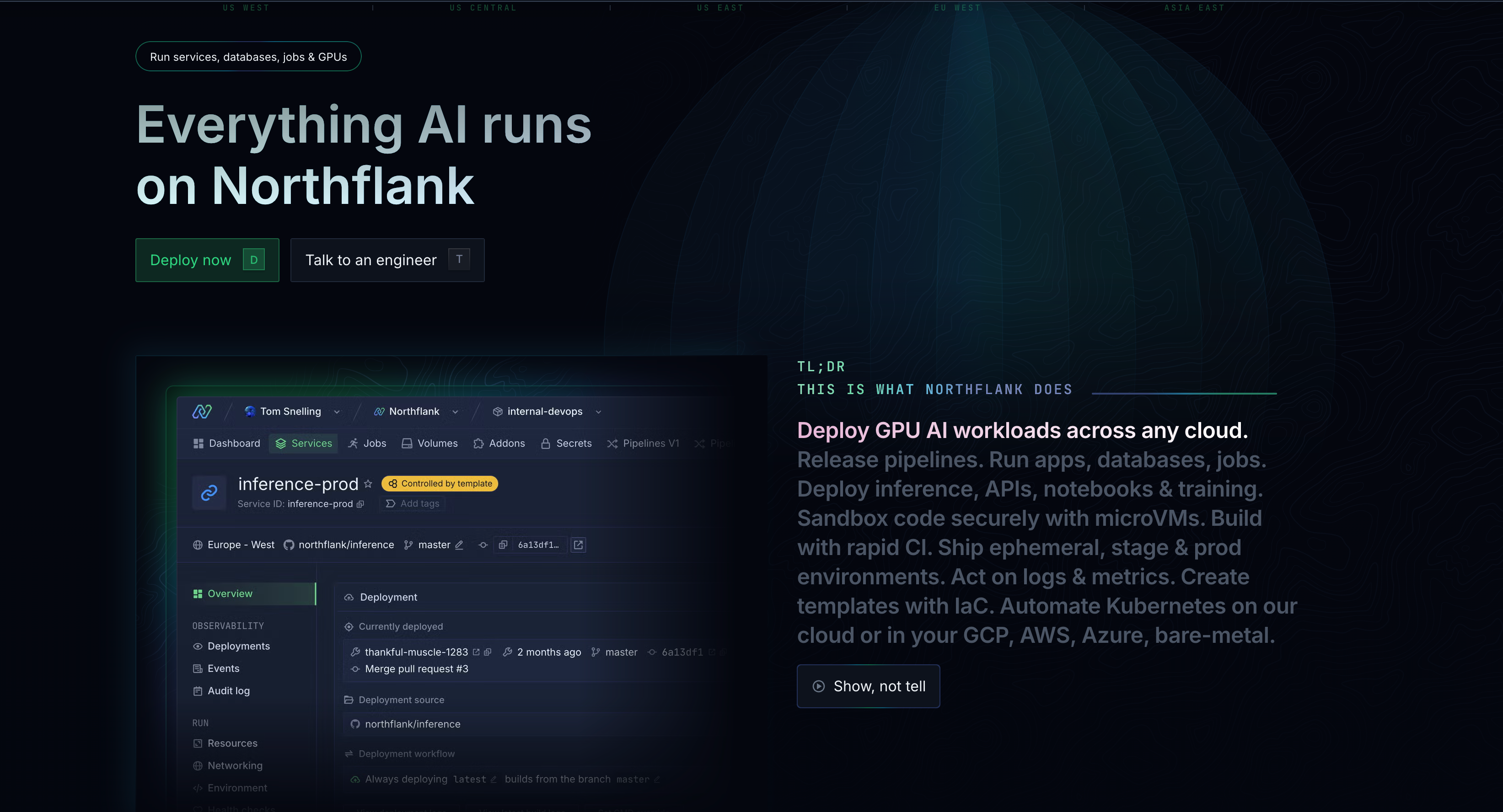

Northflank (all-in-one deployment platform) – Complete unified platform that deploys and runs ANY containerized workload, including ML models, APIs, and GPU-accelerated applications.

Unlike Lightning AI's specialized ML studio approach, Northflank provides production-grade infrastructure for deploying trained models, serving inference endpoints, and running AI applications alongside your other services. Deploy ML workloads, databases, web apps, and GPU jobs through a unified interface in minutes.

- Modal – Serverless platform for ML and AI applications with GPU support. Python-first developer experience for training models, running inference, and deploying ML workloads.

- Replicate – Platform for deploying and running machine learning models with API access.

- Runpod – GPU cloud platform for training models and running inference with serverless and dedicated compute options.

- Amazon SageMaker – Comprehensive enterprise ML platform covering the full lifecycle from notebooks to production deployment.

Lightning AI is a specialized platform for AI development that offers Lightning AI Studios, cloud-based IDEs designed specifically for machine learning workflows, including model training, fine-tuning, and experimentation.

Key features include:

- AI development studios – Cloud IDEs with pre-configured ML environments

- Multi-cloud GPU marketplace – Access to GPUs from AWS, GCP, Lambda, Nebius, and other providers

- Zero-setup environment – Pre-built templates for common ML tasks and frameworks

- Collaborative coding – Real-time collaboration features for ML teams

- Automated scaling – Infrastructure that scales with training workloads

Lightning AI focuses on the development and training phases of machine learning, providing data scientists with powerful tools for experimentation and model development.

Understanding the distinction between ML development platforms and deployment platforms is essential when evaluating Lightning AI alternatives.

ML development platforms like Lightning AI and Modal provide environments for building and training models. They offer notebooks, IDEs, and GPU access for experimentation. These platforms help data scientists write code, run training jobs, and iterate on models. While they may offer deployment features, their primary focus is the development and training workflow.

Deployment platforms like Northflank provide infrastructure for running trained models in production. They handle containerized ML applications, serve inference endpoints, manage scaling under load, and integrate with your existing infrastructure. These platforms focus on reliability, performance, and operational requirements for production AI workloads.

All-in-one deployment platforms like Northflank go further by running your entire application stack: not just ML models, but also APIs, databases, web applications, and background jobs.

This means you can deploy your ML inference endpoints alongside the web applications that use them, the databases that store results, and the APIs that orchestrate everything, all in one unified platform.

Some platforms attempt both development and deployment, while others specialize in one area. When choosing a Lightning AI alternative, determine if you need development tools, production deployment infrastructure, or both.

Understanding your specific requirements helps narrow down the right solution.

- Primary use case – Are you training models, deploying them to production, or both? Development platforms optimize for experimentation while deployment platforms prioritize reliability and scale.

- GPU requirements – What GPU types and quantities do you need? Training large models requires different compute than serving inference requests.

- Production readiness – Does the solution support production deployments with high availability, monitoring, and security? Development notebooks and production infrastructure have different requirements.

- Cost structure – How are you charged for compute? Options include pay-per-use, subscriptions, reserved instances, and spot pricing. Training costs differ significantly from inference serving.

- Integration requirements – Does the solution work with your existing MLOps tools and CI/CD pipelines? Deployment platforms need to integrate with your infrastructure, while development platforms focus on data science tools.

- Team expertise – What skills does your team have? Some solutions require Kubernetes knowledge, while others abstract infrastructure complexity.

- Deployment velocity – How quickly can you move from trained model to production? Some platforms handle this end-to-end while others require manual steps.
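One way to make these criteria concrete is a weighted scoring matrix. The sketch below is illustrative only: the weights, the 0 to 5 scale, and the two placeholder candidates (`option_a`, `option_b`) are assumptions, not measurements of any real platform. Replace them with your team's own priorities and assessments.

```python
# Hypothetical weights for the seven criteria above (they sum to 1.0).
# Adjust them to reflect what matters most to your team.
WEIGHTS = {
    "primary_use_case": 0.25,
    "gpu_requirements": 0.15,
    "production_readiness": 0.20,
    "cost_structure": 0.15,
    "integration": 0.10,
    "team_expertise": 0.05,
    "deployment_velocity": 0.10,
}


def weighted_score(scores: dict[str, float],
                   weights: dict[str, float] = WEIGHTS) -> float:
    """Return the weighted average of per-criterion scores (0 to 5 scale)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


def rank(candidates: dict[str, dict[str, float]]) -> list[str]:
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates,
                  key=lambda name: weighted_score(candidates[name]),
                  reverse=True)


if __name__ == "__main__":
    # Placeholder scores for two unnamed options; fill in your own evaluation.
    candidates = {
        "option_a": {name: 4.0 for name in WEIGHTS} | {"cost_structure": 2.0},
        "option_b": {name: 3.0 for name in WEIGHTS} | {"production_readiness": 5.0},
    }
    for name in rank(candidates):
        print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

A matrix like this will not make the decision for you, but it forces the team to agree on which criteria carry the most weight before comparing vendors.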

The following solutions represent different approaches to ML infrastructure, from complete deployment platforms to specialized development environments.

See a detailed comparison of each alternative below, including their key capabilities, use cases, and how they differ from Lightning AI.

Northflank takes a fundamentally different approach from Lightning AI. Lightning AI helps you train and develop machine learning models in specialized studios. Northflank focuses on deploying and running those models in production alongside your entire application infrastructure.

How Northflank differs from Lightning AI

Lightning AI is a platform for ML development and training. It provides cloud IDEs, notebooks, and GPU access for data scientists building models. You train models in Lightning AI Studios, experiment with different approaches, and iterate on your ML code. While Lightning AI offers deployment capabilities, its primary strength is the development and training workflow.

Northflank is a complete deployment platform that runs your ML models in production. After training your model anywhere (Lightning AI, locally, or other platforms), deploy it to Northflank as a containerized application.

Northflank handles deployment automation, GPU provisioning, scaling, monitoring, health checks, and all operational requirements. It runs ML inference APIs, model serving endpoints, and AI applications alongside your databases, web services, and other infrastructure in one unified platform.
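To illustrate what "a containerized application" can mean for a trained model, here is a minimal sketch of an inference service using only the Python standard library. It is not Northflank-specific: the `/healthz` and `/predict` paths, port 8080, and the placeholder `predict` function are assumptions chosen for illustration. In practice you would load your own model and match the paths and port to whatever your platform's health checks are configured to probe.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def predict(features: list[float]) -> float:
    """Placeholder model (mean of inputs); swap in your trained model here."""
    return sum(features) / len(features) if features else 0.0


class InferenceHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        # Health check route (hypothetical path) that a platform can probe.
        if self.path == "/healthz":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            data = json.loads(self.rfile.read(length))
            features = [float(x) for x in data["features"]]
        except (ValueError, KeyError, TypeError):
            self._send_json(400, {"error": "expected JSON with a 'features' list"})
            return
        self._send_json(200, {"prediction": predict(features)})

    def log_message(self, format, *args) -> None:
        # Silence per-request logging; real services usually log to stdout.
        pass


def make_server(host: str = "0.0.0.0", port: int = 8080) -> ThreadingHTTPServer:
    """Build the HTTP server; binding to 0.0.0.0 makes it reachable in a container."""
    return ThreadingHTTPServer((host, port), InferenceHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Packaged in a container image, a service shaped like this gives a deployment platform two things to work with: an endpoint to route inference traffic to, and a health route to decide when an instance is ready or needs restarting.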

Key capabilities of Northflank

- Multi-cloud GPU deployment – Deploy GPU workloads to Northflank's managed cloud or connect your own GKE, EKS, AKS, Civo, OKE, or bare-metal Kubernetes clusters. Bring Your Own Cloud (BYOC) provides a fully managed platform experience inside your VPC, maintaining complete control over data residency and security.

- Complete production infrastructure – All essential capabilities built-in: CI/CD automation, release pipelines with promotion workflows, preview environments, secrets management, monitoring and logging, autoscaling, health checks, rollbacks, and RBAC. Access everything through real-time UI, API, CLI, or JavaScript client.

- Unified infrastructure management – Run ML models, APIs, databases, web applications, and background jobs in one platform. Deploy containerized workloads from any registry or build from Git repositories automatically.

Why teams choose Northflank for ML deployment

Teams training models in Lightning AI or other platforms often need robust production infrastructure for serving those models. Northflank addresses deployment, scaling, and operational management in one platform.

Companies like Weights scaled to serve millions of users using Northflank, running over 10,000 AI training jobs and half a million inference runs daily without managing autoscaling or spot instance orchestration.

AI companies use Northflank to deploy models trained anywhere, serving inference endpoints with automatic scaling, GPU provisioning, and zero infrastructure overhead.

Try Northflank's free developer sandbox, or book a demo to speak with an engineer. See pricing details.

Best for: Teams needing production deployment for trained ML models, organizations running AI applications with GPU requirements, companies seeking unified infrastructure for ML workloads and traditional applications, and teams wanting Kubernetes power without operational complexity.

Modal provides a serverless platform for ML and AI applications with a Python-first developer experience for training and deploying ML workloads.

Key capabilities

- Serverless GPU compute with automatic scaling for training and inference

- Python-native API for defining compute, storage, and dependencies

- Container-based execution with custom environments

- Scheduled jobs and cron workflows for ML pipelines

- Built-in secrets management and volume storage

Best for: Teams using Python-based ML workflows and organizations needing serverless compute for both training and inference workloads.

See 6 best Modal alternatives for ML, LLMs, and AI app deployment

Replicate provides a platform for deploying and running machine learning models through API calls.

Key capabilities

- Deploy any machine learning model with a simple API interface

- Automatic scaling from zero to handle traffic spikes

- Support for custom models and popular open-source models

- Docker-based deployment with custom dependencies

Best for: Teams needing model deployment via API and organizations running inference workloads with variable traffic.

See 6 best Replicate alternatives for ML, LLMs, and AI app deployment

Runpod offers GPU cloud infrastructure optimized for AI workloads with both serverless and dedicated compute options.

Key capabilities

- Serverless GPU compute with automatic scaling

- Dedicated GPU pods for consistent performance

- Pre-built templates for common ML frameworks

- Community marketplace for GPU capacity

- Container-based deployments with Docker support

Best for: Teams running inference workloads at scale and companies deploying containerized ML applications with varying compute demands.

See RunPod alternatives for AI/ML deployment beyond just a container

Amazon SageMaker provides a comprehensive enterprise ML platform covering the full machine learning lifecycle from development to production deployment.

Key capabilities

- Managed Jupyter notebooks with scalable compute

- Automated model training with hyperparameter tuning

- Model deployment with managed endpoints and monitoring

- MLOps capabilities including pipelines, model registry, and experiment tracking

- Integration with AWS services for data, security, and governance

Best for: Organizations using AWS infrastructure, teams with governance and compliance requirements, and companies with existing AWS data services.

See AWS SageMaker alternatives: Top 6 platforms for MLOps in 2026

Choosing the right Lightning AI alternative depends on understanding what stage of the ML lifecycle you're optimizing.

Development-focused solutions like Modal and Lightning AI itself provide environments for training models and experimenting with approaches.

Deployment-focused platforms provide production infrastructure for running trained models and serving inference endpoints.

Northflank stands out as an all-in-one deployment platform that runs your entire application stack: ML models alongside APIs, databases, and web applications in a unified infrastructure.

Start with a solution that matches your primary use case. Try Northflank's free sandbox or schedule a demo to see how a unified deployment platform handles ML production workloads alongside your entire application infrastructure.