vLLM vs Ollama: Key differences, performance, and how to run them

Large language models are no longer just research toys. They power customer support, code assistants, knowledge retrieval, and even production systems that handle millions of requests a day.

But if you have ever tried to run one yourself, you know that serving these models is not trivial. You need to balance latency, throughput, memory, cost, and developer experience. That is why two open-source projects, vLLM and Ollama, have quickly become popular choices for various types of teams.

At first glance, they may look similar. Both let you run and interact with large language models locally or in the cloud. But under the hood, they approach the problem from different angles. Understanding these differences can save you a lot of time and money when choosing the right tool for your stack.

In this article, we will compare vLLM and Ollama side by side, look at their strengths and trade-offs, and explore where each shines. By the end, you will not only know which one suits your needs but also how to deploy either on Northflank to get them running in production quickly.

If you are short on time, here is a high-level snapshot of how they compare.

| Feature | vLLM | Ollama |
|---|---|---|
| Focus | High-performance LLM inference | Developer-friendly local model runner |
| Architecture | PagedAttention + optimized GPU scheduling | Lightweight runtime + simple model packaging |
| Performance | Industry-leading throughput and low latency | Optimized for quick setup and ease of use |
| Supported models | Wide range of Hugging Face models | Curated set of open LLMs (LLaMA, Mistral, Gemma, etc.) |
| Ecosystem fit | Best for production APIs and scaling | Best for local dev and fast prototyping |
| Hardware | GPU-first (A100, H100, etc.) | Runs on consumer hardware, including Apple Silicon |
| Ease of use | Requires more setup but flexible | Very easy, almost plug-and-play |

💭 What is Northflank?

Northflank is a full-stack AI cloud platform that helps teams build, train, and deploy models without infrastructure complexity. GPU workloads, APIs, frontends, backends, and databases run together in a single platform so your stack stays fast, flexible, and production-ready.

Sign up to get started or book a demo to see how it fits your stack.

vLLM is a high-performance inference engine for large language models. It was designed from the ground up to solve one of the hardest problems in serving LLMs: throughput.

Unlike general-purpose frameworks, vLLM uses PagedAttention, a technique that manages the attention key-value (KV) cache much like an operating system manages virtual memory. The cache is split into fixed-size blocks that are allocated on demand as a sequence grows, so GPU memory is not reserved up front for tokens a request may never generate, and blocks freed by finished requests are immediately reusable by others.

That may sound technical, but the result is simple: because less memory is wasted, vLLM can batch more requests together and serve more traffic on the same hardware without degrading latency. In the vLLM team's published benchmarks, it delivered substantially higher throughput than traditional backends such as Hugging Face Transformers.
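To make the block idea concrete, here is a toy Python sketch of on-demand KV-cache paging. The class and function names and the 16-token block size are hypothetical illustrations, not vLLM's API; the real implementation also handles block sharing, preemption, and the GPU attention kernels themselves.

```python
import math

# Assumed block size for illustration only.
BLOCK_SIZE = 16  # tokens per KV-cache block


class PagedKVCache:
    """Toy block allocator: each sequence gets fixed-size KV blocks on demand."""

    def __init__(self, num_blocks: int, block_size: int = BLOCK_SIZE) -> None:
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))     # shared physical pool
        self.block_tables: dict[str, list[int]] = {}  # seq id -> physical block ids
        self.lengths: dict[str, int] = {}             # seq id -> tokens stored

    def append_token(self, seq_id: str) -> None:
        """Record one new token, allocating a block only when the last one is full."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("KV-cache pool exhausted")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks so other requests can reuse them."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


def reserved_tokens_paged(num_tokens: int, block_size: int = BLOCK_SIZE) -> int:
    """Token slots held under on-demand paging: rounded up to whole blocks."""
    return math.ceil(num_tokens / block_size) * block_size


cache = PagedKVCache(num_blocks=64)
for _ in range(40):  # a request that has generated 40 tokens so far
    cache.append_token("req-1")
print(len(cache.block_tables["req-1"]), reserved_tokens_paged(40))  # 3 48
```

For a request that is allowed up to 2,048 tokens but stops after 40, a naive allocator that reserves the maximum up front holds 2,048 slots, while the paged version holds 3 blocks (48 slots) and hands them back to the pool when the request finishes.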

Strengths:

- Exceptional performance with large batch sizes and long context windows

- Strong support for cutting-edge compression and quantization methods

- Scales well across multi-GPU clusters

- Integrates with Hugging Face models directly

Limitations:

- Requires GPUs for best results; CPU support is limited

- More complex to deploy compared to lighter runtimes

- Not the best fit for quick local experiments

In other words, vLLM is built for teams who care about efficiency at scale, whether you are serving thousands of concurrent API calls or powering production workloads.
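To see why long context windows put so much pressure on GPU memory, it helps to estimate the KV cache a single sequence needs. The sketch below uses the standard per-token formula (one key and one value vector per KV head per layer). The model configuration is an assumption, roughly a 7B Llama-2-style model without grouped-query attention; models that use grouped-query attention have fewer KV heads and a proportionally smaller cache.

```python
def kv_cache_bytes_per_token(
    num_layers: int, num_kv_heads: int, head_dim: int, dtype_bytes: int = 2
) -> int:
    """Bytes of KV cache one token occupies across the whole model.

    The leading 2 counts one key and one value vector per KV head per layer;
    dtype_bytes=2 assumes FP16/BF16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * dtype_bytes


def kv_cache_gib(num_tokens: int, bytes_per_token: int) -> float:
    """Total KV cache for num_tokens tokens, in GiB."""
    return num_tokens * bytes_per_token / 2**30


# Assumed config: 32 layers, 32 KV heads, head dimension 128, FP16.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=32, head_dim=128)
print(per_token / 2**20, "MiB per token")                              # 0.5 MiB per token
print(kv_cache_gib(4096, per_token), "GiB for a 4,096-token sequence")  # 2.0 GiB
```

At roughly 2 GiB per 4,096-token sequence under these assumptions, a few dozen concurrent long requests can fill a large GPU, which is why how the cache is allocated matters so much for throughput.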

Ollama takes a very different approach. Instead of starting from performance bottlenecks, it focuses on developer experience. The project makes it incredibly easy to download, run, and interact with large language models on your local machine. With a single command, you can pull a model and start chatting with it, with no complex configuration or GPU cluster setup required.

Ollama's packaging system is one of its strongest features. Models are packaged much like container images, with the weights, prompt template, and default parameters (defined in a Modelfile) bundled together, which makes sharing and reproducing environments straightforward. It also has first-class support for Apple Silicon, so you can run models efficiently on an M-series Mac without needing a data center GPU.
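Besides the interactive CLI (for example `ollama run mistral`), Ollama exposes a local HTTP API, by default at `http://localhost:11434`. A minimal sketch of a non-streaming call to its `/api/generate` endpoint using only the Python standard library follows; the helper names are hypothetical, and the model name is just an example that must already be pulled.

```python
import json
import urllib.request

# Ollama's default local address; change it if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(
    model: str, prompt: str, base_url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build a non-streaming POST to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(
    model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0
) -> str:
    """Send the request and return the generated text from the "response" field."""
    request = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

With the Ollama server running and `ollama pull mistral` done, `generate("mistral", "Explain PagedAttention in one sentence.")` returns the model's reply as a string.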

Strengths:

- Extremely easy to set up and run

- Works on consumer hardware, including laptops

- Clean packaging system for distributing models

- Great for prototyping, experimentation, and local development

Limitations:

- Not optimized for high-throughput production workloads

- Centered on a curated model library; models outside it generally need to be in, or converted to, a supported format such as GGUF before they can be imported

- Scaling beyond a single machine requires extra work

So while Ollama is not designed to compete with vLLM in raw throughput, it wins on simplicity and accessibility, especially for developers who just want to get started.

Now that we have looked at them separately, let us compare them directly across key dimensions.

vLLM dominates in raw performance thanks to PagedAttention and GPU scheduling optimizations. Ollama, on the other hand, is not built for large-scale throughput. If you need to serve thousands of requests per second, vLLM is the clear winner.

vLLM works with a wide range of Hugging Face models and supports advanced quantization formats. Ollama focuses on a curated catalog of models that are packaged neatly for ease of use. That means vLLM gives you more flexibility, but Ollama provides consistency.

Ollama is hands-down easier to use; it feels almost like Docker for language models. vLLM requires more setup, though its built-in OpenAI-compatible API server (itself built on FastAPI) keeps that manageable.
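vLLM's OpenAI-compatible server is started with `vllm serve <model>` and listens on port 8000 by default. The sketch below builds a standard `/chat/completions` request against it using only the standard library; the helper names are hypothetical. Because Ollama also offers an OpenAI-compatible endpoint at `http://localhost:11434/v1`, the same function can target either backend by changing `base_url`.

```python
import json
import urllib.request

# Default address of `vllm serve`; pass base_url="http://localhost:11434/v1"
# to send the same request to Ollama's OpenAI-compatible endpoint instead.
VLLM_URL = "http://localhost:8000/v1"


def build_chat_request(
    model: str, prompt: str, base_url: str = VLLM_URL, max_tokens: int = 256
) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions request with one user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(
    model: str, prompt: str, base_url: str = VLLM_URL, timeout: float = 120.0
) -> str:
    """Send the request and return the first choice's message text."""
    request = build_chat_request(model, prompt, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["choices"][0]["message"]["content"]
```

For example, after `vllm serve Qwen/Qwen2.5-7B-Instruct` (an example model choice), `chat("Qwen/Qwen2.5-7B-Instruct", "Hello")` returns the reply text. Keeping client code on the OpenAI request shape is one way to make a later switch between backends a configuration change rather than a rewrite.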

vLLM is designed for data center GPUs such as A100s and H100s. Ollama runs well on consumer GPUs and Apple Silicon, making it more accessible for individual developers.

Scaling is where vLLM shines. It was built for multi-GPU clusters and cloud deployments. Ollama is better suited to single-machine setups and local experiments.
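On a single multi-GPU machine, vLLM's main scaling knob is tensor parallelism, which shards each layer across GPUs via `--tensor-parallel-size`. The sketch below assembles a `vllm serve` command line in Python; the helper name and the model are hypothetical examples, while the flags shown (`--tensor-parallel-size`, `--port`, `--max-model-len`, `--gpu-memory-utilization`) are real `vllm serve` options. Multi-node deployments additionally use pipeline parallelism (`--pipeline-parallel-size`), which is beyond this sketch.

```python
import shlex
from typing import Optional


def vllm_serve_command(
    model: str,
    tensor_parallel_size: int = 1,
    port: int = 8000,
    max_model_len: Optional[int] = None,
    gpu_memory_utilization: Optional[float] = None,
) -> list[str]:
    """Assemble argv for `vllm serve`, sharding the model across GPUs on one node."""
    if tensor_parallel_size < 1:
        raise ValueError("tensor_parallel_size must be at least 1")
    cmd = [
        "vllm", "serve", model,
        "--tensor-parallel-size", str(tensor_parallel_size),  # number of GPUs to shard across
        "--port", str(port),
    ]
    if max_model_len is not None:
        cmd += ["--max-model-len", str(max_model_len)]  # cap on context length
    if gpu_memory_utilization is not None:
        # Fraction of each GPU's memory vLLM may claim (weights plus KV cache).
        cmd += ["--gpu-memory-utilization", str(gpu_memory_utilization)]
    return cmd


print(shlex.join(vllm_serve_command("Qwen/Qwen2.5-72B-Instruct", tensor_parallel_size=4)))
# vllm serve Qwen/Qwen2.5-72B-Instruct --tensor-parallel-size 4 --port 8000
```

On a machine with four GPUs, passing the returned list to `subprocess.run(cmd, check=True)` would start the server; keeping the command in code makes it easy to template per environment.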

Here is a quick guide to help you decide.

| Use case | Best fit |
|---|---|
| Running production APIs at scale | vLLM |
| Prototyping on a laptop | Ollama |
| Serving models with long context windows | vLLM |
| Running curated open LLMs with minimal setup | Ollama |
| Multi-GPU scaling in the cloud | vLLM |
| Offline local experimentation | Ollama |

The choice really depends on your goals. If you are a developer exploring models on your laptop or need a simple setup for demos, Ollama is perfect. If you are building an application that needs to handle serious traffic or want to maximize GPU efficiency, vLLM is the better option.

Choosing the right tool is only half the story. You also need a way to deploy it. Northflank is a full-stack cloud platform built for AI workloads, letting you run APIs, workers, frontends, backends, and databases together with GPU acceleration when you need it. The key advantage is that you do not have to stitch infrastructure together yourself.

With Northflank, you can containerize your application, assign GPU resources, and expose it as an API without extra complexity. If you want to roll your own, start with our guide on self-hosting vLLM in your own cloud account. For a hands-on example, see how we deployed Qwen3 Coder with vLLM.

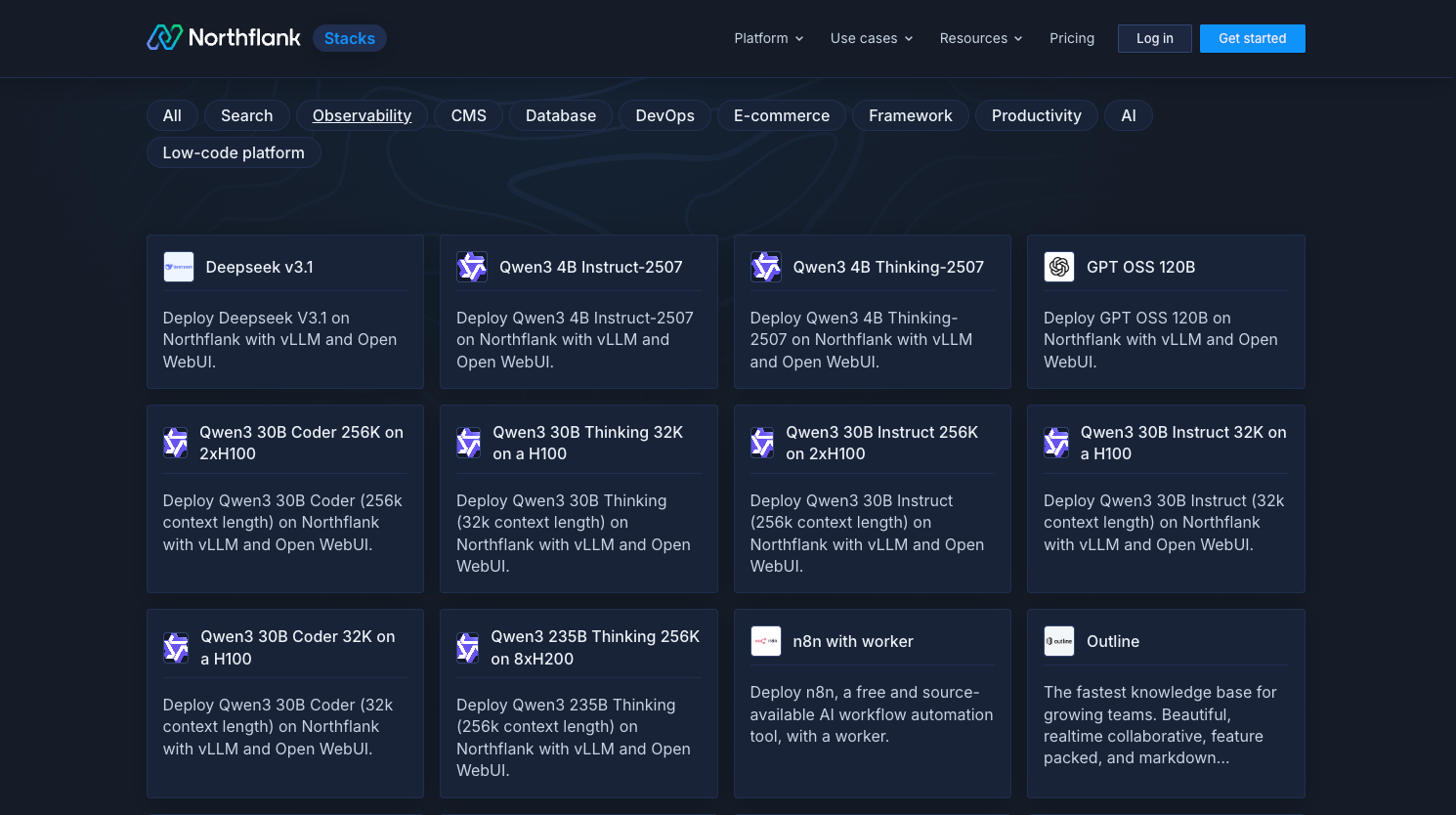

If speed matters, you can skip setup entirely with one-click templates. For example, you can deploy vLLM on AWS with Northflank in a single step, or explore the Stacks library, which includes templates configured to run vLLM with models like DeepSeek, gpt-oss, and Qwen.

This flexibility means you can start by testing Ollama locally and then scale vLLM across multiple H100s in production. Monitoring, autoscaling, and GPU provisioning are built in, so you can focus on your application while Northflank handles the rest.

vLLM and Ollama represent two different philosophies for running large language models. vLLM is built for scale, squeezing every drop of performance from GPUs and handling demanding production workloads. Ollama is built for accessibility, making it easy for developers to get models running on their laptops or small machines.

There is no single right choice. It depends on whether you value raw performance or developer simplicity. The good news is that you do not have to pick one forever. With Northflank, you can deploy both, switch between them, and scale as your needs evolve.

If you are ready to try it yourself, you can launch a vLLM or Ollama service on Northflank in just a few minutes. Sign up today or book a demo to see how it fits your workflow.