6 best MLflow alternatives: Open source & commercial ML platforms

MLflow is a popular open-source platform for managing the machine learning lifecycle. It provides experiment tracking, model packaging, and deployment capabilities.

However, teams are looking for alternatives due to MLflow's limitations in areas like multi-user collaboration, role-based access controls, and production-grade deployment infrastructure.

If you're looking for better team collaboration features, more reliable deployment options, or enterprise-grade security, this guide covers several alternatives that provide them.

And if your team is specifically focused on production model deployment and scaling, platforms like Northflank provide advanced infrastructure management and deployment capabilities that address many of MLflow's shortcomings in production environments.

Before we go into the individual platforms, here is a quick comparison to help you identify which MLflow alternative might work best for your team's needs.

| Feature | Northflank | BentoML | Kubeflow | Neptune.ai | Azure ML | ZenML |
|---|---|---|---|---|---|---|
| Primary focus | AI/ML model deployment & full-stack platform | Model serving & deployment | End-to-end ML platform | Experiment tracking | Enterprise ML platform | MLOps pipeline orchestration |
| Experiment tracking | Real-time monitoring & observability | Basic monitoring & logging | Built-in with Katib & pipelines | Advanced experiment management | Comprehensive tracking | Pipeline-based tracking |
| Model registry | Container registry for models | Centralized model store | Full model lifecycle mgmt | Model versioning & staging | Enterprise model registry | Model registry integration |
| Model deployment | Production-grade containers | API endpoints & batch jobs | KServe & distributed serving | Limited deployment options | Multiple deployment targets | Multiple deployment backends |
| RBAC & team collaboration | Fine-grained RBAC & teams | Not available | Kubernetes-native RBAC | Team workspaces & permissions | Enterprise-grade RBAC | Organization & workspace RBAC |
| Staging environments | Multi-environment support | Development & production | Namespace-based staging | Experiment comparison | Multi-workspace staging | Pipeline environment management |
| Infrastructure management | Auto-scaling & monitoring | Docker & Kubernetes | Kubernetes orchestration | Managed service only | Cloud-native scaling | Multi-cloud orchestration |
| Licensing | Commercial (usage-based) | Open source (Apache 2.0) | Open source (Apache 2.0) | Freemium + Commercial | Commercial (Azure pricing) | Open source + Commercial |
| Learning curve | Moderate (developer-friendly) | Low to moderate | Steep (Kubernetes knowledge) | Low (intuitive UI) | Moderate (Azure ecosystem) | Moderate (pipeline concepts) |
| Best for | AI/ML production deployment & scaling | Fast model serving | Kubernetes-native teams | Research & experimentation | Enterprise Azure users | MLOps pipeline standardization |

The key takeaways from the comparison are:

- Northflank specializes in production deployment, infrastructure management, and team collaboration for both AI/ML and full-stack applications

- BentoML and Kubeflow provide comprehensive model serving capabilities but with different complexity levels

- Neptune.ai delivers the best experiment tracking experience

- Azure ML provides comprehensive enterprise features within the Microsoft ecosystem

- ZenML delivers flexible MLOps orchestration with extensive integration capabilities

While MLflow has gained popularity as an open-source ML platform, many teams start looking for alternatives when they encounter its limitations in real-world production environments. Let's look at some of the things MLflow lacks and why some teams are in search of alternatives.

MLflow wasn't designed with team collaboration in mind: it lacks robust multi-user support and role-based access controls (RBAC).

Without built-in user management and permissions, sharing experiments or working on them together is hard.

This means anyone with access to your MLflow UI can delete experiments, making it risky for larger teams.

While MLflow can deploy models, its deployment options are fairly basic compared to modern production requirements.

You'll often need additional tools and significant DevOps work to handle scaling, monitoring, and production-grade infrastructure management that teams expect today.

MLflow focuses primarily on model tracking but falls short when it comes to comprehensive data versioning.

If your team needs to track dataset changes alongside model versions, you'll need to look elsewhere.

Running MLflow beyond the basic local use case requires substantial DevOps work.

You need to set up a tracking server with a backing database, possibly a file or object store for artifacts, and handle authentication yourself.
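To make the self-hosted setup described above (a tracking server, a backing database, and an artifact store) more concrete, here is a minimal sketch that assembles an `mlflow server` command. The database URI, bucket name, and helper function are hypothetical placeholders, and the sketch deliberately leaves out authentication, TLS, and process supervision, which you would still have to handle yourself.

```python
import shlex


def build_mlflow_server_command(backend_store_uri, artifact_root,
                                host="127.0.0.1", port=5000):
    """Assemble the argument list for launching an MLflow tracking server.

    The helper itself is illustrative; the flags are the standard
    `mlflow server` options for the metadata database and artifact location.
    """
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_store_uri,   # runs, params, metrics
        "--default-artifact-root", artifact_root,   # model files and other artifacts
        "--host", host,
        "--port", str(port),
    ]


# Hypothetical values: replace with your own database and object store.
command = build_mlflow_server_command(
    backend_store_uri="postgresql://mlflow_user:CHANGE_ME@db.internal:5432/mlflow",
    artifact_root="s3://example-mlflow-artifacts",
    host="0.0.0.0",
)

# Print the shell command instead of running it, so the sketch has no side effects.
print(shlex.join(command))
```

Even in this small form, the command depends on three separate pieces of infrastructure (the server process, a database, and object storage) that your team has to provision, secure, and keep running.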

The MLflow UI, while functional, can feel dated and limited when you're trying to collaborate with team members or present results to stakeholders.

The visualization options are basic, and sharing experiment results or creating reports for non-technical team members can be challenging.

As your team grows and experiments multiply, MLflow can become difficult to manage.

The lack of proper user management, combined with limited organizational features, means you'll likely hit walls when trying to scale MLflow across multiple teams or projects.

Now that you understand MLflow's limitations, you'll want to know what features to prioritize when evaluating alternatives. Here are the key capabilities that can make or break your team's ML workflow.

Your alternative should provide proper user management and permissions from day one.

Look for platforms that offer granular role-based access controls, allowing you to set different permission levels for data scientists, ML engineers, and stakeholders.

This prevents accidental deletions and ensures sensitive experiments remain secure.
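As a rough illustration of what granular role-based access control means in practice, the sketch below maps roles to permissions and checks them before a destructive action. The role names, permission names, and functions are all hypothetical and are not taken from any specific platform.

```python
from enum import Enum, auto


class Permission(Enum):
    READ_EXPERIMENT = auto()
    WRITE_EXPERIMENT = auto()
    DELETE_EXPERIMENT = auto()
    DEPLOY_MODEL = auto()


# Hypothetical role definitions: each role gets only the permissions it needs.
ROLE_PERMISSIONS = {
    "stakeholder": {Permission.READ_EXPERIMENT},
    "data_scientist": {Permission.READ_EXPERIMENT, Permission.WRITE_EXPERIMENT},
    "ml_engineer": {
        Permission.READ_EXPERIMENT,
        Permission.WRITE_EXPERIMENT,
        Permission.DEPLOY_MODEL,
    },
    "admin": set(Permission),
}


def is_allowed(role, permission):
    """Return True if the role grants the permission; unknown roles get nothing."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def delete_experiment(role, experiment_id):
    """Refuse the deletion unless the caller's role explicitly allows it."""
    if not is_allowed(role, Permission.DELETE_EXPERIMENT):
        raise PermissionError(f"role '{role}' may not delete experiment {experiment_id}")
    return f"experiment {experiment_id} deleted"


print(is_allowed("data_scientist", Permission.DELETE_EXPERIMENT))
print(delete_experiment("admin", "exp-42"))
```

With a check like this in front of every destructive operation, a data scientist can log and compare runs without being able to remove someone else's experiment by accident.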

Moving models to production shouldn't require a separate DevOps team.

Look for platforms that offer containerized deployments, auto-scaling, staging environments, and monitoring out of the box.

The ability to deploy models as REST APIs, batch jobs, or integrate with existing infrastructure is crucial for real-world applications.
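For a sense of what "deploying a model as a REST API" boils down to, here is a minimal sketch using only the Python standard library. The `predict` function is a toy stand-in for a trained model, and the `/predict` route and JSON shape are assumptions made for illustration; a production platform adds containerization, scaling, and monitoring around this core.

```python
import io
import json


def predict(features):
    """Toy stand-in for a trained model: a fixed weighted sum (hypothetical weights)."""
    weights = [0.5, -0.25, 2.0]
    return sum(w * x for w, x in zip(weights, features))


def _json_response(start_response, status, payload):
    """Serialize payload as JSON and send it with the given HTTP status."""
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def app(environ, start_response):
    """WSGI app exposing POST /predict with a JSON body {"features": [...]}."""
    if environ.get("REQUEST_METHOD") != "POST" or environ.get("PATH_INFO") != "/predict":
        return _json_response(start_response, "404 Not Found", {"error": "not found"})
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length))
        features = [float(x) for x in payload["features"]]
    except (KeyError, TypeError, ValueError):
        return _json_response(start_response, "400 Bad Request",
                              {"error": "expected JSON like {\"features\": [1, 2, 3]}"})
    return _json_response(start_response, "200 OK", {"prediction": predict(features)})


# Call the app directly with a hand-built WSGI environ (no network needed).
request_body = json.dumps({"features": [2, 4, 1]}).encode("utf-8")
environ = {
    "REQUEST_METHOD": "POST",
    "PATH_INFO": "/predict",
    "CONTENT_LENGTH": str(len(request_body)),
    "wsgi.input": io.BytesIO(request_body),
}
status_line = []
response = app(environ, lambda status, headers: status_line.append(status))
print(status_line[0], response[0].decode("utf-8"))
```

To try it over HTTP locally, you could pass `app` to `wsgiref.simple_server.make_server` from the standard library. The same request handler could also back a batch job by calling `predict` over a file of inputs.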

While MLflow offers basic tracking, modern alternatives provide more intuitive interfaces for comparing experiments, visualizing metrics, and sharing results.

Look for platforms with advanced filtering, search capabilities, and the ability to create custom dashboards for different team members.

A comprehensive model registry should go beyond simple storage.

You want version control, stage management (development, staging, production), model lineage tracking, and the ability to roll back to previous versions when needed.

Integration with your deployment pipeline is equally important.
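The registry capabilities listed above (versioning, stage management, and rollback) can be sketched with a small in-memory model. This is a toy illustration under simplified assumptions, not how any particular platform implements its registry; the class names, stage names, and artifact URIs are hypothetical.

```python
from dataclasses import dataclass, field

STAGES = ("development", "staging", "production")


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str
    stage: str = "development"


@dataclass
class ModelRegistry:
    versions: list = field(default_factory=list)
    production_history: list = field(default_factory=list)  # version numbers, oldest first

    def register(self, artifact_uri):
        """Store a new version; version numbers start at 1 and only increase."""
        model = ModelVersion(version=len(self.versions) + 1, artifact_uri=artifact_uri)
        self.versions.append(model)
        return model

    def get(self, version):
        if not 1 <= version <= len(self.versions):
            raise KeyError(f"unknown version {version}")
        return self.versions[version - 1]

    def current_production(self):
        return self.production_history[-1] if self.production_history else None

    def promote(self, version, stage):
        """Move a version to a stage; a new production version demotes the old one."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage {stage!r}")
        model = self.get(version)
        current = self.current_production()
        if stage == "production":
            if current == version:
                return model
            if current is not None:
                self.get(current).stage = "staging"
            self.production_history.append(version)
        elif current == version:
            raise ValueError("use rollback() to take the live version out of production")
        model.stage = stage
        return model

    def rollback(self):
        """Restore the previous production version and demote the current one."""
        if len(self.production_history) < 2:
            raise RuntimeError("no earlier production version to roll back to")
        retired = self.production_history.pop()
        self.get(retired).stage = "staging"
        restored = self.get(self.production_history[-1])
        restored.stage = "production"
        return restored


registry = ModelRegistry()
registry.register("s3://example-models/churn/1")  # hypothetical URIs
registry.register("s3://example-models/churn/2")
registry.promote(1, "production")
registry.promote(2, "production")
print(registry.rollback())
```

Keeping an explicit production history is what makes rollback a one-step operation instead of a manual search for the last known-good model.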

Your MLflow alternative should play well with your existing tools.

Check for integrations with popular ML frameworks (PyTorch, TensorFlow, scikit-learn), cloud providers, CI/CD systems, and data storage solutions.

The more seamlessly it fits into your current workflow, the smoother your transition will be.

Consider both upfront costs and long-term expenses.

Open-source solutions might seem free, but factor in infrastructure, maintenance, and support costs.

Commercial platforms often provide better support and managed services, but can become expensive as you scale.

Evaluate based on your team size, usage patterns, and budget constraints.

Once you understand MLflow's limitations, it becomes clear that different teams need different solutions. Here are the six best alternatives to MLflow, each addressing specific challenges that teams encounter when scaling their ML operations.

Northflank goes beyond basic experiment tracking to provide a complete platform designed for deploying and scaling production-ready ML models and AI applications.

Where MLflow struggles with production deployment and team collaboration, Northflank excels by combining containerized infrastructure with advanced staging environments, Git-based CI/CD, and enterprise-grade RBAC.

Key overlap with MLflow: Model serving and deployment

Northflank directly addresses MLflow's deployment limitations by providing production-grade container deployment for ML models.

Northflank gives you a comprehensive deployment platform that handles scaling, monitoring, and team collaboration seamlessly.

Key features:

- Production-grade Docker container deployment for ML models with full runtime control and customization

- Enterprise-grade staging environments and production pipelines for secure model deployment workflows

- Team collaboration with fine-grained RBAC, addressing MLflow's biggest weakness in multi-user environments

- Multi-environment support for development, staging, and production model deployments

- Git-based CI/CD with automated model deployment triggers

- Real-time monitoring and observability tools for model performance tracking

Pricing:

- Free tier: Generous limits for testing and small projects

- CPU instances: Starting at $2.70/month ($0.0038/hr) for small workloads, scaling to production-grade dedicated instances

- GPU support: NVIDIA A100 40GB at $1.42/hr, A100 80GB at $1.76/hr, H100 at $2.74/hr, up to B200 at $5.87/hr

- Enterprise BYOC: Flat fees for clusters, vCPU, and memory on your infrastructure, no markup on your cloud costs

- Pricing calculator available to estimate costs before you start

- Fully self-serve platform, get started immediately without sales calls

- No hidden fees, egress charges, or surprise billing complexity
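To turn the hourly rates listed above into a rough budget, a small calculation like the following can help. The rates are copied from the list; the instance labels, the helper function, and the 730-hours-per-month figure are assumptions for illustration, so results may differ slightly from the monthly figures quoted above and from what the pricing calculator reports.

```python
# Hourly rates in USD, taken from the pricing list above.
# The dictionary keys are hypothetical labels, not official plan names.
HOURLY_RATES_USD = {
    "cpu-small": 0.0038,
    "a100-40gb": 1.42,
    "a100-80gb": 1.76,
    "h100": 2.74,
    "b200": 5.87,
}

HOURS_PER_MONTH = 730  # assumption: average hours in a month (8760 / 12)


def estimate_cost(instance, hours, count=1):
    """Estimate usage cost in USD for `count` instances running for `hours` each."""
    if hours < 0 or count < 0:
        raise ValueError("hours and count must be non-negative")
    return round(HOURLY_RATES_USD[instance] * hours * count, 2)


# A 40-hour fine-tuning job on one H100 versus an always-on A100 40GB endpoint.
print("fine-tuning job:", estimate_cost("h100", hours=40))
print("always-on endpoint:", estimate_cost("a100-40gb", hours=HOURS_PER_MONTH))
```

Usage-based pricing makes the difference between bursty training jobs and always-on inference endpoints easy to see before you commit to either.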

Why Northflank excels where MLflow falls short in production:

MLflow's deployment capabilities are basic and require significant DevOps work to scale.

Northflank provides enterprise-grade infrastructure management, automatic scaling, and proper staging environments out of the box.

While MLflow lacks RBAC and team collaboration features, Northflank offers comprehensive user management and permissions that large teams require.

Best for: Teams moving from experimentation to production who need reliable model deployment, proper staging environments, and enterprise-grade collaboration features. Ideal for organizations requiring both ML model deployment and full-stack application support.

See how Cedana uses Northflank to deploy workloads onto Kubernetes with microVMs and secure runtimes

BentoML focuses specifically on converting trained models into production-ready serving systems.

It is a Python library for building online serving systems optimized for AI apps and model inference.

Unlike MLflow's basic deployment options, BentoML provides specialized serving capabilities designed for production environments.

Key features:

- Production-ready ML service creation with API endpoint generation

- Framework-agnostic approach supporting TensorFlow, PyTorch, scikit-learn, and more

- Docker and Kubernetes deployment with containerization built-in

- Adaptive micro-batching dynamically adjusts batch size and batching intervals based on real-time request patterns for optimized performance
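The micro-batching idea mentioned in the last feature can be illustrated with a simplified sketch. This is not BentoML's implementation: it uses a fixed batch size and wait window, whereas an adaptive batcher tunes those values at runtime based on observed traffic. The function name and parameters are hypothetical.

```python
def micro_batch(arrivals_ms, max_batch_size=4, max_wait_ms=10.0):
    """Group request arrival times (sorted, in milliseconds) into batches.

    A batch is closed when it is full, or when the next request arrives more
    than `max_wait_ms` after the first request in the batch.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    batches = []
    current = []
    for arrival in arrivals_ms:
        batch_is_full = len(current) >= max_batch_size
        window_expired = bool(current) and arrival - current[0] > max_wait_ms
        if current and (batch_is_full or window_expired):
            batches.append(current)
            current = []
        current.append(arrival)
    if current:
        batches.append(current)
    return batches


# A burst of five requests followed by two stragglers 26 ms later.
print(micro_batch([0, 1, 2, 3, 4, 30, 31]))
# → [[0, 1, 2, 3], [4], [30, 31]]
```

Batching a burst into one model call improves throughput, while the wait window caps how long any single request is held back. An adaptive scheme adjusts both limits as request patterns change.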

Best for: Teams that need fast, reliable model serving without the complexity of a full MLOps platform. Perfect for organizations already satisfied with their experiment tracking but need better deployment capabilities.

For teams evaluating BentoML alongside other model serving solutions, check out our detailed comparison in 6 best BentoML alternatives for self-hosted AI model deployment.

Kubeflow is an advanced, scalable platform for running machine learning workflows on Kubernetes clusters.

It provides comprehensive ML workflow orchestration that MLflow lacks, making it ideal for teams already operating in Kubernetes environments.

Key features:

- Complete ML workflow orchestration with pipeline management

- Kubernetes-native ML platform with distributed training capabilities

- Experiment and run tracking: with Kubeflow Pipelines or Kubeflow Notebooks, track every hyperparameter variation and configuration within a given experiment

- Enterprise-grade multi-user support with namespace-based isolation

Best for: Organizations with Kubernetes expertise who need end-to-end ML platform capabilities. Ideal for teams running complex, distributed ML workloads that require sophisticated orchestration.

If you're considering Kubeflow among other orchestration platforms, our guide on Top 7 Kubeflow alternatives for deploying AI in production provides detailed comparisons.

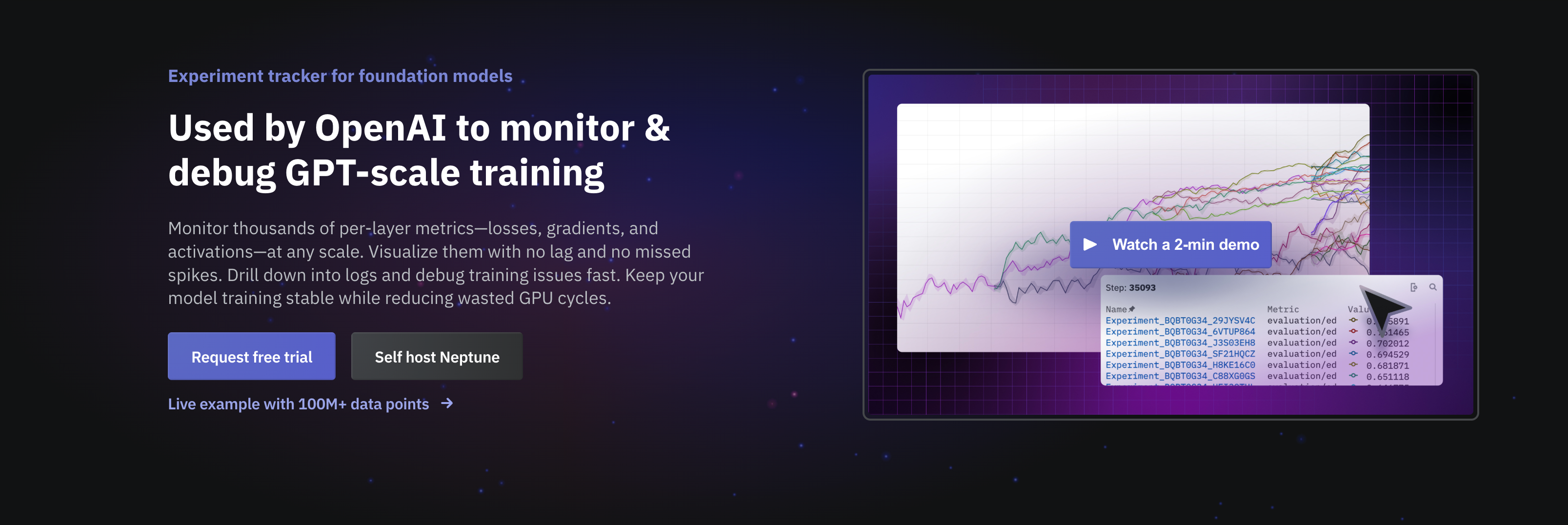

Neptune is a lightweight experiment tracker for ML teams that struggle with debugging and reproducing experiments, sharing results, and messy model handover.

It directly addresses MLflow's collaboration and visualization limitations with a purpose-built experiment tracking platform.

Key features:

- Advanced experiment management and collaboration with team workspaces

- Version production-ready models and metadata associated with them in a single place. Review models and transition them between development stages

- Superior visualization and team features compared to MLflow's basic UI

- Protect your projects, ensure correct access levels, and collaborate securely with role-based access control and SSO

Best for: Research teams and organizations prioritizing experiment tracking and collaboration over deployment capabilities. Perfect for teams that need better visualization and sharing than MLflow provides.

Azure ML provides a comprehensive enterprise ML platform within the Microsoft ecosystem, offering capabilities that far exceed MLflow's scope.

It offers multiple interfaces, including the Azure Machine Learning studio UI, the Azure Machine Learning V2 CLI, and the Python Azure Machine Learning V2 SDK to accommodate different workflows and preferences.

Key features:

- Microsoft's comprehensive ML platform with enterprise-grade security and compliance

- Azure role-based access control (Azure RBAC) to manage access to Azure resources, giving users the ability to create new resources or use existing ones

- Integrated development environment with seamless Azure service integration

- Production deployment at scale with multiple target environments

Best for: Enterprise teams already invested in the Microsoft ecosystem who need comprehensive ML platform capabilities with enterprise support and compliance features.

ZenML allows orchestrating ML pipelines independent of any infrastructure or tooling choices.

It provides the pipeline orchestration and workflow management that MLflow lacks, focusing on creating reproducible ML workflows.

Key features:

- MLOps pipeline framework with declarative workflow definition

- Flexible deployment backends supporting multiple cloud providers and tools

- ZenML Pro significantly enhances collaboration through comprehensive Role-Based Access Control (RBAC) with detailed permissions across organizations, workspaces, and projects

- Production orchestration with extensive integration ecosystem

Best for: Teams needing comprehensive MLOps workflow orchestration and standardization. Ideal for organizations that want to prevent vendor lock-in while maintaining consistent ML practices across different tools.

Understanding how MLflow stacks up against specific alternatives can help you make the right choice for your team's needs. Here are three quick comparisons (MLflow versus Kubeflow, BentoML, and Northflank) that highlight the most important differences.

Choose Kubeflow if: You need complex ML pipeline orchestration, your team has Kubernetes expertise, and you want enterprise-grade multi-user support.

Choose MLflow over Kubeflow if: You want simple experiment tracking with minimal setup overhead and don't need advanced pipeline orchestration.

Choose BentoML if: Your primary need is fast, reliable model serving, and you're satisfied with your current experiment tracking solution.

Choose MLflow over BentoML if: You want an all-in-one platform for both experiment tracking and basic model deployment, even if the deployment features are limited.

Choose Northflank if: You need production-grade model deployment, proper staging environments, team collaboration features, and infrastructure that scales automatically.

Choose MLflow over Northflank if: You're primarily focused on experiment tracking and model management, with basic deployment needs that don't require advanced infrastructure features.

MLflow is completely open-source under the Apache 2.0 license, so you can use it freely and run it locally with minimal setup.

However, running MLflow beyond the basic local use case requires substantial DevOps work.

You need to set up a tracking server with a backing database, possibly a file or object store for artifacts, and handle authentication yourself.

While the software is free, the hidden costs of infrastructure, maintenance, security, and scaling often make commercial alternatives more cost-effective for production environments, especially when you need enterprise features like RBAC and advanced deployment capabilities.

Here is a quick summary by use case:

- Best for teams needing RBAC and collaboration: Northflank and ZenML provide comprehensive role-based access controls and team management features that MLflow lacks.

- Best for production deployment and scaling: Northflank excels at production-grade model deployment with auto-scaling infrastructure, while Kubeflow offers enterprise-scale orchestration for Kubernetes environments.

- Best for experiment tracking and visualization: Neptune.ai delivers the most advanced experiment management interface with good visualization and collaboration tools.

- Best for budget-conscious teams: BentoML and Kubeflow offer good open-source capabilities without licensing costs, though they require more setup effort.

- Best for enterprise requirements: Azure ML provides comprehensive enterprise features within the Microsoft ecosystem, while Northflank offers enterprise-grade deployment with flexible infrastructure options.

When teams outgrow MLflow's basic deployment capabilities, they need a platform built for production ML workloads.

Northflank combines enterprise-grade infrastructure management with the RBAC and staging environments that MLflow lacks.

For teams serious about deploying models reliably at scale, Northflank provides a comprehensive solution that goes beyond what MLflow offers.

Looking to deploy your ML models in production? Sign up for Northflank's free tier to get started immediately, or book a demo to see how Northflank can streamline your model deployment workflow.