Top Vercel Sandbox alternatives for secure AI code execution and sandbox environments

When you’re building AI agents that generate and execute code, you need secure sandbox environments that can isolate untrusted code without compromising your infrastructure.

Vercel Sandbox made waves when it launched in beta, offering Firecracker microVMs with up to 45-minute execution times. But for many teams building production AI applications, Vercel's approach has significant limitations.

Maybe you need sandboxes that persist longer than 45 minutes. Maybe you want to run workloads in your own cloud. Or maybe you need a platform that handles more than just ephemeral code execution.

This article covers the top Vercel Sandbox alternatives, comparing them across isolation methods, pricing models, persistence capabilities, and real-world production readiness.

If you're evaluating secure sandbox platforms for AI code execution:

- Vercel Sandbox is good for quick demos and Vercel-native workflows. It offers Firecracker isolation and a simple SDK, but it has a 45-minute runtime limit and no BYOC option.

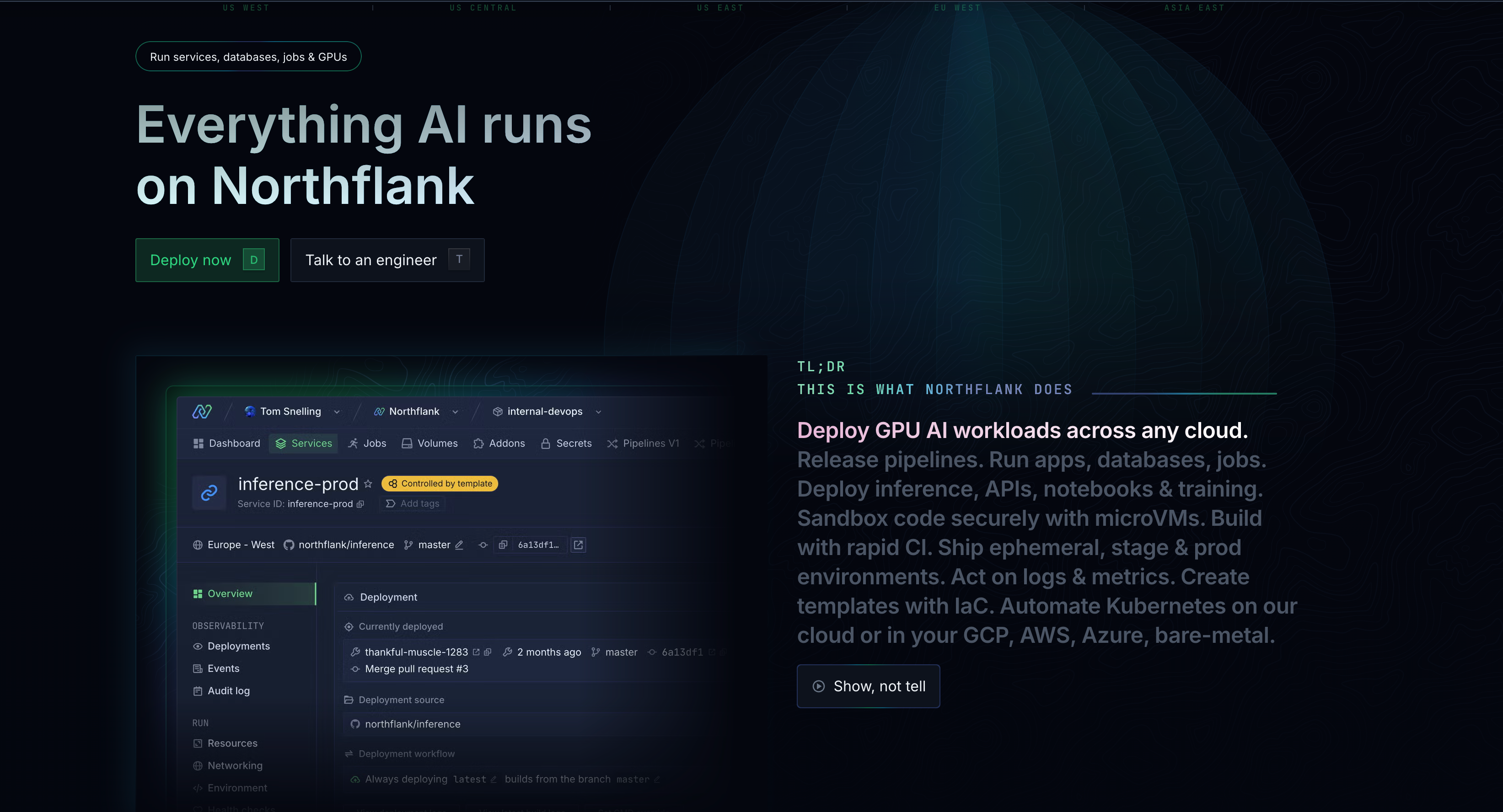

- Northflank offers production-proven microVM isolation with unlimited session duration, full orchestration, and runs in your cloud. Better for serious AI infrastructure and long-running workloads.

- E2B.dev provides Firecracker sandboxes with good persistence but no production self-hosting and limited infrastructure control.

- Modal excels at Python ML workloads with gVisor isolation but lacks multi-language support and persistent sessions.

- Daytona focuses on fast container starts but is weak on streaming and lacks microVM-level security by default.

Vercel Sandbox securely runs untrusted code, such as AI-generated code, in isolated cloud environments. You can create ephemeral, isolated microVMs using the new Sandbox SDK, with execution times of up to 45 minutes. It is now in beta and available to customers on all plans.

Under the hood, Vercel Sandbox uses Firecracker microVMs with Node.js and Python runtimes. Execution time is capped at 45 minutes, and each sandbox can have at most 8 vCPUs, with 2 GB of memory allocated per vCPU.
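To make these limits concrete, here is a minimal sketch that sizes a request against the figures quoted above. The function names are hypothetical, the 1 vCPU lower bound is an assumption, and nothing here calls the real Sandbox SDK. The numbers are beta figures and may change.

```python
# Limits quoted in the text above (beta figures; they may change).
MAX_VCPUS = 8
MEMORY_GB_PER_VCPU = 2
MAX_RUNTIME_MINUTES = 45


def memory_for(vcpus: int) -> int:
    """Memory in GB that accompanies a given vCPU count."""
    return vcpus * MEMORY_GB_PER_VCPU


def check_request(vcpus: int, runtime_minutes: float) -> list[str]:
    """Return the reasons a hypothetical request exceeds the quoted limits."""
    problems = []
    # The lower bound of 1 vCPU is an assumption, not a documented figure.
    if not 1 <= vcpus <= MAX_VCPUS:
        problems.append(f"vCPUs must be between 1 and {MAX_VCPUS}, got {vcpus}")
    if runtime_minutes > MAX_RUNTIME_MINUTES:
        problems.append(
            f"runtime of {runtime_minutes} min exceeds the "
            f"{MAX_RUNTIME_MINUTES}-minute cap"
        )
    return problems


if __name__ == "__main__":
    print(memory_for(MAX_VCPUS))   # 16 (GB at the largest size)
    print(check_request(4, 30))    # [] because the request fits
    print(check_request(16, 120))  # two problems are reported
```

The largest sandbox therefore tops out at 16 GB of memory, and any job that needs more than 45 minutes has to be split up or moved elsewhere.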

Strengths:

- True microVM isolation via Firecracker

- Simple SDK integration with AI workflows

- Standalone SDK that can be executed from any environment, including non-Vercel platforms

- Active CPU pricing - you only pay compute rates when your code is actively executing

Limitations:

- Maximum runtime duration of 45 minutes - inadequate for long-running AI workloads

- Currently, Vercel Sandbox is only available in the iad1 region - no global deployment options

- No Bring Your Own Cloud (BYOC) or self-hosting capabilities

- Limited to Node.js and Python runtimes

- Still in beta with unclear production readiness timeline

Vercel tracks sandbox usage by Active CPU time, Provisioned memory, Network bandwidth, and Sandbox creations:

Hobby Plan:

- 5 CPU hours

- 420 GB-hours provisioned memory

- 20 GB network

- 5,000 sandbox creations

- Up to 10 concurrent sandboxes

Pro Plan:

- Same base allotments as Hobby

- 100,000 sandbox creations (vs 5,000)

- Up to 150 concurrent sandboxes

- Overage pricing: $0.128/CPU hour, $0.0106/GB-hr memory, $0.15/GB network, $0.60/1M creations
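As a rough illustration of how these meters combine, the sketch below estimates Pro plan overage from the allotments and rates listed above. The meter names and the `overage_cost` helper are hypothetical, and treating the allotments as per-billing-period is an assumption, since the period is not stated here.

```python
# Pro plan figures quoted above. Treating them as per-billing-period
# allotments is an assumption; the period is not stated in the text.
INCLUDED = {
    "cpu_hours": 5,
    "memory_gb_hours": 420,
    "network_gb": 20,
    "creations": 100_000,
}

# Overage rates in USD per unit of each meter.
RATES = {
    "cpu_hours": 0.128,
    "memory_gb_hours": 0.0106,
    "network_gb": 0.15,
    "creations": 0.60 / 1_000_000,  # $0.60 per 1M creations
}


def overage_cost(usage: dict[str, float]) -> dict[str, float]:
    """Charge only the usage above each included allotment."""
    costs = {}
    for meter, rate in RATES.items():
        extra = max(0.0, usage.get(meter, 0) - INCLUDED[meter])
        costs[meter] = extra * rate
    costs["total"] = sum(costs.values())
    return costs


if __name__ == "__main__":
    # Made-up usage figures, purely for illustration.
    example = {
        "cpu_hours": 50,
        "memory_gb_hours": 1_000,
        "network_gb": 100,
        "creations": 1_100_000,
    }
    for meter, cost in overage_cost(example).items():
        print(f"{meter}: ${cost:.2f}")  # total comes to about $24.51
```

In this made-up example, network transfer is the largest line item, which is worth checking before assuming CPU time dominates the bill.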

The 45-minute session limit makes Vercel Sandbox unsuitable for persistent AI workloads where users might return to projects hours or days later.

Secure sandbox environments are essential for:

1️⃣ AI agent code execution - Safely running LLM-generated scripts and tools

2️⃣ Developer environments - Providing isolated coding environments for testing

3️⃣ Multi-tenant SaaS platforms - Running customer code securely at scale

4️⃣ Educational platforms - Teaching programming with safe execution environments

5️⃣ Data science workflows - Running untrusted analysis scripts and visualizations

If you're building production AI applications, developer tools, or platforms where end users execute code, you need more than basic sandboxing.

| Platform | Isolation method | Max session duration | BYOC support | Multi-language | Best for |
|---|---|---|---|---|---|
| Northflank | microVM (Kata, gVisor, Firecracker) | Unlimited | Yes | Yes | Production AI infrastructure |
| Vercel Sandbox | microVM (Firecracker) | 45 minutes | No | Node.js, Python | Vercel-native demos |
| E2B.dev | microVM (Firecracker) | 24 hours active | Experimental | Yes | AI agent sandboxes |
| Modal | Container (gVisor) | Stateless | No | Python only | ML workloads |
| Daytona | Container (optional Kata) | Variable | No | Yes | Fast dev environments |
| Cloudflare Workers | V8 Isolates | Stateless | No | JS/WASM only | Edge functions |
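The comparison table can also be treated as data and filtered by hard requirements. The sketch below is one way to do that. The `Platform` record and `shortlist` helper are hypothetical, and the isolation and BYOC labels are simplified from the table rather than taken from any vendor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    isolation: str  # simplified label: "microVM", "container", or "isolate"
    byoc: str       # "yes", "no", or "experimental", as in the table


# Rows transcribed from the comparison table above.
PLATFORMS = [
    Platform("Northflank", "microVM", "yes"),
    Platform("Vercel Sandbox", "microVM", "no"),
    Platform("E2B.dev", "microVM", "experimental"),
    Platform("Modal", "container", "no"),
    Platform("Daytona", "container", "no"),
    Platform("Cloudflare Workers", "isolate", "no"),
]


def shortlist(
    microvm_only: bool = False,
    byoc: bool = False,
    allow_experimental_byoc: bool = False,
) -> list[str]:
    """Return platform names that satisfy the given hard requirements."""
    accepted_byoc = {"yes", "experimental"} if allow_experimental_byoc else {"yes"}
    names = []
    for platform in PLATFORMS:
        if microvm_only and platform.isolation != "microVM":
            continue
        if byoc and platform.byoc not in accepted_byoc:
            continue
        names.append(platform.name)
    return names


if __name__ == "__main__":
    print(shortlist(microvm_only=True))
    print(shortlist(microvm_only=True, byoc=True))
```

Filtering on hard requirements first keeps the later, more subjective comparison (pricing, developer experience) limited to platforms that can actually meet them.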

Northflank has been running secure microVMs in production since 2019, executing over 2 million isolated workloads monthly. Unlike sandbox-only solutions, Northflank is a complete cloud platform that excels at secure code execution.

Strengths:

- Multiple isolation options: Kata Containers with Cloud Hypervisor, gVisor, and Firecracker microVMs

- Unlimited session duration: Sandboxes persist until you terminate them - critical for long-running AI workloads

- Full BYOC support: Deploy in your AWS, GCP, Azure, or bare metal infrastructure

- Complete platform: Run AI agents, APIs, databases, and GPU workloads with consistent security

- Production-proven scale: Powers secure multi-tenant deployments for companies like Writer and Sentry

- Polyglot support: Any language, runtime, or framework

- Enterprise-ready: SSO, RBAC, audit logging, and compliance tools built-in

Limitations:

- More comprehensive than pure sandbox tools - may be overkill for simple use cases

- Cold-start latency higher than container-only solutions (though tunable)

Who it's for:

- Teams building serious AI infrastructure requiring persistent sessions

- Companies needing enterprise controls and custom cloud deployments

- Platforms running multi-tenant workloads at scale

Pricing:

- Transparent usage-based pricing: ~$0.01667/vCPU-hour, $0.00833/GB-hour RAM

- No forced plan tiers or minimum fees

- GPU and spot pricing available
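For a sense of scale, the quoted per-hour rates can be turned into a quick estimate. This is a minimal sketch with a hypothetical `compute_cost` helper. The rates are the approximate figures listed above, and the estimate ignores GPU, storage, network, and any other charges.

```python
# Approximate usage-based rates quoted above, in USD.
VCPU_HOUR = 0.01667
GB_RAM_HOUR = 0.00833


def compute_cost(vcpus: float, memory_gb: float, hours: float) -> float:
    """Estimate compute cost only; other charges are out of scope here."""
    return hours * (vcpus * VCPU_HOUR + memory_gb * GB_RAM_HOUR)


if __name__ == "__main__":
    # A hypothetical 2 vCPU / 4 GB sandbox kept alive for a full day.
    print(f"${compute_cost(2, 4, 24):.2f}")  # prints $1.60
```

Because billing is linear in time, a sandbox that stays up for days costs proportionally more, so idle sessions are still worth shutting down even without a session cap.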

E2B.dev focuses specifically on AI agent sandboxes using Firecracker microVMs, with excellent SDK design and persistence features.

Strengths:

- Firecracker microVM isolation

- Up to 24-hour active sessions, 30-day paused sessions

- Clean SDK for AI integration

- Multi-language support (Python, JavaScript, R, Java, Bash)

Limitations:

- Self-hosting still experimental, not production-ready

- Limited to sandbox use cases only

- Limited infrastructure flexibility, with no production-ready BYOC option

- Pricing lacks transparency

Who it's for:

- AI agent developers needing reliable sandboxes

- Teams focused purely on code execution (not full infrastructure)

Pricing:

- Free tier: 2 vCPU, 512MB RAM, ~1hr sessions

- Pro: $150/month + usage fees

Modal uses gVisor containers with heavy optimization for machine learning and data science workloads.

Strengths:

- Sub-second container starts with custom Rust runtime

- Excellent GPU support (T4 to H200)

- Container keep-alive and checkpointing

- Strong for batch ML jobs and model inference

Limitations:

- Python-only for function definitions

- No BYOC or self-hosting

- Limited to serverless model (no persistent services)

- Opaque pricing structure

Who it's for:

- Python-focused ML teams

- Data scientists running batch workloads

- Teams needing GPU access for model inference

Daytona pivoted to AI code execution in 2025, focusing on fast container starts with optional enhanced isolation.

Strengths:

- Extremely fast cold starts

- Docker image support with Git integration

- Kata Containers available for enhanced isolation

Limitations:

- Default configuration uses standard containers (weaker isolation)

- Poor streaming support and session persistence

- Limited orchestration capabilities

Who it's for:

- Teams prioritizing startup speed over security

- Development environment use cases

Cloudflare takes a completely different approach using V8 isolates rather than containers or VMs.

Strengths:

- Zero cold starts, always warm

- 200+ global edge locations

- Excellent for stateless functions

- Strong security via V8 isolate technology

Limitations:

- JavaScript/WebAssembly only

- No persistent state or long-running processes

- No GPU support

- Not suitable for complex AI workloads

Who it's for:

- Edge computing and API middleware

- Simple stateless functions

- Teams needing global distribution

The fundamental difference between Northflank and other sandbox solutions is scope and production readiness.

While Vercel Sandbox and others focus purely on short-lived code execution, Northflank provides complete infrastructure for AI applications:

- Persistent AI agents that maintain state across user sessions

- Backend APIs and databases with the same security guarantees

- GPU workloads for model inference and training

- Scheduled jobs for batch processing and maintenance

- Multi-region deployment with consistent experience

Companies like Writer and Sentry trust Northflank to run multi-tenant customer deployments for untrusted code at massive scale. This isn't theoretical - it's battle-tested infrastructure handling millions of secure workloads monthly.

Unlike platform-locked solutions:

- Managed cloud: Zero setup, just deploy

- BYOC: Run in your existing AWS, GCP, Azure, or bare metal

- Multi-region: Global deployment with consistent APIs

- Any runtime: Not locked to specific languages or frameworks

While most sandbox tools lack enterprise features, Northflank includes:

- SSO and directory synchronization

- Granular role-based access control

- Comprehensive audit logging

- Compliance tools and certifications

- SLAs with dedicated support

When evaluating Vercel Sandbox alternatives, consider these key factors:

1. Session persistence: Can users return to their work later, or do sessions expire quickly?

2. Infrastructure control: Do you need BYOC, custom networking, or specific compliance requirements?

3. Scale requirements: Are you building for thousands of concurrent users or just internal tools?

4. Language support: Do you need more than Node.js and Python?

5. Integration depth: Do you need just sandboxes or a complete platform for your AI application?
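These questions can be turned into a quick self-check against the Vercel Sandbox limits described earlier (45-minute sessions, no BYOC, Node.js and Python only, iad1 only). The sketch below is illustrative. The `Requirements` fields and the `vercel_sandbox_blockers` helper are hypothetical names, and the check covers only the constraints stated in this article.

```python
from dataclasses import dataclass, field

# Constraints as stated in this article (beta figures; they may change).
SUPPORTED_LANGUAGES = {"node.js", "python"}
SUPPORTED_REGIONS = {"iad1"}


@dataclass
class Requirements:
    return_to_sessions_later: bool = False
    needs_byoc: bool = False
    languages: set[str] = field(default_factory=lambda: {"python"})
    regions: set[str] = field(default_factory=lambda: {"iad1"})


def vercel_sandbox_blockers(req: Requirements) -> list[str]:
    """List the stated Vercel Sandbox limits that conflict with req."""
    blockers = []
    if req.return_to_sessions_later:
        blockers.append("sessions end after 45 minutes")
    if req.needs_byoc:
        blockers.append("no BYOC or self-hosting")
    unsupported = {lang.lower() for lang in req.languages} - SUPPORTED_LANGUAGES
    if unsupported:
        blockers.append("unsupported runtimes: " + ", ".join(sorted(unsupported)))
    missing_regions = {region.lower() for region in req.regions} - SUPPORTED_REGIONS
    if missing_regions:
        blockers.append("unavailable regions: " + ", ".join(sorted(missing_regions)))
    return blockers


if __name__ == "__main__":
    demo = Requirements()  # short-lived Python demo in iad1
    product = Requirements(
        return_to_sessions_later=True,
        needs_byoc=True,
        languages={"python", "rust"},
    )
    print(vercel_sandbox_blockers(demo))     # [] because nothing conflicts
    print(vercel_sandbox_blockers(product))  # three blockers are reported
```

An empty list means the stated limits do not rule Vercel Sandbox out. Any blocker is a reason to look at the alternatives covered above.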

For most production AI applications, the 45-minute session limit and platform lock-in of Vercel Sandbox become significant constraints. Users expect to return to their projects, and developers need infrastructure flexibility.

Vercel Sandbox helped introduce secure sandboxing to the Vercel ecosystem, but it's designed primarily for short-lived demos and Vercel-native workflows.

For teams building production AI applications, the key question isn't just "can it sandbox code?" but "can it run my complete AI infrastructure securely at scale?"

Northflank leads because it's the only platform that combines:

- Production-proven microVM isolation (2M+ workloads monthly)

- Unlimited session persistence for real user workflows

- Complete platform that grows with your AI application

- True infrastructure flexibility (managed or BYOC)

- Transparent, predictable pricing without platform lock-in

Don't settle for ephemeral sandboxes when your AI applications need persistent, scalable infrastructure. With Northflank, secure code execution is just one part of a comprehensive cloud platform built for the AI era.