6 best BentoML alternatives for self-hosted AI model deployment (2026)

BentoML is a widely used open-source tool for packaging and serving machine learning models. It works well for local development and setting up inference endpoints.

If you’re looking for alternatives to BentoML, say to add autoscaling, get more visibility into your workloads, or support things like APIs, databases, or background jobs, this guide covers several platforms that can help.

We’ll look at platforms like Northflank, Modal, RunPod, and KServe, tools that support a mix of AI and infrastructure needs. For example, Northflank supports both AI and non-AI workloads on one platform. You can deploy model trainers, inference jobs, Postgres, Redis, and schedulers side-by-side, with autoscaling, CI/CD, logs, metrics, and secure runtimes built in.

Let’s look at a breakdown of BentoML alternatives that fit different use cases, from GPU-backed model serving to full-stack deployment.

If you're looking into other options beyond BentoML, this section covers platforms that support model serving, training, and broader application needs:

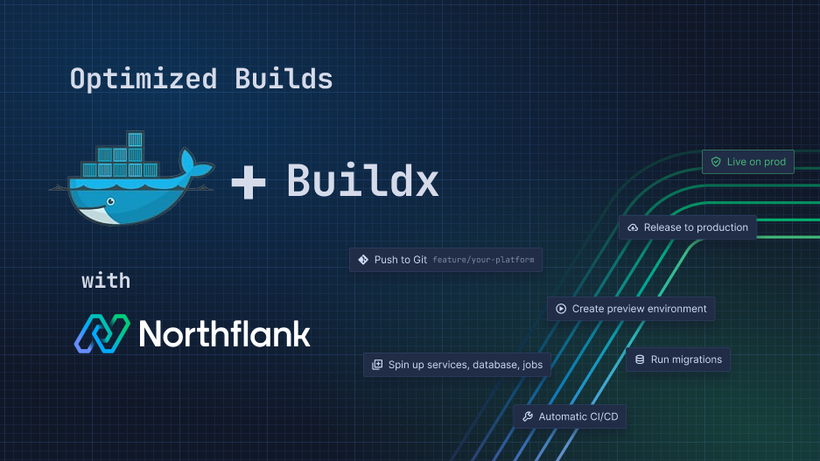

- Northflank – For teams that want to run AI models and full applications side by side on the same platform. Supports model serving, training jobs, APIs, background workers, and databases like Postgres and Redis. Built-in autoscaling, monitoring, and continuous delivery. You can also run GPU workloads in your own cloud using Bring Your Own Cloud (BYOC).

- Modal – Designed for running Python functions and ML inference at scale. Autoscaling is handled for you, with minimal infrastructure setup required.

- RunPod – Lets you run custom containers on GPU machines. Helpful for training and inference workloads, especially when you want access to spot instances or specific GPU types.

- Anyscale – Built around Ray for distributed compute. Useful for teams that are already using Ray to manage large-scale training jobs or data pipelines.

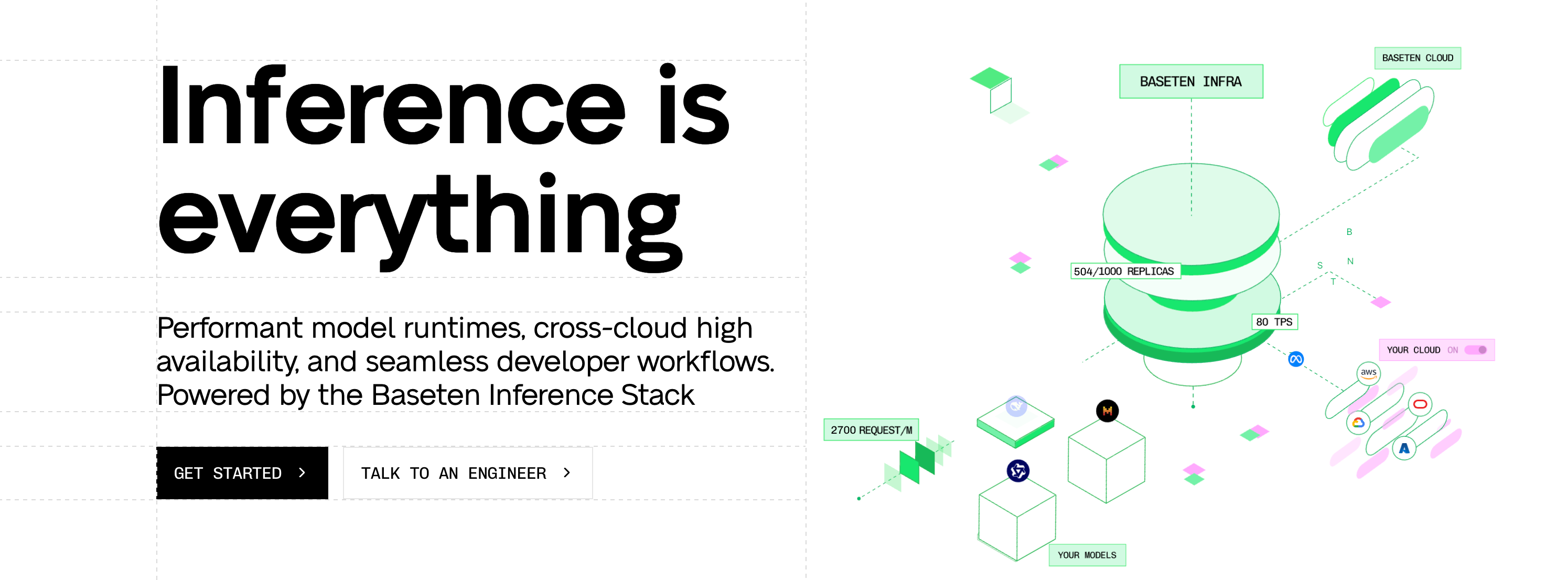

- Baseten – Offers a low-code UI for deploying models, with autoscaling and basic observability tools included. Good for ML engineers who want to focus on iteration.

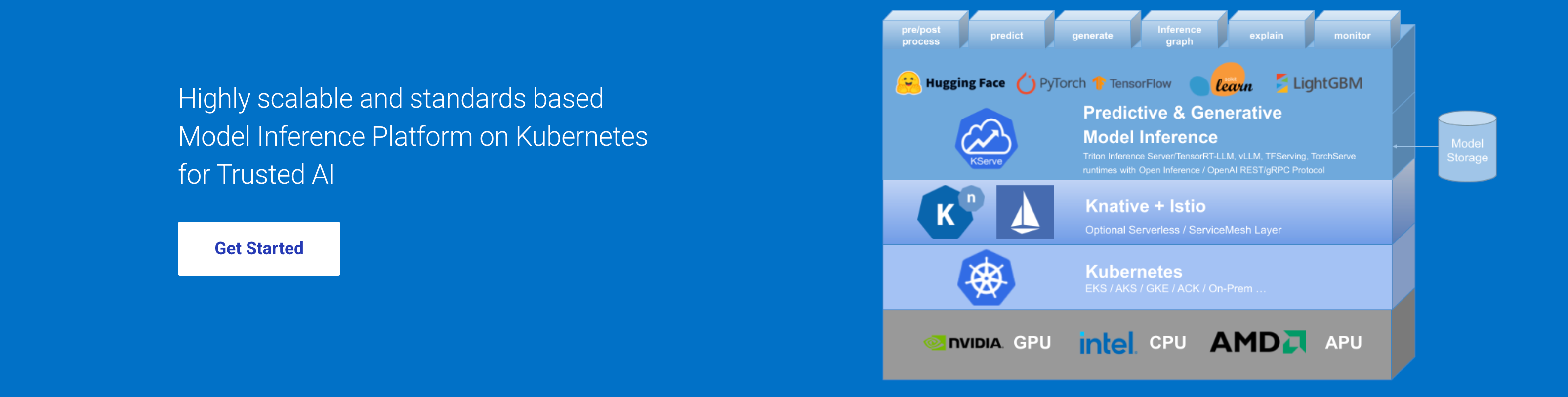

- KServe – An open-source model serving framework built for Kubernetes. Best suited for infrastructure-savvy teams that prefer OSS and want to run models in-cluster.

Deploy models, jobs, and full applications on a single platform

BentoML is useful for serving models, but if you're working toward production or managing multiple services, it helps to step back and ask what else your team might need.

-

Do you need to serve models only, or are you also training them regularly?

Some platforms focus on inference, while others let you run full training pipelines, manage datasets, and schedule recurring jobs all in one place.

-

Are you building a single endpoint, or do you want to run supporting services like APIs, schedulers, Redis, or Postgres alongside your model?

If your application depends on other services, it helps to deploy everything together with shared monitoring, networking, and deployment flows.

-

Do you need autoscaling and monitoring that work outside the BentoML runtime?

In production, you’ll likely want infrastructure-aware metrics, logs, and autoscaling that adapt to actual usage, not limited to what BentoML provides by default.

-

Is multi-cloud or BYOC (Bring your own cloud) GPU flexibility important to your team?

Some teams want full control over cloud costs and GPU usage. Bring Your Own Cloud (BYOC) setups let you run on your own infrastructure without giving up developer experience.

-

Do you want to build an internal AI platform, not only an endpoint?

For teams building long-term ML infrastructure, it's useful to have secure runtimes, RBAC, CI/CD, and the ability to scale across multiple apps and teams.

Next, we’ll look at 6 BentoML alternatives that support some or all of these needs in their own way.

If you’re looking for a platform that handles more than inference alone, these six alternatives give you different ways to deploy, scale, and manage your AI and ML workloads.

So, choose the best based on your team’s goals, infrastructure setup, and how much flexibility you want in production.

1. Northflank – Run your AI models and full applications in one place with autoscaling and support for your own GPUs

If you’re looking for something that supports both model serving and broader application infrastructure, Northflank brings it together in one platform. You can deploy your AI workloads alongside your APIs, databases, background jobs, and more, all with built-in autoscaling and monitoring.

See some of what you can do with Northflank:

- Run AI/ML jobs (training, inference) with attached GPUs

- Deploy custom Docker images (including Jupyter notebooks, APIs, or background jobs)

- Deploy APIs, workers, Postgres, and Redis side-by-side

- Integrate with your existing ML workflows using CI/CD pipelines and custom Docker builds

- Autoscaling for jobs and services

- Built-in logs, metrics, RBAC, and CI/CD pipelines

- Run on your own cloud with BYOC GPU support and fast provisioning

Pricing highlights:

- Free plan available for testing and small projects

- Pay-as-you-go with no monthly commitment

- Enterprise pricing available for larger teams and advanced setups

(See full pricing details)

Go with Northflank if you want one platform to run both your AI models and full applications, with autoscaling, built-in observability, and support for your own GPUs.

💡See how teams use Northflank in production:

How Cedana deploys GPU-heavy workloads with secure microVMs and Kubernetes

Cedana runs live-migration and snapshot/restore of GPU jobs, using Northflank’s secure runtimes on Kubernetes

Modal is built around running Python functions in the cloud, making it easy to serve models or run inference without managing infrastructure. It’s suited for developers who want to write minimal code and quickly scale compute as needed.

See what you can do with Modal:

- Run inference functions with GPU support

- Define logic using Python decorators and functions

- Autoscaling handled behind the scenes

- Ideal for short-lived or stateless tasks

Pricing: Free tier available. Paid plans are based on compute time and storage usage.

Go with Modal if you want a Python-first way to deploy and scale inference jobs, with minimal infrastructure setup.

If you're comparing platforms, you might also want to check out the 6 best Modal alternatives for ML, LLMs, and AI app deployment.

RunPod makes it easy to spin up GPU-backed containers for AI training or inference. You can choose from public, secure, or private nodes and run your own Docker containers with access to GPUs.

See what you can do with RunPod:

- Launch GPU containers for training or inference

- Use public nodes or bring your own secure pods

- Run Jupyter notebooks or custom Docker images

- Integrate with your existing ML workflows

Pricing: Pay-as-you-go based on GPU type and runtime. No fixed monthly fees.

Choose RunPod if you want fast, cost-flexible access to GPU containers for ML workloads with minimal setup.

If you're looking at other platforms that go beyond containerized GPU workloads, see these RunPod alternatives for AI/ML deployment.

If your team is already using Ray or building distributed applications, Anyscale gives you a managed environment to run workloads at scale. It's designed for tasks that benefit from distributed parallelism, like model training, batch jobs, or hyperparameter tuning.

What you can do with Anyscale:

- Launch Ray clusters on AWS in a managed environment

- Run distributed ML workloads and scale out with autoscaling

- Use Ray Serve for model inference and microservice APIs

- Collaborate across users and teams with shared workspaces

- Monitor and track experiments with Ray dashboards

Pricing: Free Developer tier available. Paid plans include usage-based billing for compute and cluster management.

Go with Anyscale if you’re building distributed ML pipelines with Ray and want managed infrastructure built around that ecosystem.

If you're comparing platforms for distributed model training or inference, check out these Anyscale alternatives for AI/ML deployment.

Baseten focuses on helping teams deploy and serve ML models quickly using a web-based UI and built-in observability. It’s useful if you’re working with popular open-source models and want minimal infrastructure setup.

See what you can do with Baseten:

- Deploy models from Hugging Face or your own training pipeline

- Use pre-built templates for models like Llama and Whisper

- Built-in monitoring and performance metrics

- Simple interface for deploying REST endpoints

Pricing: Free tier available. Paid plans are usage-based, with limits on requests and concurrency.

Go with Baseten if you want a low-code way to deploy open-source models, with built-in monitoring and templates for fast iteration.

If you’re comparing Baseten with other tools in this space, check out these Baseten alternatives for AI/ML model deployment.

KServe is an open-source Kubernetes-based model serving tool maintained by the Kubeflow project. It’s best suited for teams that already have Kubernetes expertise and want full control over how models are deployed, scaled, and versioned in production.

See what you can do with KServe:

- Serve models using frameworks like TensorFlow, PyTorch, XGBoost, and ONNX

- Manage prediction routing, model canarying, and rollout strategies

- Scale with Kubernetes-native autoscaling and inference graphs

- Deploy multiple model versions with traffic splitting

Pricing: Free and open source. You’ll need to run and maintain it yourself on your own Kubernetes infrastructure.

Go with KServe if you want OSS model serving and already have the Kubernetes knowledge to operate it.

After going through the top alternatives, below is a side-by-side comparison to help you assess how each platform performs in key areas like model serving, GPU support, autoscaling, and broader application deployment.

This table gives you the context you need to bring options back to your team, particularly if you're thinking beyond basic inference.

| Platform | Model serving | GPU scaling | Autoscaling | Deploy non-AI apps | Monitoring & logs | CI/CD integration |

|---|---|---|---|---|---|---|

| Northflank | Supports both training and inference with custom Docker images or prebuilt templates | Built-in support for attached GPUs and BYOC GPU provisioning | Native autoscaling for both jobs and services | Supports full applications like your APIs, databases, workers, schedulers | Built-in logs and metrics with dashboard access | Built-in CI/CD pipelines with Git-based triggers |

| BentoML | Inference-focused, container-based model serving | Requires manual setup or third-party tools | Manual configuration only | Limited to model endpoints; no support for broader app deployment | Basic logs, limited observability | No built-in CI/CD support |

| Modal | Python-first inference with minimal setup | GPU support handled internally, no custom provisioning | Autoscaling for functions is abstracted and automatic | Focused on single-function inference workloads | Basic monitoring through internal interface | No native CI/CD, minimal deployment controls |

| RunPod | Containerized model serving for training or inference | GPU scaling available per workload, with templates | Limited autoscaling, manual scaling may be required | Basic container support; not designed for full application stacks | Requires external setup for full observability | No built-in CI/CD |

| Anyscale | Distributed model serving and training via Ray | GPU scaling supported within Ray workloads | Limited autoscaling, needs manual Ray setup | Not intended for full app deployment | Ray dashboard provides some observability; limited | CI/CD integration not built in |

| Baseten | GUI-based model deployment with prebuilt templates | GPU support available during deployment | Autoscaling support for deployed models | Not designed for deploying broader services or APIs | Built-in observability tools specific to model performance | No full CI/CD, deployment happens via GUI |

| KServe | Open-source serving for multiple model types | GPU support available with proper configuration | Can autoscale via Kubernetes, but setup is manual | Requires custom YAMLs for services outside ML models | Requires external observability stack like Prometheus/Grafana | CI/CD setup is external and user-managed |

BentoML is focused on model serving, but when your team needs to go beyond inference, like running APIs, databases, or background jobs alongside your models, that’s where Northflank stands out.

Northflank gives you a single platform where you can deploy both your AI workloads and any other workloads, so you’re not restricted to serving models alone. You also get built-in autoscaling, monitoring, and production-grade infrastructure controls.

What makes Northflank a complete alternative:

- Deploy models, APIs, Redis, Postgres, and workers in one place

- Run GPU-powered jobs for inference, fine-tuning, and batch processing

- Autoscaling is built-in, no need for manual configuration

- Built-in monitoring, logs, cost tracking, RBAC, and GitOps pipelines

- Supports both AI/ML and traditional web workloads together

- SOC 2-aligned: deploy to private clusters, with secure runtimes and audit logs

Northflank isn’t limited to inference. You can run your entire AI stack and full application infrastructure together with autoscaling, monitoring, and BYOC GPU support built in.

These common questions come up when teams are checking out BentoML and looking at broader deployment options.

1. What is BentoML used for?

BentoML is a framework for packaging and serving machine learning models. It helps developers deploy models as REST or gRPC services with Python-friendly tooling.

2. Is BentoML good?

Yes, it’s well-suited for teams that want a lightweight, Python-native way to serve models locally or in simple cloud setups. For larger-scale deployments or teams that need autoscaling, monitoring, and full application infrastructure, platforms like Northflank are often used.

3. Is BentoML open-source?

Yes, BentoML is open-source under the Apache 2.0 license. You can self-host it or use it as part of a larger MLOps toolchain.

4. What are the advantages of BentoML?

It’s Python-first, easy to get started with, and integrates well with many ML frameworks. That said, it focuses mainly on model serving. Teams needing to manage full application workloads or deploy in production environments often look to tools like Northflank for additional infrastructure control.

5. What are machine learning ops?

Machine learning operations (MLOps) is the practice of managing the lifecycle of ML models, from training and deployment to monitoring and retraining. BentoML covers serving, but broader MLOps may involve tools for orchestration, pipelines, observability, and compliance.

BentoML is great if you want a Python-based model server that keeps things simple. However, once you need autoscaling, full observability, or the ability to deploy APIs, jobs, and databases alongside your models, you’ll need more than a model server.

That’s where platforms like Northflank come in. You can run both AI workloads and full applications on a unified platform, with built-in CI/CD, logs, metrics, and autoscaling, plus support for GPUs and BYOC when needed.