If you need a powerful yet simple way to run your own AI document assistant, AnythingLLM is one of the best open-source tools you can use. It lets you chat with your files, connect data sources, and query them using LLMs, all from a clean web interface.

With Northflank, you can deploy AnythingLLM in minutes using a one-click template or set everything up manually. Northflank takes care of scaling, networking, and infrastructure while you focus on working with your data.

Before you begin, create a Northflank account. This guide covers:

- Deploying AnythingLLM with a one-click template on Northflank

- Deploying AnythingLLM manually on Northflank

What is Northflank?

Northflank is a developer platform that makes it easy to build, deploy, and scale applications, databases, jobs, and even GPU workloads. It abstracts Kubernetes with smart defaults, giving you production-ready deployments without losing flexibility.

You can launch AnythingLLM on Northflank in just a few minutes using the ready-made template. This option is ideal if you want to quickly spin it up or demo the platform without performing a manual setup.

The AnythingLLM deployment on Northflank includes:

- 1 persistent volume for storing configs, uploads, and local data

- 1 secret group for managing environment variables (API keys, embeddings provider credentials)

- Deployment of AnythingLLM from the Docker image mintplexlabs/anythingllm:latest

- Visit the AnythingLLM template on Northflank

- Click “Deploy”

- Northflank will automatically:

  - Create a project, volume, secret group, and service

  - Deploy AnythingLLM with the required configuration

  - Expose a public URL for your app

- Once live, open the URL to access the AnythingLLM web UI in your browser

Note: You’ll need to add API keys for your LLM and embedding providers (e.g., OpenAI, Anthropic, Cohere).

If you want more flexibility or need to customize your setup, you can deploy AnythingLLM manually. This approach provides complete control over configuration and integration.

Note: You can also customize Northflank's one-click deploy templates.

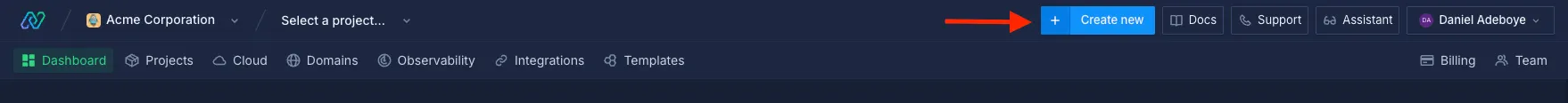

Log in to your Northflank dashboard and click the “Create new” button (+ icon) in the top right corner. Then select “Project” from the dropdown.

Projects serve as workspaces that group together related services, making it easier to manage multiple workloads and their associated resources.

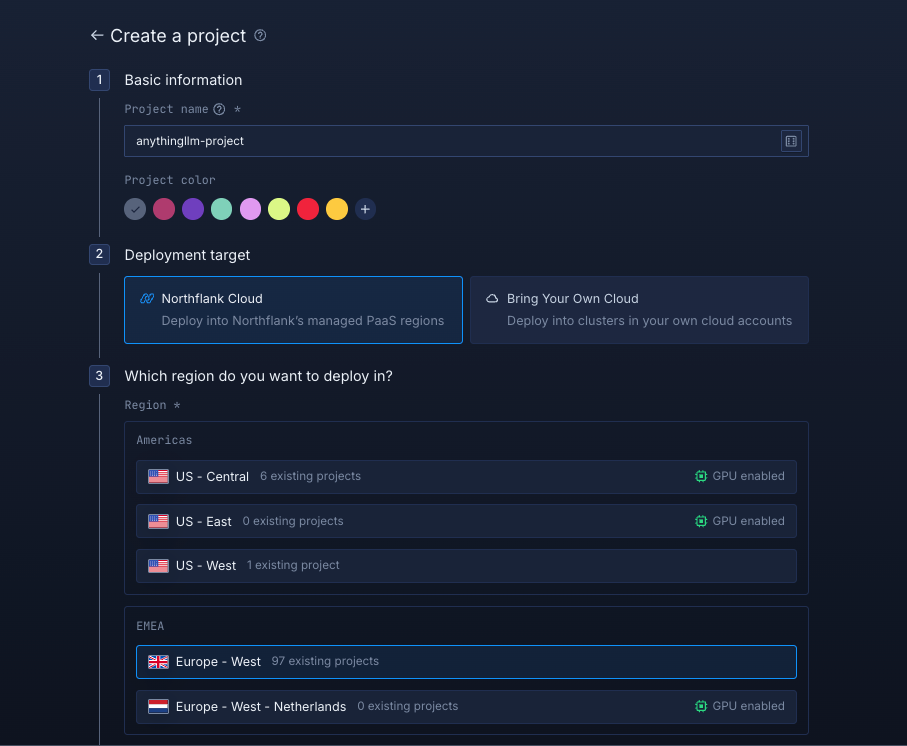

You’ll need to fill out a few details before moving forward.

- Enter a project name, such as anythingllm-project, and optionally pick a color for quick identification in your dashboard.

- Select Northflank Cloud as the deployment target. This uses Northflank’s fully managed infrastructure, so you do not need to worry about Kubernetes setup or scaling.

  (Optional) If you prefer to run on your own infrastructure, you can select Bring Your Own Cloud and connect AWS, GCP, Azure, or on-prem resources.

- Choose the region closest to your users to minimize latency.

- Click Create project to finalize the setup.

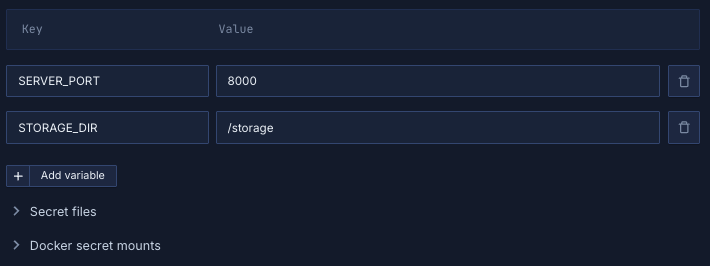

Next, navigate to the Secrets tab and click "Create Secret Group." Name it something easy to recognize, such as anythingllm-secrets. This group will hold all the environment variables required by AnythingLLM. You can find the full list of supported variables in the AnythingLLM docs.

If you would rather not look up and configure each environment variable yourself, you can start with the preconfigured values below:

SERVER_PORT="8000"

STORAGE_DIR="/storage"

Notes about these values:

- SERVER_PORT: Defines the internal port that AnythingLLM will use. This should match the port you expose in your service configuration.

- STORAGE_DIR: Specifies where AnythingLLM will store its data. This should match the mount path of your persistent volume.
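Because both values have to agree with settings made elsewhere in the dashboard (the exposed port and the volume mount path), a quick local check can catch a mismatch before you deploy. The following is a minimal sketch in Python, not part of Northflank or AnythingLLM: the helper names `parse_env` and `check_settings` are hypothetical, and the port and mount path passed in are simply the values used in this guide.

```python
# Secret group values from this guide, pasted as plain text.
ENV_TEXT = '''
SERVER_PORT="8000"
STORAGE_DIR="/storage"
'''


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY="value" lines into a dict, skipping blank lines and comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a KEY=value line: {raw!r}")
        env[key.strip()] = value.strip().strip('"')
    return env


def check_settings(env: dict[str, str], exposed_port: int, mount_path: str) -> list[str]:
    """Return a list of mismatches between the env values and the service settings."""
    problems = []
    if env.get("SERVER_PORT") != str(exposed_port):
        problems.append(
            f"SERVER_PORT is {env.get('SERVER_PORT')!r} but the service exposes port {exposed_port}"
        )
    # Ignore a trailing slash so "/storage/" and "/storage" compare equal.
    if env.get("STORAGE_DIR", "").rstrip("/") != mount_path.rstrip("/"):
        problems.append(
            f"STORAGE_DIR is {env.get('STORAGE_DIR')!r} but the volume is mounted at {mount_path!r}"
        )
    return problems


if __name__ == "__main__":
    env = parse_env(ENV_TEXT)
    issues = check_settings(env, exposed_port=8000, mount_path="/storage")
    for line in issues or ["settings are consistent"]:
        print(line)
```

If you change the port or mount path later, update the arguments to `check_settings` so the check reflects what is actually configured on the service and volume.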

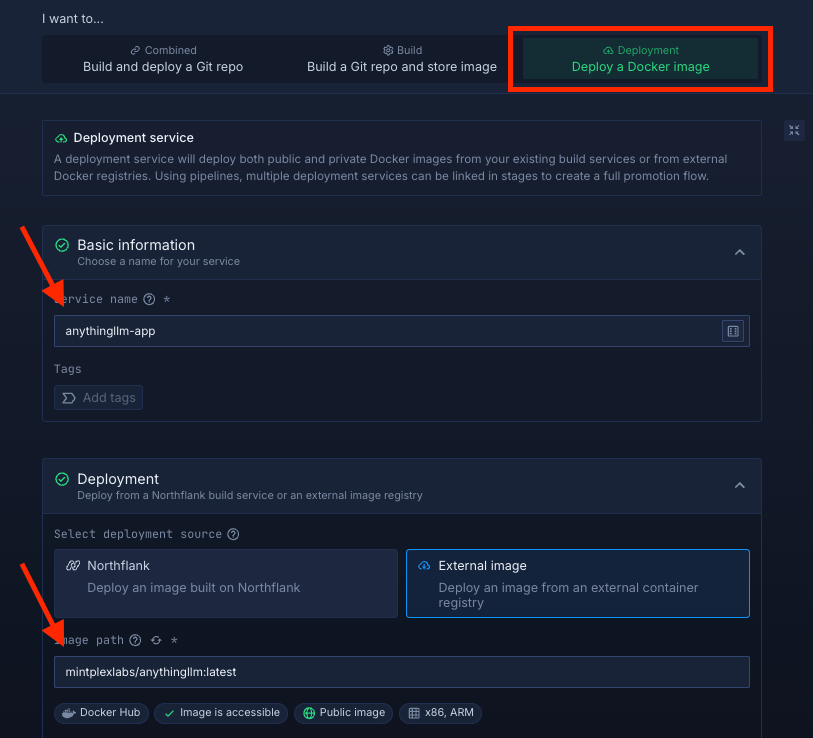

Within your project, navigate to the Services tab in the top menu and click “Create New Service”. Select Deployment and give your service a name such as anythingllm-app.

For the deployment source, choose External image and enter the official AnythingLLM Docker image: mintplexlabs/anythingllm:latest.

Select compute resources

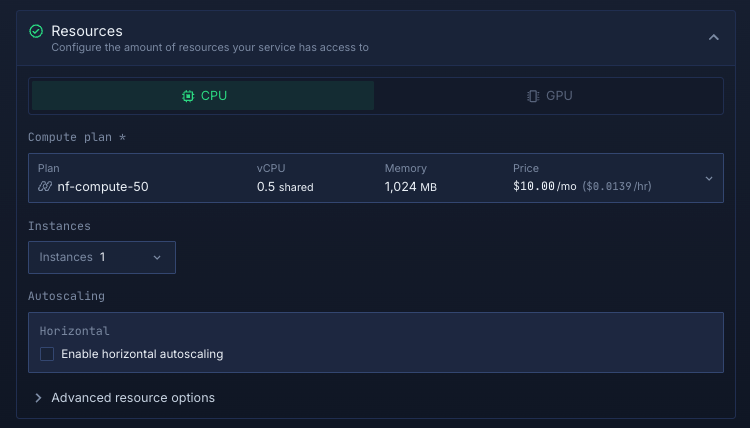

Choose the compute size that best matches your workload:

- Small plans are fine for testing or lightweight usage.

- Larger plans are recommended for production, as AnythingLLM can be resource-intensive under real-world traffic.

You can adjust resources later, so you can start small and scale up as your workload grows.

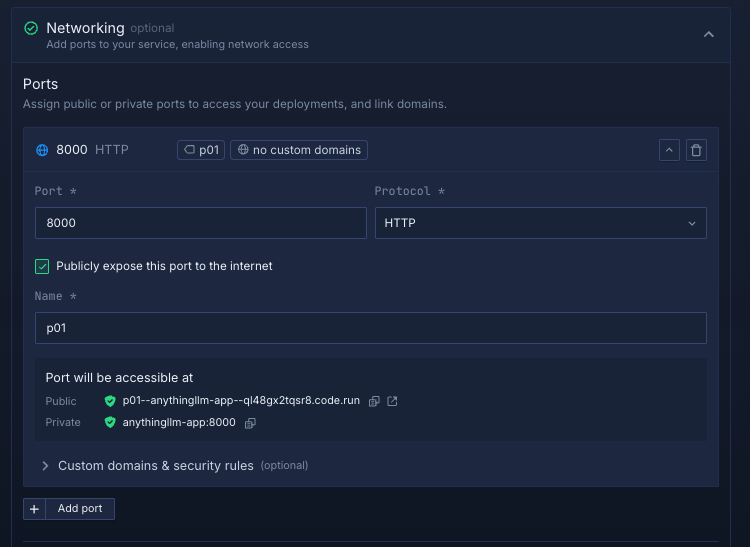

Set up a port so your app is accessible:

- Port: 8000

- Protocol: HTTP

- Public access: enable this to let people access your AnythingLLM app from the internet

Northflank will automatically generate a secure, unique public URL for your service. This saves you from having to manage DNS or SSL certificates manually.

Deploy your service

When you’re satisfied with your settings, click “Create service.” Northflank will pull the image, provision resources, and deploy AnythingLLM.

Once the deployment is successful, you’ll see your service’s public URL at the top right corner, e.g.: p01--anythingllm-app--lppg6t2b6kzf.code.run
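To confirm the service is answering before you open it in a browser, you can probe the public URL from your own machine. This is a small sketch using only the Python standard library. It assumes the generated hostname is served over HTTPS, and the helper names `service_url` and `is_up` are hypothetical; it only checks that the web server responds, not that AnythingLLM is fully configured.

```python
import urllib.request


def service_url(host: str) -> str:
    """Build a URL from a hostname copied from the dashboard (HTTPS is an assumption)."""
    host = host.strip()
    if host.startswith(("http://", "https://")):
        return host
    return f"https://{host}"


def is_up(url: str, timeout: float = 10.0, opener=urllib.request.urlopen) -> bool:
    """Return True if the URL answers with a 2xx or 3xx status."""
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # Covers URLError, HTTPError (4xx/5xx), DNS failures, and timeouts.
        return False


# Example, using the sample hostname from this guide:
#   url = service_url("p01--anythingllm-app--lppg6t2b6kzf.code.run")
#   print(url, "is up" if is_up(url) else "is not reachable yet")
```

The `opener` parameter exists only so the function can be exercised without network access; in normal use you can leave it at its default.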

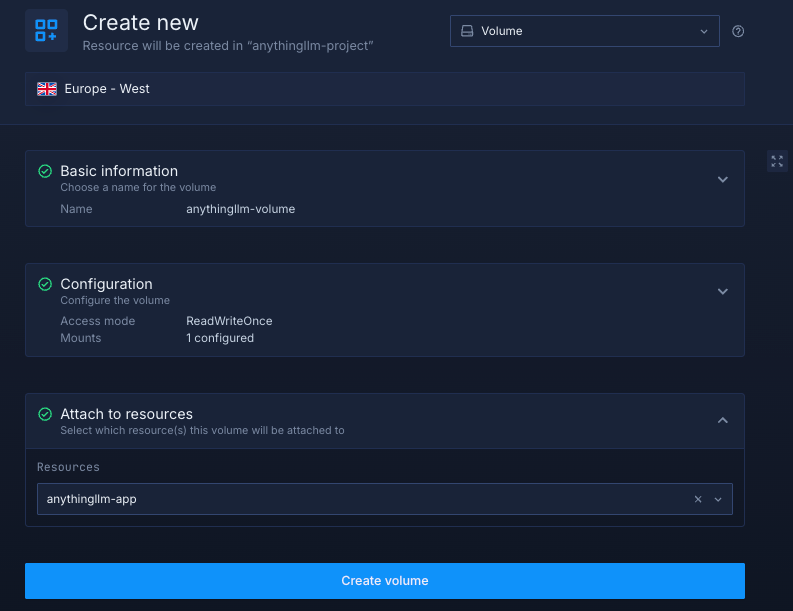

Inside your project, go to the Volumes tab and click Create new volume.

- Name it anythingllm-volume

- Choose a storage type (NVMe is recommended)

- Choose a storage size (start small for testing, scale up for production)

- Set the volume mount path to /storage (the directory used by AnythingLLM to store data)

- Attach the volume to your anythingllm-app service to enable persistent storage.

Click Create volume to finalize.

After creating the volume, restart your service. Once the restart completes, open the service’s public URL to access your deployed AnythingLLM app.

Deploying AnythingLLM on Northflank gives you a production-ready way to run your AI-powered document assistant without managing infrastructure.

Whether you choose the one-click template for speed or the manual setup for full control, Northflank provides the scalability and reliability, while AnythingLLM powers your AI-driven search and chat.

Together, they make it easy to ingest documents, query data, and run secure LLM applications at scale.