If you need a simple and private interface for working with large language models, Open WebUI provides a familiar chat experience that can connect to Ollama or any OpenAI-compatible API. It supports features like document upload, retrieval-augmented chat, image generation, and model management, making it a good option for teams and developers who want a secure internal AI workspace.

With Northflank, you can deploy Open WebUI in minutes using a one-click stack template or configure the setup manually. Northflank manages networking, storage, scaling, and environment configuration so you can focus on using the UI rather than maintaining infrastructure.

Open WebUI is an open-source interface for interacting with large language models through a browser-based chat environment. It provides authentication, persistent conversation history, document ingestion, and optional integrations such as browsing, voice input, and image generation. You can connect it to self-hosted backends like Ollama or external APIs that follow the OpenAI format.

Before getting started, create a Northflank account. This guide covers two ways to deploy Open WebUI:

- Deploying Open WebUI with a one-click template on Northflank

- Deploying Open WebUI manually on Northflank

What is Northflank?

Northflank is a platform that allows developers to build, deploy, and scale applications, services, databases, jobs, and GPU workloads on any cloud through a self-service approach. For DevOps and platform teams, Northflank provides a powerful abstraction layer over Kubernetes, enabling templated, standardized production releases with intelligent defaults while maintaining the configurability you need.

Deploying Open WebUI with a one-click template on Northflank

The fastest way to run Open WebUI on Northflank is through the one-click deploy template. It provisions the project, service, persistent storage, and required environment variables automatically. This is ideal if you want to launch Open WebUI quickly without manually setting up everything.

The Open WebUI stack template includes:

- A deployment service running the official Open WebUI image

- A persistent volume mounted at `/app/backend/data`

- A secret group containing the required environment variables

This provides a ready-to-use, persistent chat environment that connects directly to your model runtime.

- Visit the Open WebUI template on Northflank

- Click Deploy Open WebUI now

- Under the Advanced section, enter your `OLLAMA_BASE_URL` if you are connecting to Ollama, or your `OPENAI_API_BASE_URL` and `OPENAI_API_KEY` if you are connecting to an OpenAI-compatible API

- Click Deploy stack

- Wait for the project, service, volume, and secrets to be created

- Open the service page and use the generated public URL to access the UI

You can now sign in, configure your workspace, and begin interacting with your connected models.

Deploy Ollama in minutes

Use Northflank’s one-click template to deploy Ollama with GPU, storage, and a public API. No manual setup required.

Deploying Open WebUI manually on Northflank

If you want more control over configuration, you can deploy Open WebUI manually. This lets you adjust compute plans, environment variables, and service behaviour.

You can also modify the one-click template if you want to extend or customise the default deployment.

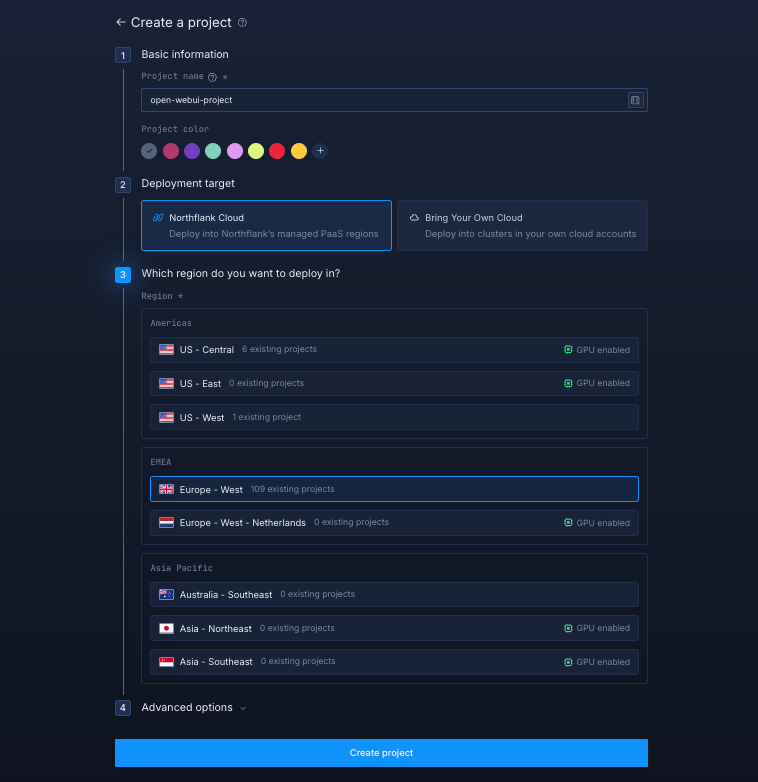

Log in to your Northflank dashboard and click the “Create new” button (+ icon) in the top-right corner, then select “Project” from the dropdown.

Projects serve as workspaces that group together related services, making it easier to manage multiple workloads and their associated resources.

You’ll need to fill out a few details before moving forward.

- Enter a project name, such as `open-webui-project`, and optionally pick a colour for quick identification in your dashboard.

- Select Northflank Cloud as the deployment target. This uses Northflank’s fully managed infrastructure, so you do not need to worry about Kubernetes setup or scaling. (Optional) If you prefer to run on your own infrastructure, you can select Bring Your Own Cloud and connect AWS, GCP, Azure, or on-prem resources.

- Choose a region closest to your users to minimise latency.

- Click Create project to finalise the setup.

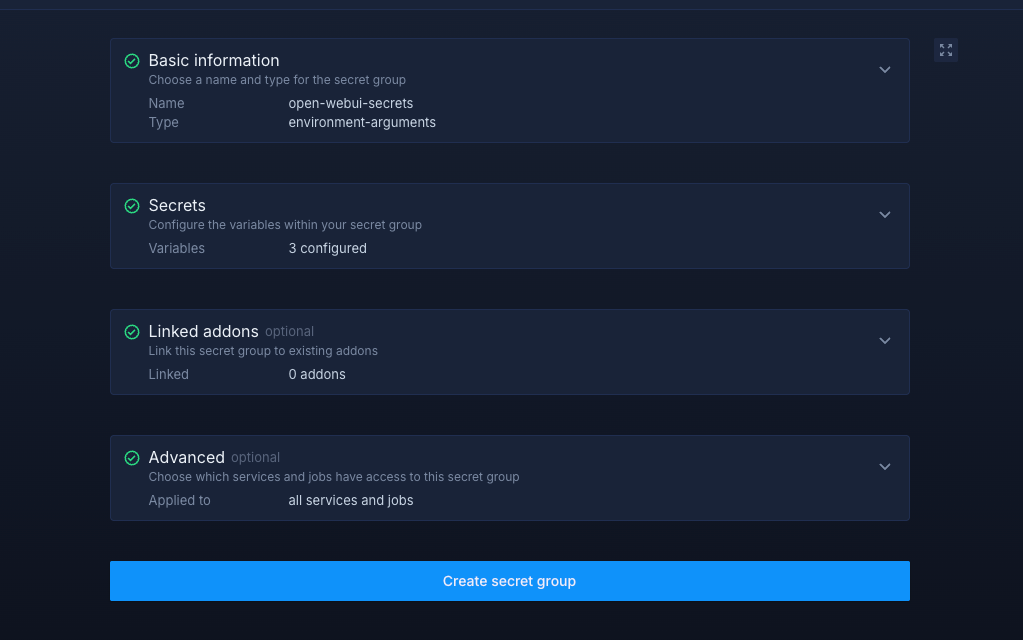

Next, navigate to the Secrets tab and click “Create Secret Group”. Name it something easy to recognise, such as `open-webui-secrets`. This group will hold the environment variables your Open WebUI service needs. You can find the full list of supported variables in the Open WebUI documentation.

If you don’t want to search through the full list, start with the variables below and replace each placeholder with your own value:

OLLAMA_BASE_URL=XXXXXXXXXXX

OPENAI_API_BASE_URL=XXXXXXXXXXX

OPENAI_API_KEY=XXXXXXXXXXX

Notes on the values:

- `OLLAMA_BASE_URL` should point to your Ollama instance URL.

- `OPENAI_API_BASE_URL` is optional and only needed if you're connecting to an OpenAI-compatible provider.

- `OPENAI_API_KEY` is required when using models served through an OpenAI-compatible API. Leave it empty if you only plan to use local models from your Ollama instance.
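Before saving the secret group, it can help to check your values against the rules in these notes. The following is a minimal Python sketch; `check_webui_env` is a hypothetical helper that simply encodes the notes (plus a basic http/https check) and is not an official Open WebUI validator. It also assumes that setting only `OPENAI_API_KEY` is acceptable because Open WebUI falls back to a default OpenAI endpoint when `OPENAI_API_BASE_URL` is empty.

```python
# Hypothetical pre-flight check for the Open WebUI connection variables.
# The rules mirror the notes on the values; this is not an official validator.

PLACEHOLDER = "XXXXXXXXXXX"
URL_VARS = ("OLLAMA_BASE_URL", "OPENAI_API_BASE_URL")


def check_webui_env(env: dict[str, str]) -> list[str]:
    """Return human-readable problems; an empty list means the values look usable."""
    values = {
        name: env.get(name, "").strip()
        for name in ("OLLAMA_BASE_URL", "OPENAI_API_BASE_URL", "OPENAI_API_KEY")
    }
    problems: list[str] = []

    # Values copied from the template but never replaced.
    for name, value in values.items():
        if value == PLACEHOLDER:
            problems.append(f"{name} still contains the placeholder value")

    # At least one backend is needed. A key on its own is accepted on the
    # assumption that Open WebUI then uses its default OpenAI endpoint.
    if not any(values.values()):
        problems.append("set OLLAMA_BASE_URL or the OPENAI_API_* variables")

    # Base URLs should be absolute http(s) URLs.
    for name in URL_VARS:
        value = values[name]
        if value and value != PLACEHOLDER and not value.startswith(("http://", "https://")):
            problems.append(f"{name} should start with http:// or https://")

    # An OpenAI-compatible provider needs a key.
    if values["OPENAI_API_BASE_URL"] and not values["OPENAI_API_KEY"]:
        problems.append("OPENAI_API_KEY is required when OPENAI_API_BASE_URL is set")

    return problems
```

For example, `check_webui_env({"OLLAMA_BASE_URL": "http://ollama:11434"})` returns an empty list. Here `ollama` is a hypothetical internal hostname and 11434 is Ollama's default port.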

Once you’ve added these variables, click Create Secret Group. Then make sure the group is attached to your Open WebUI service (which you’ll create in the next step) so the variables are available at runtime.
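To confirm that the backend URLs actually respond, you could probe each configured backend. This sketch assumes Ollama's `GET /api/tags` endpoint (which lists locally available models) and the common OpenAI-compatible convention of `GET {base}/models` with a Bearer token; your provider may differ. The function names are hypothetical. Run it from a machine that can reach those addresses, since an internal service URL may only resolve from inside your Northflank project.

```python
import urllib.request

# Hypothetical connectivity probe. Assumes Ollama's GET /api/tags endpoint and the
# common OpenAI-compatible GET {base}/models endpoint with Bearer authentication.


def build_probe_requests(env: dict[str, str]) -> list[tuple[str, urllib.request.Request]]:
    """Build one (label, request) pair per configured backend without any network I/O."""
    probes = []
    ollama = env.get("OLLAMA_BASE_URL", "").strip().rstrip("/")
    if ollama:
        # Ollama lists the models it has pulled locally.
        probes.append(("ollama", urllib.request.Request(f"{ollama}/api/tags")))

    openai_base = env.get("OPENAI_API_BASE_URL", "").strip().rstrip("/")
    openai_key = env.get("OPENAI_API_KEY", "").strip()
    if openai_base:
        headers = {"Authorization": f"Bearer {openai_key}"} if openai_key else {}
        probes.append(
            ("openai", urllib.request.Request(f"{openai_base}/models", headers=headers))
        )
    return probes


def run_probes(env: dict[str, str], timeout: float = 10.0) -> dict[str, str]:
    """Send each probe and record the HTTP status or the error that occurred."""
    results = {}
    for label, request in build_probe_requests(env):
        try:
            with urllib.request.urlopen(request, timeout=timeout) as response:
                results[label] = f"HTTP {response.status}"
        except OSError as exc:  # URLError, HTTPError, and timeouts all derive from OSError
            results[label] = f"failed: {exc}"
    return results
```

Keeping request construction separate from sending makes it easy to inspect exactly which URLs and headers will be used before any traffic leaves your machine. `run_probes` returns a dict mapping each backend label to either an HTTP status or an error message.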

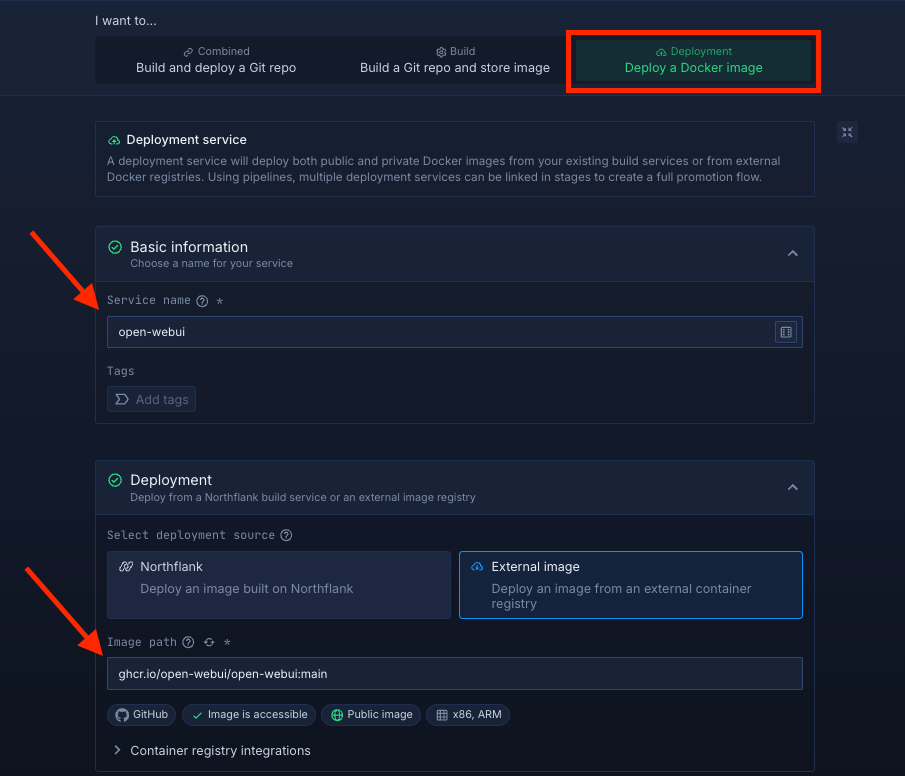

Within your project, navigate to the Services tab in the top menu and click “Create New Service”. Select Deployment and give your service a name, such as `open-webui`.

For the deployment source, choose External image and enter the official Open WebUI image: `ghcr.io/open-webui/open-webui:main`.

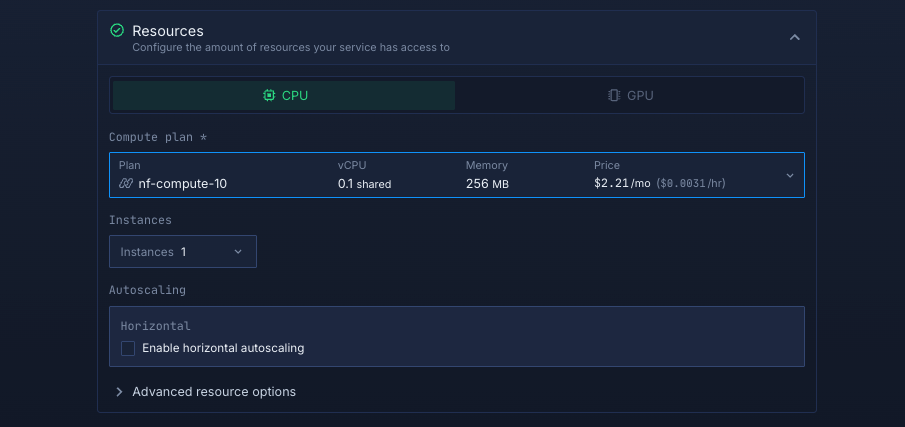

Select compute resources

Choose the compute size that best matches your workload:

- Small plans are fine for testing or lightweight usage.

- Larger plans are recommended for production.

Note: You can adjust resources later, so you can start small and scale as needed.

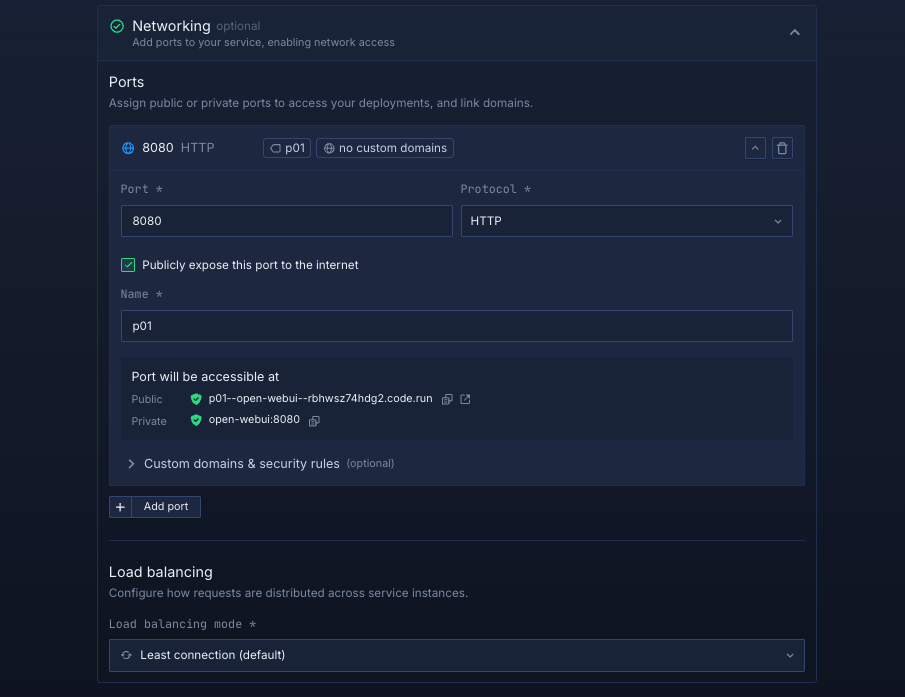

Set up a port so your app is accessible:

- Port: `8080`

- Protocol: `HTTP`

- Public access: enable this to let people access your Open WebUI instance from the internet

Northflank will automatically generate a secure, unique public URL for your service. This saves you from having to manage DNS or SSL certificates manually.

Deploy your service

When you’re satisfied with your settings, click “Create service.” Northflank will pull the image, provision resources, and deploy Open WebUI.

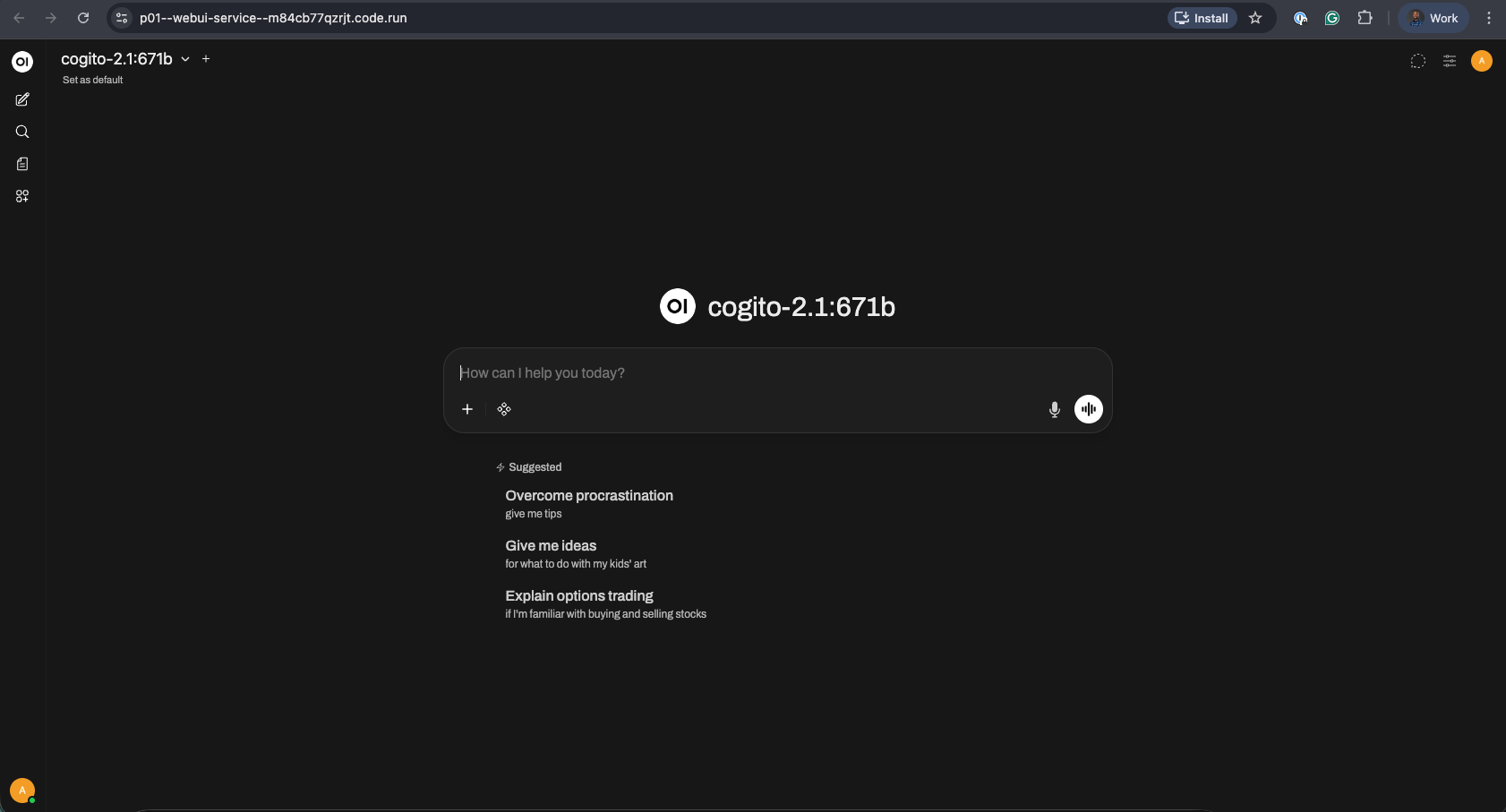

Once the deployment succeeds, you’ll see your service’s public URL in the top-right corner, for example `p01--open-webui--lppg6t2b6kzf.code.run`.
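To confirm that the public URL is serving traffic from outside Northflank, a short script can help. This is a sketch using only the Python standard library; `normalize_public_url` and `is_reachable` are hypothetical helper names, and treating any 2xx or 3xx response as "up" is an assumption rather than an Open WebUI health-check contract.

```python
import urllib.request

# Hypothetical smoke test for the public URL shown in the Northflank dashboard.


def normalize_public_url(host: str) -> str:
    """Turn a bare hostname into an absolute URL, defaulting to HTTPS."""
    host = host.strip().rstrip("/")
    if not host.startswith(("http://", "https://")):
        host = "https://" + host
    return host


def is_reachable(url: str, timeout: float = 10.0) -> bool:
    """Return True if the URL answers with a 2xx or 3xx status (redirects are followed)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:  # DNS failures, TLS errors, HTTP error statuses, and timeouts
        return False
```

Pass the hostname from your own dashboard, for example `is_reachable(normalize_public_url("p01--open-webui--lppg6t2b6kzf.code.run"))`, substituting your service's host.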

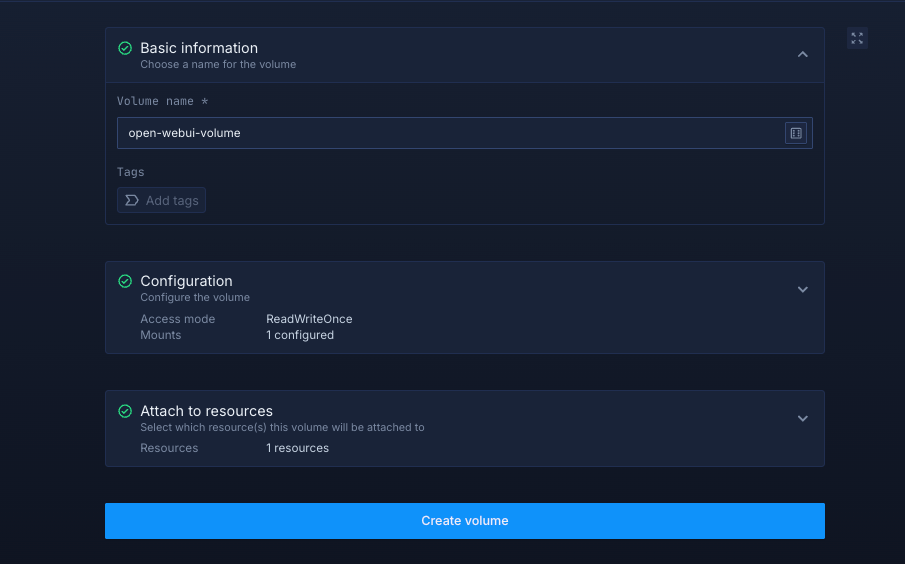

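Without a volume, anything Open WebUI writes inside the container, such as user accounts and chat history, is lost when the service restarts or redeploys. To add persistent storage, go to the Volumes tab inside your project and click Create new volume.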

- Name it `open-webui-volume`

- Choose an access mode and storage type (Single Read/Write and NVMe are recommended)

- Choose a storage size (start small for testing, scale up for production)

- Set the volume mount path to `/app/backend/data`

- Attach the volume to your `open-webui` service to enable persistent storage

- Click Create volume to finalise

After creating the volume, restart your service so the new mount takes effect. Once the restart completes, you can access your deployed service at its public URL.
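To check that data written to `/app/backend/data` really survives a restart, you could run a small script inside the container before and after restarting. This sketch assumes `python3` is available in the image (Open WebUI's backend is written in Python) and that you have a way to run commands in the container, such as a shell session. The marker file name and the `check_persistence` helper are hypothetical.

```python
import time
from pathlib import Path

# Hypothetical persistence check. Run it in the container once, restart the
# service, then run it again. The marker file name is made up for this check.
MARKER_NAME = ".persistence-check"


def check_persistence(data_dir: str = "/app/backend/data") -> str:
    """Write a timestamped marker on the first run; report whether it survived on later runs."""
    marker = Path(data_dir) / MARKER_NAME
    if marker.exists():
        return f"persistent: marker written at {marker.read_text().strip()} is still present"
    marker.parent.mkdir(parents=True, exist_ok=True)
    marker.write_text(str(int(time.time())))
    return "marker written; restart the service and run this check again"
```

Call `print(check_persistence())` once before the restart and once after. If the second run reports the marker as present, the volume is mounted correctly. Delete the marker file when you're done.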

Deploying Open WebUI on Northflank gives you a private, stable, and extensible interface for working with large language models. You can use the one-click template for a streamlined setup or configure everything manually if you want full control. With persistent storage, automatic networking, and simple backend integration, you have everything needed to build a secure internal AI workspace for yourself or your team.