Runpod vs Lambda vs Northflank: GPU cloud platform comparison

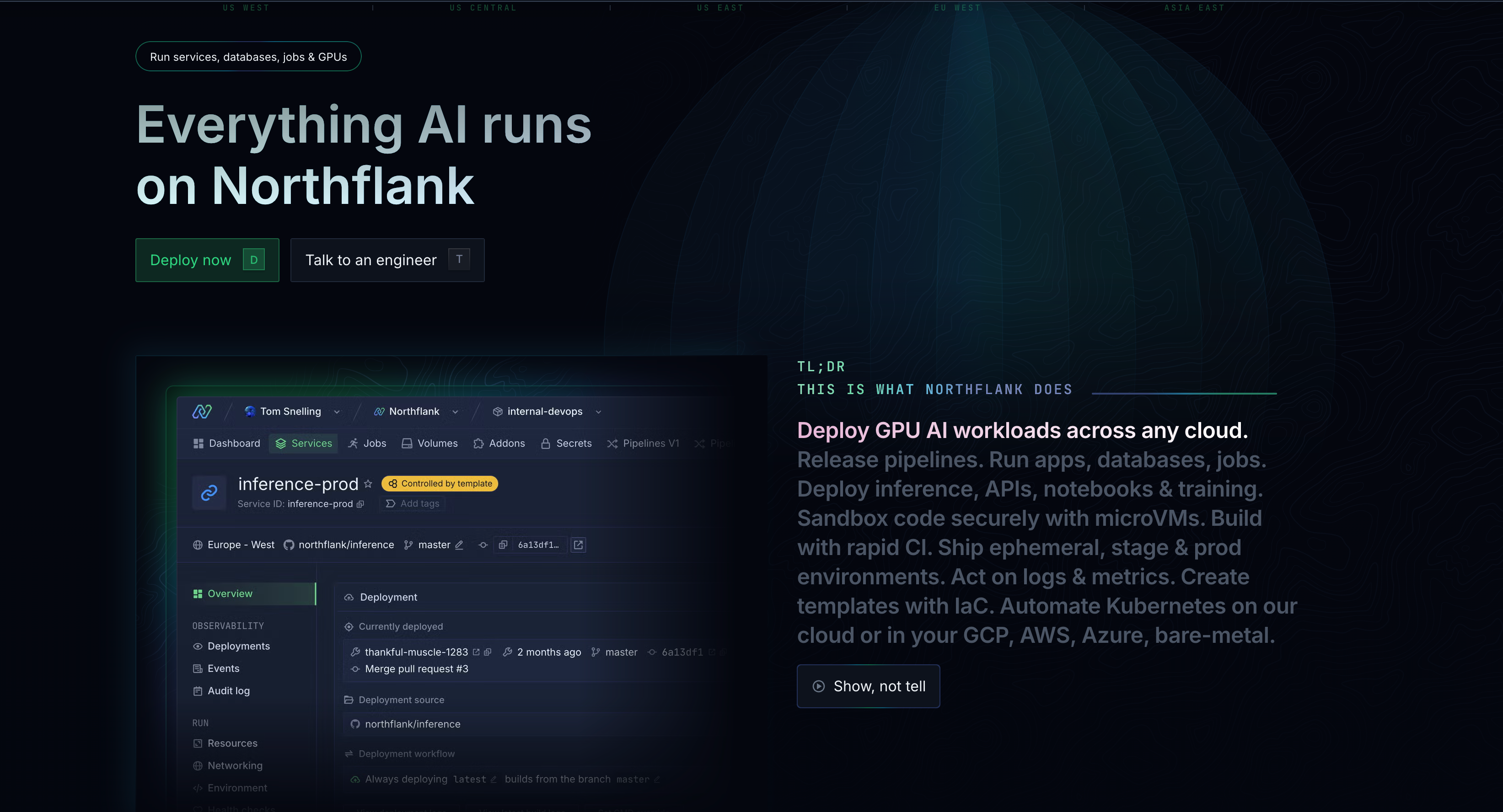

If you need serverless AI workflows with zero cold starts, Runpod provides managed infrastructure. For traditional GPU cloud with academic backing, Lambda Labs offers reliable compute with a decade-long AI focus. However, Northflank delivers better overall value with affordable GPU pricing (H100s at $2.74/hr) and enterprise features like deploying in your own cloud, plus a complete platform for both AI and non-AI workloads that reduces vendor complexity. Try the platform or speak with an engineer to see the difference.

You're comparing Runpod vs Lambda vs Northflank because you need GPU cloud infrastructure for your AI projects, and I understand that choosing between these three platforms can feel overwhelming with their different pricing models and features.

That's why I've put together this guide to simplify the process of finding your next GPU cloud platform by:

- Breaking down what you get with each platform

- Comparing their pricing

- Helping you determine which one delivers the best value for your specific needs and budget

One thing to keep in mind is that each platform takes a fundamentally different approach. Runpod focuses on serverless AI workflows, Lambda emphasizes traditional cloud with academic roots, and Northflank gives you a complete developer platform.

Let’s get into it.

We'll compare Runpod, Lambda, and Northflank based on their business models, pricing approaches, target audiences, and enterprise capabilities.

After looking at the table, you should be able to answer these questions:

- “Which platform's approach aligns best with my team's technical expertise and workflow?”

- “What pricing model works best for my usage patterns and budget?”

- “Do I need GPU access only or a complete development platform with additional services like databases, CI/CD pipelines, and APIs?”

- “What level of enterprise features and infrastructure control does my project require?”

If you can't fully answer these questions from the table alone, proceed to the next sections, where we go into each platform's specific features and capabilities in detail.

Now, look at the table:

| Feature | Runpod | Lambda | Northflank |
|---|---|---|---|
| Business model | AI-focused cloud platform with serverless capabilities | Traditional GPU cloud with AI-first heritage since 2012 | Full-stack developer platform with GPU orchestration |
| Primary target | AI developers and ML teams seeking managed serverless infrastructure | AI researchers, academic institutions, and enterprises needing traditional cloud | AI developers, ML teams, enterprises, and teams needing full-stack solutions |
| Pricing approach | Pay-per-second with Flex/Active worker options | Pay-per-minute with on-demand, reserved, and private cloud tiers | Per-second billing with flexible pricing and enterprise features included |
| GPU availability | 30+ GPU models (RTX 4090 to H100) across global regions | Latest NVIDIA models (B200, H200, H100, A100) with focus on hardware | 18+ GPU types including H100, H200, B200, A100, AMD MI300X across multiple clouds |
| Platform focus | Serverless AI optimization with FlashBoot and auto-scaling | Pure AI compute with pre-installed ML frameworks and inference APIs | Complete platform supporting both AI and non-AI workloads (databases, APIs, CI/CD) |
| Enterprise features | 99.9% uptime SLA, SOC2 certification in progress | SOC2 Type II compliant, private cloud options, academic partnerships | SOC2/HIPAA/ISO 27001 ready, BYOC deployment, secure microVM isolation |
| Infrastructure control | Managed infrastructure with AI-specific tools and templates | Traditional cloud instances with pre-configured environments | Full control with option to deploy in your own cloud infrastructure |
| Unique value proposition | Zero cold starts with FlashBoot technology and serverless scaling | Academic-trusted platform with decade-long AI expertise and inference APIs | Most affordable pricing with enterprise features and full-stack platform capabilities |

Maybe I have the power to read minds, but I know you might already be thinking:

“Hey! Can Northflank actually handle both my AI workloads and the rest of my application infrastructure without forcing me to jump from one platform to the other?”

The answer is “YES”. I’ll walk you through what Northflank is (if you’re hearing about it for the first time), what the platform offers, what the pricing is like, and why Northflank’s approach might save you both time and money compared to bringing together separate solutions.

Northflank is a comprehensive (you can also call it the “all-in-one”) developer platform that handles everything from GPU compute to databases, APIs, and CI/CD pipelines, allowing you to deploy your entire application stack either on Northflank’s managed cloud or in your own cloud infrastructure while using the platform layer.

That was a lot… I know. What I’m saying in simple terms is this:

Northflank is like getting your GPU compute, databases, APIs, and deployment tools all in one place. So, instead of using separate services for each piece of your project, you can build and run everything through Northflank, either on Northflank’s servers or on your own AWS/Google Cloud account.

So, unlike other platforms that focus solely on GPU access, Northflank treats GPU workloads as part of your broader development needs.

This means you can run your AI training on an H100, deploy your inference API, set up your databases, and manage your CI/CD pipelines all from the same platform.

Whether you're a founder at a startup that needs everything to “just work” or part of an enterprise that has to deploy in its own AWS/GCP/Azure account, Northflank adapts to your infrastructure preferences.

Now you have a sense of what Northflank is and what it offers, but there’s more. Let’s look at some of the key features that set Northflank apart from other GPU providers.

I've grouped them into three categories: GPU and compute capabilities, full-stack platform features, and enterprise and security.

- GPU and compute capabilities:

  - 18+ GPU types including H100, H200, B200, A100, and AMD MI300X

  - Deploy in your own cloud infrastructure (GCP, AWS, Azure, Oracle Cloud) while using Northflank's platform

  - Secure microVM isolation for multi-tenant workloads

  - Real-time interface with team collaboration and fine-grained permissions

- Full-stack platform features:

  - Support for both AI and non-AI workloads (databases, background jobs, APIs, CI/CD pipelines)

  - Native integrations with GitHub, GitLab, Bitbucket, and container registries

  - Multiple access methods: UI, API, CLI, JavaScript client, Infrastructure as Code, TypeScript SDK

  - Built-in monitoring, logging, and observability tools

- Enterprise and security:

  - SOC2/HIPAA/ISO 27001 compliance ready

  - Bring Your Own Cloud (BYOC) deployment options

  - Fine-grained access controls and team management

  - 99.9% uptime SLA

Notice that the major advantage here is that you’re getting more than GPU access: you’re getting a complete platform that can handle your entire development workflow, which often translates to significant cost savings because you need fewer vendors.

Northflank uses transparent per-second billing with no hidden fees, and the GPU pricing is often more affordable than that of specialized GPU providers.

Here are some GPU pricing examples:

- H100 (80GB): $2.74/hour

- H200 (141GB): $3.14/hour

- B200 (180GB): $5.87/hour

- A100 (40GB): $1.42/hour

- A100 (80GB): $1.76/hour

All pricing includes CPU, memory, and storage bundled together, so you won't be surprised by additional compute costs.
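To make per-second billing concrete, here is a minimal sketch that prorates the hourly rates listed above down to the second. The function name `per_second_cost` and the dictionary keys are hypothetical, and the sketch assumes simple linear proration with no minimum charge, which this article does not confirm.

```python
# Hourly rates copied from the pricing list above (USD per GPU-hour).
NORTHFLANK_RATES = {
    "H100-80GB": 2.74,
    "H200-141GB": 3.14,
    "B200-180GB": 5.87,
    "A100-40GB": 1.42,
    "A100-80GB": 1.76,
}


def per_second_cost(gpu: str, seconds: int, gpus: int = 1) -> float:
    """Estimated cost of running `gpus` GPUs for `seconds` seconds.

    Assumption: billing is prorated linearly per second with no minimum
    charge. The function and key names are illustrative only.
    """
    hourly = NORTHFLANK_RATES[gpu]
    return round(hourly / 3600 * seconds * gpus, 2)


if __name__ == "__main__":
    # A 95-minute fine-tuning run on a single H100.
    print(per_second_cost("H100-80GB", 95 * 60))
```

Under these assumptions, a job that stops after 95 minutes is charged for 95 minutes rather than two full hours, which is where per-second billing helps most on short or irregular runs.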

And the Platform plans:

- Developer Sandbox: Free for basic workloads and exploration

- Starter Plan: Pay-as-you-go with no commitments

- Pro Plan: Advanced features for growing teams

- Enterprise Plan: Custom solutions with BYOC deployment options

So, what’s the cost advantage? Enterprise features like Bring Your Own Cloud (BYOC) deployment, compliance support, and full-stack capabilities are included at no extra cost. This often reduces your total infrastructure expenses since you're not paying for multiple specialized platforms.

Let’s see the differences quickly:

Versus Runpod:

While Runpod is good at serverless AI workflows, Northflank provides broader platform capabilities that extend beyond AI workloads. You get affordable GPU pricing plus the ability to deploy your entire application stack, including databases, APIs, and CI/CD pipelines. Northflank also offers enterprise features like Bring Your Own Cloud (BYOC) deployment that Runpod doesn't provide.

Versus Lambda:

Lambda focuses purely on AI compute with good academic backing, but Northflank provides a comprehensive (all-in-one) development platform. While Lambda charges separately for compute, storage, and additional services, Northflank bundles everything together with transparent pricing. Plus, Northflank's BYOC option gives you infrastructure control that Lambda's managed-only approach doesn't offer.

So, the main differentiator? Northflank removes the need to piece together multiple vendors for your infrastructure needs. Instead of managing separate platforms for GPU compute, databases, CI/CD, and monitoring, you get everything in one place with consistent pricing and unified management.

If you're specifically looking for AI-focused infrastructure that scales automatically without you having to manage servers, Runpod might be what you need. Let’s see what makes Runpod’s approach different.

Runpod is an AI-focused cloud platform that provides serverless GPU environments with pre-installed machine learning frameworks. It’s specifically designed for AI workloads with GPUs that can scale from zero to thousands of workers automatically.

One of Runpod’s focuses is solving the "cold start" problem that most serverless platforms face. With Runpod’s FlashBoot technology, you get sub-200ms startup times, which means your AI models can respond almost instantly, even when scaling from zero.

Let’s see what Runpod focuses on:

- Serverless AI capabilities:

  - FlashBoot technology with sub-200ms cold starts

  - Auto-scaling from 0 to 1000+ GPU workers

  - Pre-configured environments with PyTorch, TensorFlow, and other ML frameworks

  - Runpod Hub for one-click model deployment

- GPU options:

  - 30+ GPU models from RTX 4090s to H100s

  - Pay-per-second billing

  - Global regions for low-latency access

  - Instant multi-node GPU clusters

- Developer experience:

  - Serverless and traditional cloud GPU options

  - Built-in Jupyter notebooks and development tools

  - API access for automation

Runpod offers three pricing models:

- Cloud GPUs (traditional instances):

  - H100 SXM (80GB VRAM): $2.69/hour

  - A100 SXM (80GB VRAM): $1.74/hour

  - A100 PCIe (80GB VRAM): $1.64/hour

  - RTX 4090 (24GB VRAM): $0.69/hour

  - Available in Community Cloud (cheaper) or Secure Cloud (enterprise features)

- Serverless pricing:

  - Flex Workers (pay when running): H100 (80GB) at $4.18/hour, A100 (80GB) at $2.72/hour

  - Active Workers (always-on, listed as a 30% discount, although the rates shown here work out to about 20% below Flex): H100 (80GB) at $3.35/hour, A100 (80GB) at $2.17/hour

- Storage: Network volumes at $0.07/GB/month with no data transfer fees

The serverless options cost more per hour, but you only pay when actually processing, which can save money for sporadic workloads.
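The trade-off above comes down to a break-even calculation, sketched below using the H100 rates listed in this section. The function names are hypothetical, and the model is deliberately simplified: it assumes Flex workers bill only for busy time, always-on capacity bills for every hour, and it ignores storage, idle timeouts, and start-up overhead.

```python
HOURS_PER_MONTH = 730.0  # average month length, used for illustration


def break_even_utilization(flex_hourly: float, always_on_hourly: float) -> float:
    """Busy fraction above which always-on capacity becomes cheaper.

    Assumption: flex capacity is billed only while busy, and always-on
    capacity is billed for every hour regardless of load.
    """
    return always_on_hourly / flex_hourly


def monthly_flex_cost(flex_hourly: float, busy_fraction: float) -> float:
    """Monthly cost of a flex worker that is busy `busy_fraction` of the time."""
    return flex_hourly * busy_fraction * HOURS_PER_MONTH


def monthly_always_on_cost(hourly: float) -> float:
    """Monthly cost of capacity that is billed around the clock."""
    return hourly * HOURS_PER_MONTH


if __name__ == "__main__":
    flex_h100 = 4.18   # Flex Worker H100 rate from the list above
    cloud_h100 = 2.69  # Cloud GPU H100 SXM rate from the list above
    print(f"break-even busy fraction: {break_even_utilization(flex_h100, cloud_h100):.2f}")
    print(f"flex at 10% busy: ${monthly_flex_cost(flex_h100, 0.10):.2f}/month")
    print(f"always-on:        ${monthly_always_on_cost(cloud_h100):.2f}/month")
```

With these figures, a workload that keeps an H100 busy for less than roughly two thirds of each hour comes out cheaper on Flex, while a workload that is busy most of the time is better served by an always-on instance.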

Let’s see the major differences:

Versus Lambda:

Runpod focuses on serverless capabilities while Lambda offers traditional cloud instances. Runpod's FlashBoot technology handles cold starts, making it better for real-time applications, while Lambda's strength is in academic backing and inference APIs with more predictable pricing.

Versus Northflank:

Runpod specializes purely in AI workloads with deeper ML-specific optimizations like zero cold starts and auto-scaling. However, you're limited to mainly GPU compute; you'll need separate platforms for databases, APIs, and CI/CD, which Northflank handles as part of its full-stack approach.

The main trade-off:

Runpod gives you the most advanced serverless AI features, but you're getting a specialized tool rather than a complete platform. Great if AI processing is your only need, but you'll need additional services for everything else.

You can also see how Runpod compares to Vast.ai in this article, and if you want to explore more Runpod alternatives, check this piece.

If you're coming from an academic background or need a traditional GPU cloud provider with proven AI expertise, Lambda might feel familiar and reliable. Let’s break down what the decade-plus focus on AI infrastructure offers to see if the academic-trusted approach fits your needs.

Lambda is a traditional GPU cloud platform that's been focused solely on AI since 2012. They position themselves as "the superintelligence cloud" and have built their reputation by serving 97% of top US research universities and over 100,000 ML engineers.

Unlike newer platforms, Lambda started by selling GPU workstations to researchers before moving into cloud services. This means their platform is designed around the workflows that academic researchers and AI labs use, with pre-installed frameworks and configurations that "just work" for machine learning.

Let’s see what Lambda focuses on:

- AI-first infrastructure:

  - Latest NVIDIA GPUs (B200, H200, H100, A100) with hardware focus

  - Pre-installed ML frameworks: PyTorch, TensorFlow, CUDA, cuDNN, JAX

  - On-demand instances, 1-Click Clusters, and private cloud options

  - Inference API for deploying models with no rate limits

- Academic and enterprise features:

  - SOC2 Type II compliance

  - 99.999% uptime SLA

  - Direct support from AI infrastructure engineers

  - Academic partnerships and institutional pricing

- Flexible deployment options:

  - On-demand instances (pay-per-minute)

  - 1-Click Clusters (16-1,536 GPUs with InfiniBand)

  - Private cloud contracts (1,000+ GPUs for enterprises)

Lambda offers straightforward per-minute billing across different service tiers:

- On-demand instances:

  - H100 SXM (80GB): $2.99/hour

  - H100 PCIe (80GB): $2.49/hour

  - A100 SXM (40GB): $1.29/hour

  - GH200 (96GB): $1.49/hour

  - A6000 (48GB): $0.80/hour

- 1-Click Clusters:

  - H100 clusters: From $2.69/hour per GPU

  - B200 clusters: From $3.79/hour per GPU (reserved pricing available)

- Private cloud: As low as $2.99/hour for B200 with multi-year commitments

- Inference API: Token-based pricing for various models, including Llama and DeepSeek variants
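Billing granularity matters mostly for short jobs. The sketch below compares per-minute and per-second billing at the same hourly rate. It assumes that per-minute billing rounds a partial minute up to the next full minute, which this article does not state, and the function names are hypothetical.

```python
def billed_seconds(runtime_s: int, granularity_s: int) -> int:
    """Round the runtime up to the next multiple of the billing granularity.

    Assumption: partial billing units are rounded up. Providers may
    handle partial units differently.
    """
    units = -(-runtime_s // granularity_s)  # integer ceiling division
    return units * granularity_s


def job_cost(hourly: float, runtime_s: int, granularity_s: int) -> float:
    """Cost of one job at `hourly` USD/hour under the given granularity."""
    return hourly * billed_seconds(runtime_s, granularity_s) / 3600


if __name__ == "__main__":
    rate = 2.99   # H100 SXM on-demand rate from the list above
    runtime = 90  # a 90-second job
    print(f"per-minute billing: ${job_cost(rate, runtime, 60):.4f}")
    print(f"per-second billing: ${job_cost(rate, runtime, 1):.4f}")
```

For multi-hour training runs the gap is negligible, so under this assumption granularity only becomes a meaningful cost factor when you launch many short jobs.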

Let’s see what makes Lambda different from the rest.

Versus Runpod:

Lambda offers traditional cloud instances while Runpod focuses on serverless capabilities. Lambda's strength is in proven reliability and academic backing, while Runpod excels at auto-scaling and zero cold starts. Lambda is better for consistent workloads, and Runpod for variable AI processing.

Versus Northflank:

Lambda focuses purely on AI compute with deep ML expertise, while Northflank provides a full-stack development platform. Lambda offers inference APIs and academic partnerships that Northflank doesn't, but you're limited to mainly GPU services; you'll need separate platforms for databases, CI/CD, and other development tools.

The main value:

Lambda gives you a proven, academic-trusted platform with deep AI expertise and reliable infrastructure. You're getting specialized knowledge and battle-tested systems, but you're paying for a single-purpose tool rather than a comprehensive development platform.

If you want to take a look at other Lambda alternatives, you can check this piece.

Having compared all three platforms, here's a quick decision framework:

Choose Lambda if you're working in academic research or need a proven AI platform with traditional cloud reliability and inference APIs.

Go with Runpod if you need AI-specific workflows with serverless capabilities and zero cold starts for variable workloads.

However, for most teams building production applications, Northflank delivers the best overall value by combining affordable GPU pricing with enterprise features like deploying in your own cloud, full-stack platform capabilities, and comprehensive DevOps tools that eliminate the need for multiple vendors.

While others force you to choose between AI specialization, cost, or platform completeness, Northflank gives you all three in one solution.

See the differences for yourself by trying out Northflank's platform or speaking 1:1 with an engineer (yes, a real human, not a machine 😉) to discuss your specific requirements.