Rent an H100 GPU: Pricing, performance, and where to get one

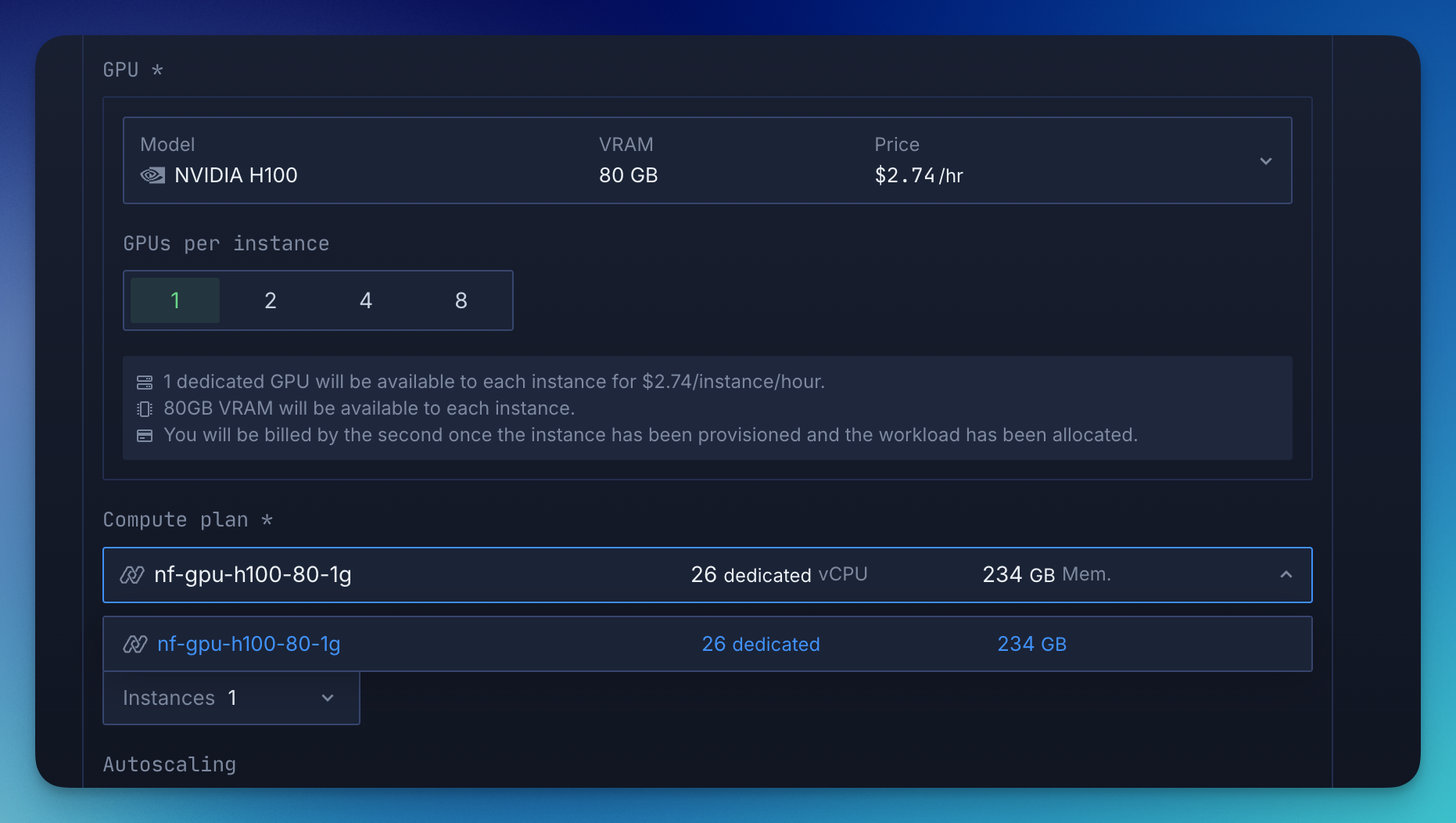

If you're looking for a platform to rent an H100 GPU, Northflank offers the 80GB VRAM model at $2.74/hr, a lower rate than many providers.

Unlike many GPU providers, Northflank offers a full-stack solution that integrates infrastructure, deployments, and observability in one place. It also supports a range of other GPUs, including the H200, A100, B200, and many more.

Need dedicated H100 capacity? H100s are in high demand. If your project requires guaranteed availability or specific volume commitments, request GPU capacity here.

When you need to train or fine-tune deep learning models at scale, the hardware you choose has a direct impact on speed, efficiency, and cost. Many teams now rent H100 GPUs, among the most powerful options in NVIDIA’s lineup. Built on the Hopper architecture, the H100 is tuned for transformer models, FP8 precision, and high-throughput training. It outperforms the well-established A100, making it well suited to both large-scale training and low-latency inference.

The cost of owning an H100 is significant, but renting puts its capabilities within reach. With cloud access, you can bring top-tier compute to your projects exactly when needed, whether adapting a foundation model to a niche dataset or deploying AI services at scale.
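To put "significant" in concrete terms, the sketch below estimates how many GPU-hours of rental it takes before owning a card would pay for itself. The $2.74/hr rate is the Northflank figure quoted in this article; `PURCHASE_PRICE` and `owning_cost_per_hour` are hypothetical placeholders, not quoted prices, so substitute your own numbers before drawing conclusions.

```python
# Rough rent-vs-buy break-even estimate (a sketch, not a financial model).

RENTAL_RATE_PER_HOUR = 2.74  # USD/hr, Northflank H100 80GB rate quoted in this article
PURCHASE_PRICE = 30_000.0    # HYPOTHETICAL upfront cost of one H100; replace with a real quote


def break_even_hours(purchase_price: float, rental_rate: float,
                     owning_cost_per_hour: float = 0.0) -> float:
    """Return the GPU-hours of use after which owning beats renting.

    owning_cost_per_hour is a hypothetical allowance for ongoing ownership
    costs (power, cooling, hosting) that renting avoids. Defaults to zero.
    """
    hourly_saving = rental_rate - owning_cost_per_hour
    if hourly_saving <= 0:
        # If owning costs at least as much per hour as renting, it never pays off.
        return float("inf")
    return purchase_price / hourly_saving


if __name__ == "__main__":
    hours = break_even_hours(PURCHASE_PRICE, RENTAL_RATE_PER_HOUR)
    years_24_7 = hours / (24 * 365)
    print(f"Break-even after ~{hours:,.0f} GPU-hours (~{years_24_7:.1f} years at 24/7 use)")
```

Because the estimate counts only hours of actual use, any idle time between projects pushes the calendar break-even date further out.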

At Northflank, developers rent H100 GPUs to accelerate research, shorten training times, and run production inference without the complexity of managing physical infrastructure. This guide explores why the H100 is different and how to get the most from renting one.

The NVIDIA H100 Tensor Core GPU is the flagship of the Hopper architecture, designed from the ground up for large-scale AI and high-performance computing. It combines fourth-generation Tensor Cores for accelerating matrix operations, FP8 precision for higher throughput with minimal accuracy loss on many workloads, and high-bandwidth memory (HBM3 on the SXM variant, HBM2e on the PCIe variant) for fast data movement.

Think of the PCIe variant as the versatile all-rounder, ready to slot into existing servers with minimal reconfiguration. The SXM variant, in contrast, is the high-performance specialist: it connects through NVLink and NVSwitch, with up to 900 GB/s of NVLink bandwidth per GPU, to speed up inter-GPU communication. Together, they cover a spectrum of needs, from smaller-scale training to the largest distributed AI jobs running across multiple nodes.

This hardware is not just about bigger numbers on a spec sheet; it enables new possibilities. Clusters of H100s make it feasible to train models with hundreds of billions of parameters or to serve real-time inference to massive user bases, workloads that were once practical only for the largest tech companies.
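A quick weights-only calculation shows why models of that size are spread across several GPUs, and why lower-precision formats such as FP8 help. This sketch counts only the bytes needed to store the parameters and ignores activations, gradients, optimizer state, and KV cache, all of which add more; it also treats the 80GB capacity as 80 × 10⁹ bytes, which is an approximation.

```python
import math

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}
H100_MEMORY_GB = 80  # per-GPU memory on the 80GB H100 (approximate, decimal GB)


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Weights-only footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, dtype: str,
                         gpu_memory_gb: float = H100_MEMORY_GB) -> int:
    """Lower bound on the number of GPUs needed just to hold the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    for params in (7e9, 70e9, 175e9):
        for dtype in ("bf16", "fp8"):
            gb = weight_memory_gb(params, dtype)
            gpus = min_gpus_for_weights(params, dtype)
            print(f"{params / 1e9:>5.0f}B params, {dtype}: {gb:6.0f} GB -> at least {gpus} H100(s)")
```

Full training with an optimizer such as Adam typically needs several times the weights-only figure, which is where fast inter-GPU links like NVLink on the SXM variant start to matter.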

The NVIDIA H100 is one of the most capable GPUs ever built, offering exceptional speed, memory bandwidth, and efficiency for large-scale AI workloads. Here's why renting one makes sense:

- Cost-effective access - Avoid significant upfront investment while gaining immediate access to cutting-edge hardware

- No specialized infrastructure needed - Skip the complex setup and cooling requirements of owning physical GPUs

- On-demand flexibility - Scale compute power up or down based on project needs:

  - Training massive transformer models

  - Fine-tuning foundation models to specific domains

  - Running low-latency inference in production

- Pay only for what you use - When your job is finished, the hardware is no longer on your books

- Try advanced features - Experiment with newer capabilities like FP8 precision and faster interconnects without a long-term hardware commitment

Renting H100s lets you match world-class performance with the pace and budget of your projects.

While the H100's performance is exceptional, owning H100s outright is not always practical. The H100 rental price depends on form factor, provider, and market demand, but renting lets you match compute power to project needs.

If your workload is constant and predictable, dedicated access may be best. For workloads that can pause or resume without penalty, spot rentals can reduce costs significantly. The PCIe model is generally more affordable, while the SXM model commands a premium for its higher performance ceiling.
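The spot-versus-dedicated trade-off reduces to simple arithmetic: spot capacity is cheaper per hour, but interruptions can force you to redo work done since your last checkpoint. The sketch below compares the two. The $2.74/hr rate quoted in this article stands in for the dedicated price, while the spot discount and rework fraction are hypothetical inputs, since actual spot pricing and interruption rates vary by provider.

```python
ON_DEMAND_RATE = 2.74  # USD/hr, H100 80GB rate quoted in this article


def on_demand_cost(rate: float, job_hours: float) -> float:
    """Cost of running the job uninterrupted on dedicated capacity."""
    return rate * job_hours


def spot_cost(rate: float, job_hours: float,
              spot_discount: float, rework_fraction: float) -> float:
    """Expected cost on spot capacity.

    spot_discount:   fraction off the on-demand rate (0.4 means 40% cheaper).
    rework_fraction: extra compute lost to interruptions, as a fraction of
                     job_hours (for example, work redone since the last checkpoint).
    """
    spot_rate = rate * (1 - spot_discount)
    return spot_rate * job_hours * (1 + rework_fraction)


def spot_is_cheaper(spot_discount: float, rework_fraction: float) -> bool:
    """Spot wins when the discount outweighs the extra hours spent on rework."""
    return (1 - spot_discount) * (1 + rework_fraction) < 1


if __name__ == "__main__":
    # HYPOTHETICAL inputs: a 100-hour job, 40% spot discount, 10% rework overhead.
    hours, discount, rework = 100, 0.4, 0.1
    print(f"On-demand: ${on_demand_cost(ON_DEMAND_RATE, hours):,.2f}")
    print(f"Spot:      ${spot_cost(ON_DEMAND_RATE, hours, discount, rework):,.2f}")
    print("Spot is cheaper" if spot_is_cheaper(discount, rework) else "On-demand is cheaper")
```

The rework term is why spot suits workloads that can pause and resume without penalty: frequent checkpointing keeps `rework_fraction` small. This sketch does not account for the longer time-to-result that interruptions can cause.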

The ability to rent for hours, days, or weeks means you only pay for what you use, avoiding the sunk cost of idle hardware while still benefiting from the H100’s capabilities.
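Because billing tracks usage, estimating a rental budget comes down to hours × GPUs × hourly rate. This sketch uses the $2.74/hr rate quoted in this article; the example durations and GPU counts are illustrative, and it assumes simple per-hour billing with no minimums, storage, or egress charges, so check your provider's billing terms.

```python
HOURLY_RATE = 2.74  # USD per GPU-hour, Northflank H100 80GB rate quoted in this article

# Illustrative job lengths, in hours.
EXAMPLE_JOBS = {
    "8-hour fine-tune": 8,
    "3-day training run": 3 * 24,
    "2-week project": 14 * 24,
}


def rental_cost(hours: float, num_gpus: int = 1, rate: float = HOURLY_RATE) -> float:
    """Estimated compute cost, assuming straightforward per-hour billing."""
    if hours < 0 or num_gpus < 1:
        raise ValueError("hours must be >= 0 and num_gpus must be >= 1")
    return hours * num_gpus * rate


if __name__ == "__main__":
    for name, hours in EXAMPLE_JOBS.items():
        single = rental_cost(hours)
        eight = rental_cost(hours, num_gpus=8)
        print(f"{name:<20} 1 GPU: ${single:>9,.2f}   8 GPUs: ${eight:>10,.2f}")
```

The estimate scales linearly with GPU count, so an eight-GPU run costs eight times as much per hour but may finish the same work sooner.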

Learn more about H100 pricing details here

Many providers offer H100 access, so the question often becomes “Where can I rent an H100 PCIe GPU for deep learning?” or “Where can I rent an H100 SXM GPU for deep learning?”, ideally in a way that is simple, cost-effective, and scalable. Northflank has emerged as a reliable answer to that question.

Northflank abstracts the complexity of running GPU workloads by giving teams a full-stack platform: GPUs, a secure runtime, deployments, built-in CI/CD, and observability all in one. You don’t have to manage infrastructure, build orchestration logic, or stitch together third-party tools.

Everything from model training to inference APIs can be deployed through a Git-based or templated workflow. Northflank supports bring-your-own-cloud (AWS, Azure, GCP, and more), and it also works fully managed out of the box. Typical workloads include:

- Inference APIs with autoscaling and low-latency startup

- Training or fine-tuning jobs (batch, scheduled, or triggered by CI)

- Multi-service AI apps (LLM + frontend + backend + database)

- Hybrid cloud workloads with GPU access in your own VPC

Northflank offers access to 18+ GPU types, including NVIDIA A100, B200, L40S, L4, AMD MI300X, and Habana Gaudi.

The decision to rent H100 GPUs can be the difference between hitting your training goals on time and struggling with limited compute. The H100 is built for demanding workloads, but the platform you choose determines how well it performs for you. Some providers make the process slow and unpredictable, while others hide costs that only appear once your workloads are running.

Northflank makes it simple to rent H100 GPUs with transparent pricing, reliable performance, and all the infrastructure you need in one place. You get compute, storage, and networking configured and production-ready, so you can focus on training, fine-tuning, or running inference at scale. Sign up to deploy your first H100 today or book a short demo to see how it fits into your workflow.

What is the H100 rent price?

As of August 2025, the rental price for the NVIDIA H100 80GB VRAM model on Northflank is $2.74/hr, one of the lowest rates on the market. You can learn more about how it compares to other providers here.

Where can I rent an H100 PCIe GPU for deep learning?

You can rent an H100 PCIe GPU for deep learning through platforms like Northflank, which make it simple to deploy without a complex setup. PCIe models are a strong choice for a balance of performance, compatibility, and cost.

Where can I rent an H100 SXM GPU for deep learning?

For the highest performance, you can rent an H100 SXM GPU for deep learning on Northflank. SXM models offer faster interconnect speeds with NVLink and greater thermal headroom, making them ideal for the most demanding large-scale training workloads.