Dragonfly is a fast in-memory data store compatible with the Redis and Memcached APIs. In this guide we will deploy Dragonfly with Docker on Northflank in minutes.

- Create a new service.

- Select Deployment as the Service type.

- Select external image and enter `docker.dragonflydb.io/dragonflydb/dragonfly:latest` as the image path.

- Port 6379 TCP is automatically detected and exposed to your project's private network.

- Dragonfly will only be available internally. If you need to connect to it from your machine, you will need the Northflank forward proxy.

- Select a plan (vertical scaling), starting from $2.21 per month, with plan sizes to suit your workload. You can even use Northflank's free developer tier.

- Create the service and wait for a sandboxed container to deploy in seconds.

- Access the container to view logs with live tailing, search and filtering.

- View metrics with real-time compute, network and TCP charts.

- Start forwarding the service through the Northflank CLI.

- Using `redis-cli`, for a service called `dragonfly`, connect to it with the following command:

`redis-cli -h dragonfly`

Once connected you can start running commands. For example:

`set hello bye`

`get hello`
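For readers curious about what `redis-cli` puts on the wire for these two commands, here is a minimal sketch in Python. It encodes a command as a RESP array of bulk strings, the request format used by the Redis protocol. The helper names `encode_command` and `send_command` are made up for this illustration, and the host `dragonfly` and port 6379 are assumed to be reachable through the forwarder.

```python
import socket


def encode_command(*parts: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    chunks = [f"*{len(parts)}\r\n".encode()]
    for part in parts:
        data = part.encode("utf-8")
        # Each argument is sent as: $<byte length>\r\n<bytes>\r\n
        chunks.append(b"$" + str(len(data)).encode() + b"\r\n" + data + b"\r\n")
    return b"".join(chunks)


def send_command(host: str, port: int, *parts: str) -> bytes:
    """Send one command and return the raw reply bytes.

    Hypothetical helper: it only works while the forwarder is running
    and `host` resolves to the Dragonfly service.
    """
    with socket.create_connection((host, port), timeout=5) as conn:
        conn.sendall(encode_command(*parts))
        return conn.recv(4096)


# The two commands from the text, as they would appear on the wire.
set_request = encode_command("set", "hello", "bye")
get_request = encode_command("get", "hello")
print(set_request)
print(get_request)
```

With the forwarder running, `send_command("dragonfly", 6379, "set", "hello", "bye")` followed by `send_command("dragonfly", 6379, "get", "hello")` should behave like the `redis-cli` session above. In practice a client library is a better choice than raw sockets.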

To enable authentication, pass the `requirepass` parameter when starting the server.

- First, let's create a proper password string. Go to the Environment tab in the Service Dashboard and create a new variable using Generate secret values. Name the new environment variable `DRAGONFLY_PASSWORD`. Commit the changes.

- Now go to the CMD Override tab.

- Once there, edit the command through the Edit commands button.

- Enable the Use override option. Then replace the contents of the CMD Override text field with the following command:

`/bin/sh -c "./dragonfly --requirepass $DRAGONFLY_PASSWORD"`

- The `/bin/sh` wrapper is required so that the `DRAGONFLY_PASSWORD` environment variable is expanded and passed as the `requirepass` argument value.

- Commit the changes through the Save & restart button.

- Wait for the container to start up again.

- You may have to restart the forwarder, given that the container was restarted. Once the forwarder is running, set the password in your shell and pass it to `redis-cli`:

`export DRAGONFLY_PASSWORD='<copy value from Northflank UI>'`

`redis-cli -h dragonfly -a "$DRAGONFLY_PASSWORD"`

- Check that you're able to run commands as shown previously.

- You may notice that the keys created previously no longer exist. This is because persistence is not enabled for this container.

- To avoid data loss between restarts or outages, we first need to provision persistent storage for our service.

- Head over to the Volumes tab and add a new volume.

- Select an appropriate amount of storage for your requirements. In this case we're going to use 5GB.

- The Dragonfly container uses the `/data` path to store all of its data. Make sure to set `/data` as the Container mount path.

- Confirm the changes through Create & attach volume.
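Looking back at the CMD override step, the reason for the `/bin/sh -c` wrapper can be shown with a small Python sketch. It assumes the override is executed directly, without a shell, which is what the step implies. `echo` stands in for the Dragonfly binary, and the value `example-secret` is a placeholder rather than a real secret.

```python
import os
import subprocess

# Placeholder value for illustration; on Northflank the secret is injected for you.
env = {**os.environ, "DRAGONFLY_PASSWORD": "example-secret"}


def run(argv):
    """Run argv without an intermediate shell and return its stdout, stripped."""
    result = subprocess.run(argv, env=env, capture_output=True, text=True, check=True)
    return result.stdout.strip()


# Executed directly: no shell is involved, so the argument stays literal.
direct = run(["echo", "$DRAGONFLY_PASSWORD"])

# Wrapped in /bin/sh -c: the shell expands the variable before running echo.
wrapped = run(["/bin/sh", "-c", "echo $DRAGONFLY_PASSWORD"])

print(direct)   # $DRAGONFLY_PASSWORD
print(wrapped)  # example-secret
```

Without the wrapper, the server would receive the literal text `$DRAGONFLY_PASSWORD` as its password instead of the generated secret.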

By default, Dragonfly uses snapshot persistence, which writes the data set to a file after a certain number of commands or a certain amount of time has elapsed.

This is not very durable, as the latest changes written to Dragonfly may not have reached the snapshot file when a failure occurs. For more durable persistence, KeyDB and Redis offer an append-only mode, which appends each change to a file as the data set is modified. We are waiting for Dragonfly to support an append-only mode.
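The durability gap between the two approaches can be illustrated with a toy model in Python. This is not how Dragonfly, Redis or KeyDB implement persistence. The classes `SnapshotStore` and `AppendOnlyStore` and the `snapshot_every` parameter are invented for this sketch, and the "disk" is just an in-memory copy.

```python
class SnapshotStore:
    """Toy store that persists a full copy every `snapshot_every` writes."""

    def __init__(self, snapshot_every: int):
        self.snapshot_every = snapshot_every
        self.memory = {}
        self.disk = {}  # stands in for the snapshot file
        self.writes_since_snapshot = 0

    def set(self, key, value):
        self.memory[key] = value
        self.writes_since_snapshot += 1
        if self.writes_since_snapshot >= self.snapshot_every:
            self.disk = dict(self.memory)
            self.writes_since_snapshot = 0

    def recover(self):
        """State available after a crash: only what the last snapshot held."""
        return dict(self.disk)


class AppendOnlyStore:
    """Toy store that appends every change to a log as it happens."""

    def __init__(self):
        self.memory = {}
        self.log = []  # stands in for the append-only file

    def set(self, key, value):
        self.memory[key] = value
        self.log.append((key, value))

    def recover(self):
        """State available after a crash: replay the whole log."""
        state = {}
        for key, value in self.log:
            state[key] = value
        return state


snap = SnapshotStore(snapshot_every=3)
aof = AppendOnlyStore()
for i in range(5):
    snap.set(f"key{i}", i)
    aof.set(f"key{i}", i)

# Simulate a crash right after the fifth write.
snapshot_state = snap.recover()
aof_state = aof.recover()
print(sorted(snapshot_state))  # ['key0', 'key1', 'key2']
print(sorted(aof_state))       # ['key0', 'key1', 'key2', 'key3', 'key4']
```

In this model the snapshot store loses the two writes made after its last snapshot, while the append-only store recovers all five.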

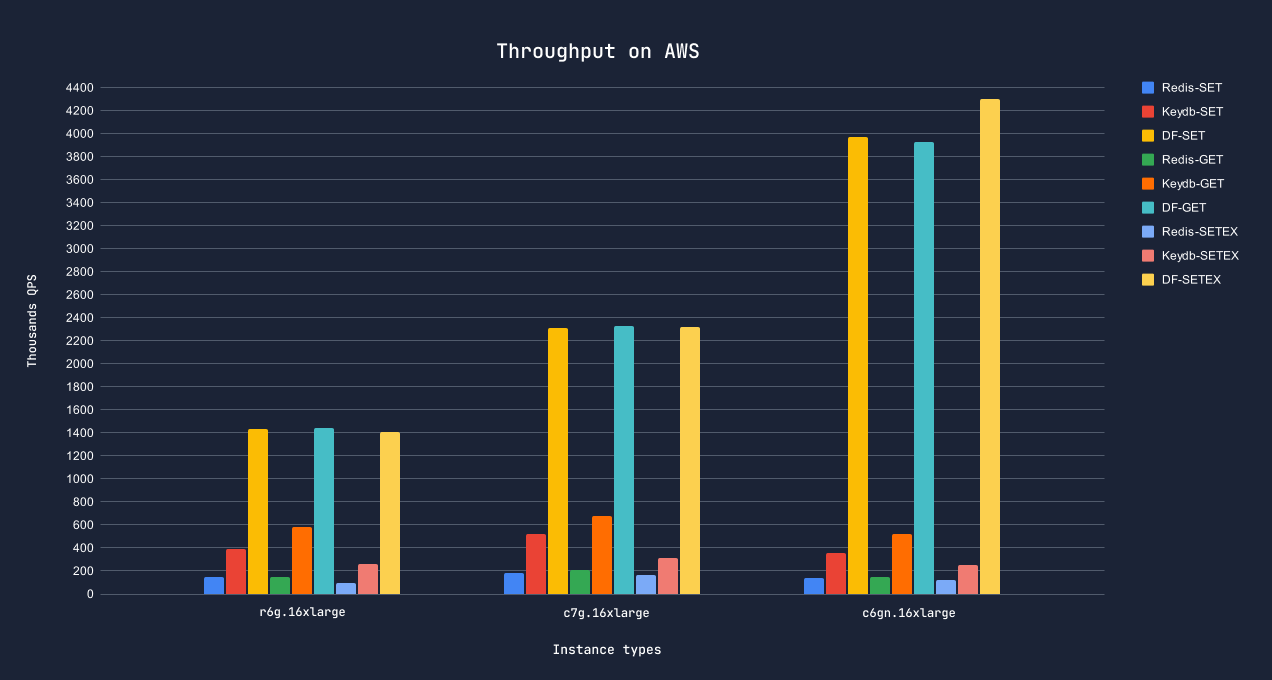

Dragonfly has kindly provided performance benchmarks which we've enriched with compute and cost values from AWS.

| Operation | r6g.16xlarge | c7g.16xlarge | c6gn.16xlarge |
|---|---|---|---|
| set | 0.8ms | 1ms | 1ms |
| get | 0.9ms | 0.9ms | 0.8ms |
| setex | 0.9ms | 1.1ms | 1.3ms |
| Compute | 64 vCPU, 512GB, 25Gbps | 64 vCPU, 128GB, 30Gbps | 64 vCPU, 128GB, 100Gbps |
| Cost on AWS | $3.22 per hour, $2,318.40 per month | $2.32 per hour, $1,670.40 per month | $2.76 per hour, $1,987.20 per month |
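The monthly figures in the table match the hourly prices multiplied by 720 hours, that is, a 30-day month. A short Python check of that arithmetic, using only the prices listed above:

```python
HOURS_PER_MONTH = 30 * 24  # 720 hours, the month length implied by the table

# Hourly on-demand prices in USD, copied from the table above.
hourly_usd = {
    "r6g.16xlarge": 3.22,
    "c7g.16xlarge": 2.32,
    "c6gn.16xlarge": 2.76,
}


def monthly_cost(hourly: float) -> float:
    """Monthly cost in USD for an instance that runs the whole month."""
    return round(hourly * HOURS_PER_MONTH, 2)


monthly_usd = {name: monthly_cost(price) for name, price in hourly_usd.items()}
for name, cost in monthly_usd.items():
    print(f"{name}: ${cost:,.2f} per month")
```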

Northflank allows you to deploy your code and databases within minutes. Sign up for a Northflank account and create a free project to get started.

- Deployment of Docker containers

- Create your own stateful workloads

- Persistent volumes

- Observe & monitor with real-time metrics & logs

- Low latency and high performance

- Multiple read and write replicas

- Backup, restore and fork databases