Self-hosting AI models: Complete guide to privacy, control, and cost savings

Self-hosted AI models give you full control over your data privacy, reduce vendor dependency, and can lower long-term costs compared to API services.

If you want your business to take control of its AI infrastructure, Northflank's platform simplifies the complex process of self-hosting AI with one-click deployments, autoscaling, security, and compliance features.

Self-hosted AI models are becoming the go-to choice for businesses that want complete control over their data, costs, and AI capabilities without having to rely on third-party API services. Still, there are a lot of questions, reservations, and considerations around it.

That's what we'll cover in this article. You'll understand:

✔️ What it means to self-host an AI model and why you should

✔️ The privacy concerns with cloud AI vendors

✔️ The difference between self-hosting AI and API vendors

✔️ Why your business should invest in self-hosting AI models

✔️ How Northflank helps you self-host AI models (privacy, security, and more)

✔️ How to get started with self-hosting AI

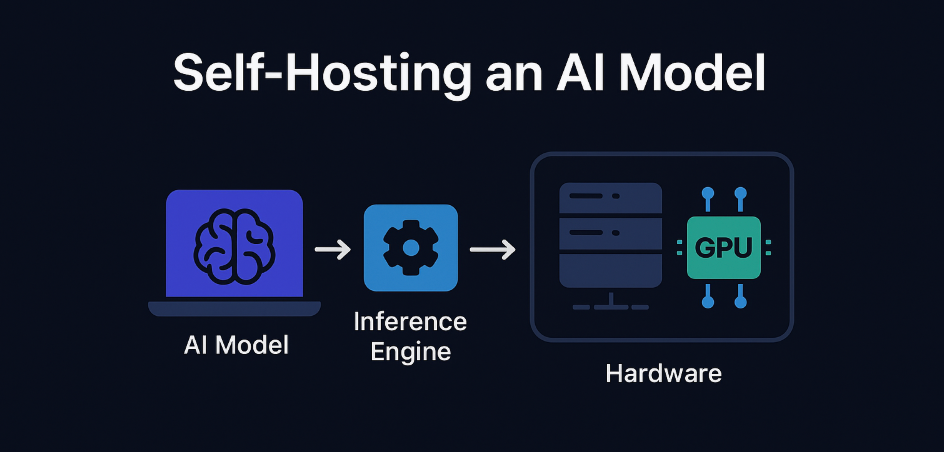

Self-hosting an AI model means you run the model on your own servers instead of paying someone else to run it for you.

It's like cooking at home versus ordering takeout.

When you self-host, you're responsible for downloading the model, setting up everything on your infrastructure, and managing the whole process yourself.

Now, the win here is that you control everything, meaning that:

- Your data stays with you

- You decide how fast it runs

- Nobody else gets to peek at your information

- You avoid the rate limits that come with API services

Self-hosting an AI model puts you in control - from inference to infrastructure

So, this is what you need to make it work:

- The AI model itself (basically the brain)

- Something to run it (the inference engine)

- The hardware to power it all (servers, GPUs, storage)

Most companies right now send their data to services like ChatGPT or Claude through APIs, but self-hosting flips that around. Why? Because now you're running everything in-house, which means you get to call all the shots.
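To make the "in-house" part concrete, here is a minimal sketch of what a request to a self-hosted model can look like. It assumes you are running an inference engine that exposes an OpenAI-compatible HTTP API (vLLM does this) on `localhost:8000`; the URL, port, and model name below are placeholders for illustration, not values from any specific deployment.

```python
import json
import urllib.request

# Assumption: an OpenAI-compatible inference server (for example vLLM) listens here.
BASE_URL = "http://localhost:8000/v1"
# Assumption: placeholder name; use the model your server was started with.
MODEL = "my-self-hosted-model"


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL
) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for a self-hosted endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the self-hosted server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

The request has the same shape as a call to a hosted API. The difference is the address: it points at a machine you control, so the prompt never leaves your network.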

Now that you know what self-hosting means, let's talk about why you'd want to go through the trouble in the first place.

When you send data to third-party AI services, what you're doing is handing over control to someone else's servers, which can create serious compliance issues.

Here are a few examples. Your business might be:

- In healthcare and need to follow HIPAA rules

- Handling EU customer data under GDPR

- A public company dealing with SOX requirements

In each of these cases, self-hosting means keeping everything under your roof, where you can prove where your data lives and who has access to it.

Your business conversations, code, strategies, and customer information are gold mines that you probably don't want sitting around on someone else's servers.

For instance, when you use external AI APIs, there's always the risk that your sensitive data could be used to train their models or accidentally exposed.

Self-hosting means your intellectual property stays locked down in your own environment.

Relying on external AI services means you're at the mercy of their pricing changes, service outages, and policy updates.

Remember when OpenAI changed its API pricing or when services went down for hours?

Self-hosting gives you independence from these external factors and lets you switch between different models without rebuilding your entire system. With AI Agent software you gain a stable, self-managed foundation so your automation and AI workflows stay under your control.

When you use API services, you're often hit with rate limits that can slow down your applications or force you to pay premium prices for higher limits.

For example, Claude's rate limits can significantly impact development workflows.

Self-hosting removes these restrictions entirely, so you can process as many requests as your hardware can handle.

If you have cloud credits with AWS, Google Cloud, or Azure, self-hosting lets you put those to work instead of paying separate API fees.

This is especially valuable for startups that can get free AWS credits and want to maximize their runway.

Okay, by now you understand whether self-hosting AI fits your business needs, but you might be thinking about the technical complexity we mentioned earlier.

That's where Northflank comes in.

We've built a platform that gives you all the benefits of self-hosting without having to manage servers, configure GPUs, or handle scaling infrastructure yourself.

Let's go over how we make this possible.

Northflank is designed with enterprise security from the ground up.

We help teams become SOC 2 compliant through our enterprise features like role-based access controls, audit logging, and network isolation that meet the strictest enterprise requirements.

If you need HIPAA compliance for healthcare data or GDPR compliance for EU customers, our platform handles the security infrastructure so you can focus on your AI applications rather than managing security certificates and compliance paperwork.

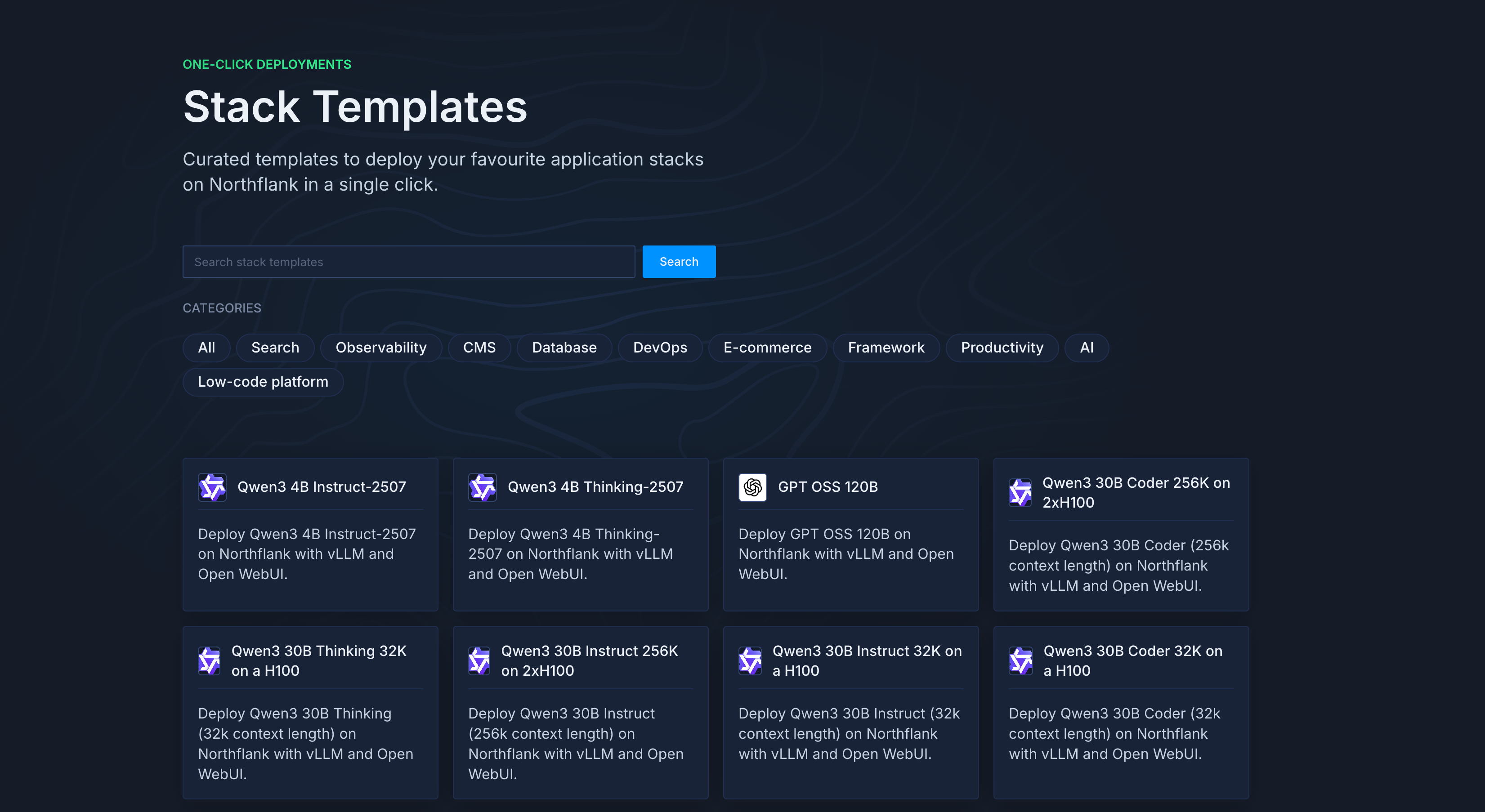

Remember how we said self-hosting usually involves downloading models, setting up inference engines, and configuring infrastructure?

Northflank removes that complexity with pre-built templates.

You can deploy popular models like DeepSeek R1, Qwen3-Coder, or GPT-OSS with literally one click.

Our starter kits include everything configured and ready to go: the model, the serving infrastructure, monitoring, and scaling, so you're running AI in minutes rather than weeks.

This is the solution for privacy-conscious businesses.

You can run Northflank's platform in your own AWS, Google Cloud, or Azure account.


This means your data never leaves your cloud environment, you maintain complete control over data residency, and you get transparent billing directly from your cloud provider.

It's like having your cake and eating it too: you get Northflank's simplicity along with complete control over your data.

You can see this in action with our guides on:

- self-hosting vLLM in your own cloud account

- deploying DeepSeek R1 on AWS, GCP, and Azure

- running OpenAI's GPT-OSS model

- self-hosting Qwen3-Coder

- deploying n8n AI workflow automation

AI applications often need more than the model alone.

You need vector databases for embeddings, caching layers, and data processing pipelines.

Northflank is an all-in-one platform that supports your entire application stack, including both AI and non-AI workloads such as APIs, databases, background jobs, and your frontend applications.

It automatically scales your infrastructure based on demand and integrates everything together.

You can deploy your AI model alongside your vector database and supporting services in one unified platform.

Rather than managing everything yourself, Northflank provides 24/7 support and reliability guarantees.

When something goes wrong (and it always does in complex AI deployments), you have experts to help rather than spending your weekend debugging GPU drivers.

We handle the infrastructure monitoring, automatic failover, and performance optimization so your team can focus on building AI features rather than becoming infrastructure specialists.

We've talked about why protecting your data is important, but let's discuss what happens when you use cloud AI services and why these privacy concerns exist.

Most cloud AI vendors have policies that allow them to use your data to improve their models.

Even if they say they don't train on your specific data, the fine print often includes exceptions or vague language about "service improvement."

For example, OpenAI's data usage policies have changed multiple times, and what's considered private today might not be tomorrow.

When you send data to cloud AI services, it often doesn't stay with that company alone.

Your information might be processed by third-party contractors, stored on shared infrastructure, or accessed by support teams for troubleshooting.

You're trusting the AI company and everyone they work with to handle your sensitive data properly.

Cloud AI vendors operate across multiple jurisdictions, which creates a complex situation for compliance.

Your data might be processed in countries with different privacy laws, making it nearly impossible to ensure you're meeting all regulatory requirements.

If you need to prove data residency for GDPR or show audit trails for SOX compliance, you're dependent on whatever documentation the vendor provides.

Even after you stop using a service, your data might stick around on their servers longer than you'd like.

Many vendors have retention periods that extend well beyond your contract, and deleting data completely can be complicated or impossible.

Self-hosting gives you the power to delete everything immediately when you need to.

Now that you understand the privacy risks with cloud vendors, let's break down the major differences between running your own AI versus using someone else's service.

| Aspect | Self-Hosting AI | API Vendors |
|---|---|---|
| Control | Full customization of model parameters, response times, and system behavior | Limited to whatever options the vendor provides |
| Data flow | Everything stays within your network and infrastructure | Your data travels over the internet to their servers |
| Performance | No network delays, dedicated resources, unlimited requests | Network latency, shared resources, rate limits and token costs |
| Integration | Direct integration with your existing systems and databases | API calls only, limited to their interface |
| Customization | Modify models, fine-tune for your specific use case | Use pre-built models as-is |
| Costs | Upfront hardware investment, predictable ongoing costs | Pay per token/request, costs scale with usage |

So the bottom line is this:

Self-hosting gives you complete control and potentially better performance, but you handle all the technical complexity yourself. API vendors manage the infrastructure for you, but you're limited to their rules, pricing, and capabilities.

We've covered what self-hosting is and how it compares to API vendors, but you might have this question in mind:

“Is it worth the investment for my business?”

Many companies are making the switch for several important reasons. Let’s see some of those reasons.

While self-hosting requires upfront investment, the math often works in your favor long-term.

API services charge per token or request, which can quickly add up when processing large amounts of data.

For instance, a company using the ChatGPT API for customer service might pay $500 to $2,000 a month. Over a year, that adds up to $6,000 to $24,000, which is enough to buy quality hardware that you own outright.

Plus, API prices tend to increase over time, while your hardware costs stay fixed.
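To check whether the math works for your own numbers, a rough break-even calculation helps. The sketch below uses the monthly API figures from the example above; the hardware price ($10,000) and monthly running cost ($200 for power and upkeep) are assumptions chosen purely for illustration, so swap in your own quotes.

```python
import math


def break_even_months(
    hardware_cost: float,
    monthly_api_cost: float,
    monthly_running_cost: float = 0.0,
) -> float:
    """Months until the API fees you avoid have paid for the hardware."""
    monthly_saving = monthly_api_cost - monthly_running_cost
    if monthly_saving <= 0:
        # Running your own hardware costs as much as the API: no payback.
        return math.inf
    return hardware_cost / monthly_saving


# Annual API spend for the $500 and $2,000 per month cases.
annual_low = 500 * 12
annual_high = 2000 * 12

# Assumptions for illustration only: $10,000 of hardware, $200/month to run it.
months_low = break_even_months(10_000, 500, 200)
months_high = break_even_months(10_000, 2000, 200)

print(f"Annual API spend: ${annual_low:,} to ${annual_high:,}")
print(f"Break-even: {months_high:.1f} to {months_low:.1f} months")
```

With these assumed figures, payback takes roughly 5.6 months at $2,000 a month of API spend and about 33 months at $500 a month, which shows how strongly the answer depends on your usage.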

This is where self-hosting becomes most valuable.

You can train models on your specific data, industry terminology, and business processes.

For instance, a law firm can fine-tune a model to understand legal language, or a healthcare company can adapt it for medical terminology.

Also, API vendors only offer generic models that work reasonably well for general use cases, and while some offer limited fine-tuning options, you're still restricted by their capabilities and policies.

When you self-host, your customized model becomes a competitive asset that gets better with your data.

When everyone uses the same ChatGPT or Claude API, everyone gets similar results.

Self-hosting lets you build unique AI capabilities that set you apart from competitors.

You can create specialized workflows, integrate AI deeply into your products, and develop features that would be difficult to achieve with generic APIs.

Your AI becomes part of your competitive advantage rather than a commodity service.

Relying on external APIs means your business depends on someone else's uptime, pricing decisions, and policy changes.

We've seen API services go down for hours, change pricing overnight, or modify their terms of service.

Self-hosting removes these external dependencies and gives you control over your AI infrastructure, so your business operations aren't at the mercy of another company's decisions.

Now that you understand the benefits and have seen how Northflank simplifies the process, the next step is figuring out how to get started.

The best approach is to begin with a test project that demonstrates value without risking your entire operation.

Choose a specific use case, such as customer support automation or code assistance, and set clear success metrics, like response time improvements or cost savings.

Then, plan for a 30-60 day implementation timeline to prove the concept before scaling up.
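A success metric is easier to act on when it is written down as a check you can rerun. As a minimal sketch, the snippet below compares 95th-percentile response times before and during a pilot. The function names, the 20% improvement target, and the choice of p95 are all assumptions for illustration; pick the metric and threshold that match your use case.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize_pilot(
    baseline_ms: list[float],
    pilot_ms: list[float],
    target_improvement: float = 0.20,  # assumed target: 20% faster at p95
) -> dict:
    """Compare p95 latency of the pilot against the baseline."""
    base_p95 = percentile(baseline_ms, 95)
    pilot_p95 = percentile(pilot_ms, 95)
    improvement = (base_p95 - pilot_p95) / base_p95
    return {
        "baseline_p95_ms": base_p95,
        "pilot_p95_ms": pilot_p95,
        "improvement": improvement,
        "meets_target": improvement >= target_improvement,
    }
```

Collect latency samples from your current setup and from the pilot over the same kind of traffic, then call `summarize_pilot(baseline, pilot)` at the end of the trial to see whether the target was met.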

Sign up on Northflank to test out these capabilities, or book a demo to speak with one of our engineers about your specific use case.

These step-by-step guides will get you running AI models in your own infrastructure:

- How to self-host Qwen3-Coder on Northflank with vLLM - Ideal for development teams wanting an AI coding assistant

- Self-host DeepSeek R1 on AWS, GCP, Azure & K8s - Enterprise-grade deployment in your own cloud

- Run OpenAI's GPT-OSS model on Northflank - Open source alternative to ChatGPT

- Self-host vLLM in your own cloud account with Northflank BYOC - Complete control with Bring Your Own Cloud

- How to self-host n8n AI workflow automation on Northflank - Automate business processes with AI

- An engineer's guide to open source AI models - Technical overview for your development team