5 types of AI workloads and how to deploy them

AI workloads are the computational tasks that artificial intelligence systems perform to process data, learn patterns, and generate outputs.

For example, when you use ChatGPT to write an email, there's an AI workload running in the background that:

- processes your prompt

- understands the context

- and generates a response by performing billions of calculations.

That's just one common example.

You know how, when you deploy a regular web application, you're mainly concerned with handling HTTP requests and database queries, right?

With AI workloads, however, you're dealing with models that might need to process millions of images or generate text responses in real time, which calls for entirely different infrastructure.

AI workloads are different because they're computationally intensive: they need specialized hardware, such as GPUs, and scaling that adjusts dynamically to demand.

These workloads range from training machine learning models on massive datasets to serving real-time predictions to your users.

We'll go over:

- Types of AI workloads

- Why GPUs are necessary for AI workloads

- How Northflank handles each workload with built-in orchestration

- Best practices for deploying AI workloads

Understanding the different types of AI workloads helps you choose the right infrastructure and deployment strategy.

Let me walk you through the five main categories you'll encounter when building AI applications:

Training workloads are where your AI models learn by processing massive datasets to identify patterns and adjust parameters.

Let's say you're building a chatbot for customer support.

During training, your model processes thousands of conversations, making predictions, comparing them to correct answers, and adjusting to improve over time.

This is the most resource-intensive AI workload, as it often requires multiple GPUs to run for days or weeks.
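The predict, compare, adjust cycle described above is the core of every training loop. Here's a minimal sketch in plain Python, assuming a toy logistic-regression model and made-up two-feature examples; the feature meanings and the `train`/`predict` names are illustrative, not from any framework. Real chatbot training uses neural networks and a library such as PyTorch, but the loop has the same shape.

```python
import math
from typing import List, Tuple

# Hypothetical features for a support message: [mentions_refund, says_thanks].
# Label 1 means "escalate to a human agent", 0 means "the bot can handle it".
Example = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: List[float], bias: float, features: List[float]) -> float:
    """Return the model's probability that the label is 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(examples: List[Example], epochs: int = 200,
          lr: float = 0.5) -> Tuple[List[float], float]:
    weights = [0.0] * len(examples[0][0])  # start knowing nothing
    bias = 0.0
    for _ in range(epochs):  # one epoch = one full pass over the dataset
        for features, label in examples:
            prediction = predict(weights, bias, features)  # 1. make a prediction
            error = prediction - label                     # 2. compare with the correct answer
            # 3. adjust parameters along the negative gradient of the log loss
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


examples = [([1.0, 0.0], 1), ([1.0, 1.0], 1), ([0.0, 1.0], 0), ([0.0, 0.0], 0)]
weights, bias = train(examples)
print(predict(weights, bias, [1.0, 0.0]))  # high probability: escalate
print(predict(weights, bias, [0.0, 1.0]))  # low probability: the bot handles it
```

Scale this same loop up to billions of parameters and millions of examples, and you get the multi-GPU, multi-day jobs described above.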

The challenge here is that training is experimental - you may need to try different approaches until you achieve the desired results.

You also need infrastructure that can spin up resources quickly and scale across multiple GPUs without complex manual configuration.

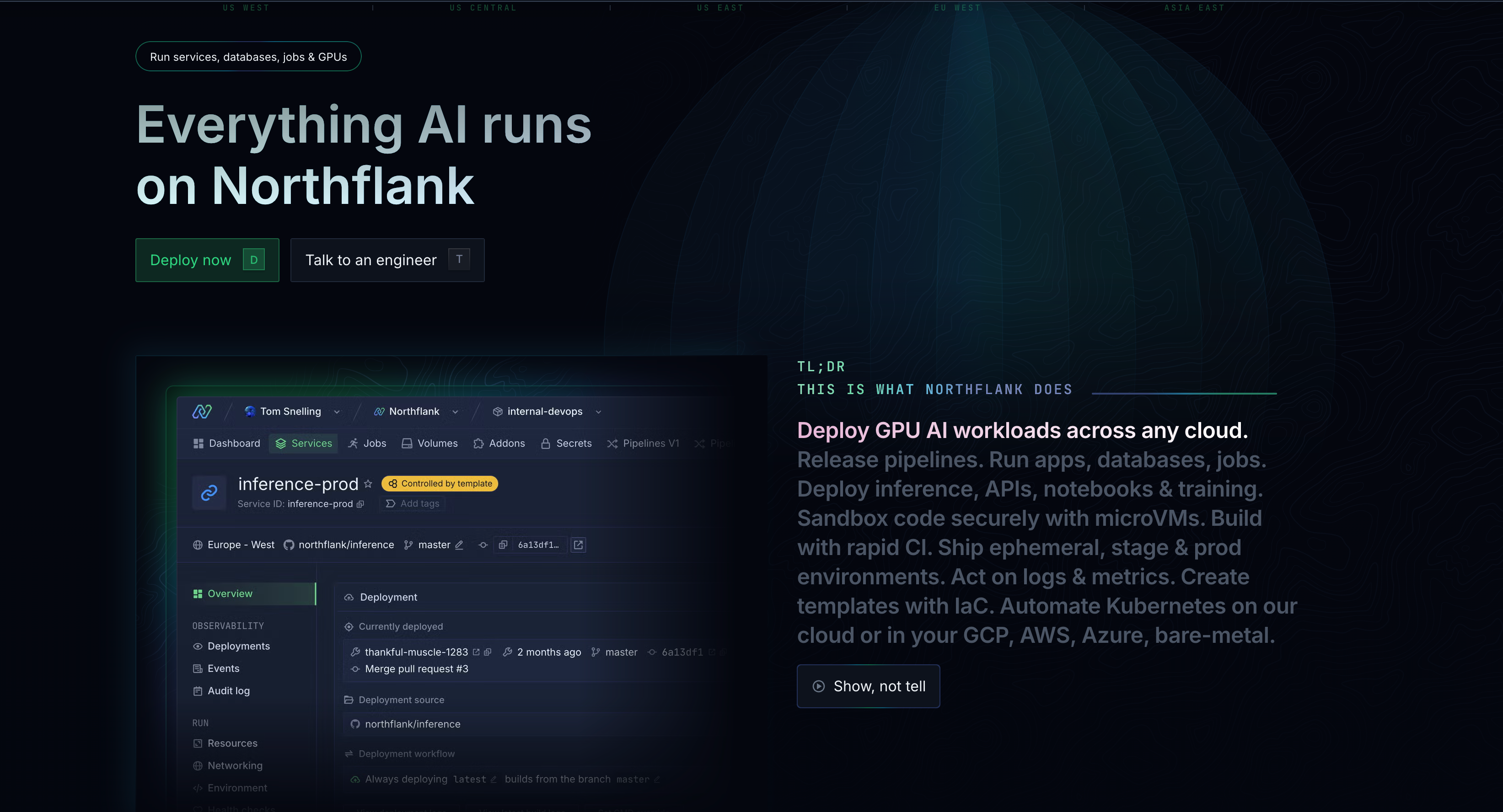

This is where platforms like Northflank come in - you can spin up GPU instances across multiple clouds without worrying about Kubernetes configuration or spot instance management.

The platform handles the orchestration automatically, so you can focus on experimenting with your models rather than managing infrastructure.

Fine-tuning adapts pre-trained models (like GPT or BERT) to your specific use case.

Let's say you want to build a legal document analyzer.

Rather than training from scratch, you'd take a pre-trained model such as GPT and fine-tune it on thousands of contracts and legal briefs.

This teaches the model legal terminology and conventions, such as 'whereas' clauses, that a general model wouldn't handle well. The same approach works for other specialized domains: fine-tuning on financial text, for example, can adapt a general model to finance.

You're teaching an existing model your domain language.

It's faster than full training, but you still need to balance computational power with cost to avoid overspending on idle GPUs.

You can run fine-tuning jobs on platforms like Northflank, using frameworks like Hugging Face Transformers or your own custom training scripts.

The platform handles GPU provisioning and can automatically terminate resources when your fine-tuning job completes, preventing unnecessary costs from idle instances.
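The key difference from training from scratch is where the parameters start. As a rough sketch (a toy logistic-regression model stands in for a real language model, and the "pre-trained" weights, the 'whereas' feature, and the `fine_tune` helper are all hypothetical), fine-tuning copies existing weights and runs a short, low-learning-rate pass over domain data instead of starting from zeros. With Hugging Face Transformers you'd typically load a checkpoint with `from_pretrained` and train with `Trainer`, but the principle is the same.

```python
import math
from typing import List, Tuple

Example = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: List[float], bias: float, features: List[float]) -> float:
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def log_loss(weights: List[float], bias: float, examples: List[Example]) -> float:
    """Average cross-entropy loss on the examples; lower is better."""
    total = 0.0
    for features, label in examples:
        p = predict(weights, bias, features)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(examples)


def fine_tune(pretrained_weights: List[float], pretrained_bias: float,
              domain_examples: List[Example], epochs: int = 20,
              lr: float = 0.1) -> Tuple[List[float], float]:
    # Start from a copy of the pre-trained parameters instead of zeros,
    # leaving the original model untouched.
    weights = list(pretrained_weights)
    bias = pretrained_bias
    # Few epochs and a small learning rate: nudge the model toward the
    # domain rather than relearning everything.
    for _ in range(epochs):
        for features, label in domain_examples:
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


# Hypothetical general-purpose model that never learned to use feature 2
# (say, "document contains a 'whereas' clause"), so that weight is 0.
general_weights, general_bias = [2.0, -1.0, 0.0], 0.0
legal_examples = [([0.0, 0.0, 1.0], 1), ([0.0, 0.0, 0.0], 0),
                  ([1.0, 0.0, 1.0], 1), ([0.0, 1.0, 0.0], 0)]

tuned_weights, tuned_bias = fine_tune(general_weights, general_bias, legal_examples)
print(log_loss(general_weights, general_bias, legal_examples))  # before fine-tuning
print(log_loss(tuned_weights, tuned_bias, legal_examples))      # after: lower
```

Because only a short pass is needed, the job finishes quickly, which is exactly when automatic teardown of the GPU matters for cost.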

Inference is where your trained models serve users and make predictions or generate responses in milliseconds.

For instance, when you upload a photo to a social media app, it may automatically suggest tags for the people in the picture.

That's inference in action - your photo gets processed by a computer vision model that identifies faces and matches them to your contacts, all in real time while you wait.

This is completely different from long-running training jobs, because inference handles individual requests that need immediate responses.

Your users won't wait 30 seconds for a photo to be tagged or a chatbot to respond.

The primary requirement here is low latency and automatic scaling.

Your infrastructure must serve models quickly, handle traffic spikes when lots of users are active, and scale down during quiet periods to control costs.

For inference workloads, you need infrastructure that can scale automatically from zero to handle traffic spikes.
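Scale-to-zero autoscaling boils down to a small calculation: divide the current load by what one replica can handle, round up, and clamp to a minimum and maximum. Here's a minimal sketch, assuming load is measured as in-flight requests and each replica serves a fixed number at once; `desired_replicas` and its parameters are illustrative, not Northflank's actual algorithm. Kubernetes' Horizontal Pod Autoscaler uses a similar ratio-and-round-up rule.

```python
import math


def desired_replicas(in_flight_requests: int, requests_per_replica: int,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Return how many model-serving replicas to run for the current load."""
    if in_flight_requests <= 0:
        # No traffic: fall back to the floor, which is zero when scale-to-zero is allowed.
        return min_replicas
    needed = math.ceil(in_flight_requests / requests_per_replica)
    # Clamp so a spike can't exceed the budget and a quiet period
    # never drops below the configured floor.
    return max(min_replicas, min(max_replicas, needed))


print(desired_replicas(0, 4))    # 0: quiet period
print(desired_replicas(9, 4))    # 3: moderate traffic
print(desired_replicas(500, 4))  # 10: spike, capped at max_replicas
```

Setting `min_replicas=1` trades a little idle cost for avoiding cold starts, since loading model weights onto a GPU can take a while.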

Platforms like Northflank offer flexible GPU pricing with per-second billing, so you're not stuck paying for idle compute time when your models aren't actively processing requests.
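The difference billing granularity makes is easy to quantify. This sketch assumes a hypothetical GPU rate of $3.60/hour (not a real price quote) and rounds usage up to whole billing increments, which is how coarse-grained billing typically works.

```python
import math


def billed_cost(runtime_seconds: float, hourly_rate: float, increment_seconds: int) -> float:
    """Cost of a run when usage is rounded up to whole billing increments."""
    billed_seconds = math.ceil(runtime_seconds / increment_seconds) * increment_seconds
    return billed_seconds * hourly_rate / 3600


HOURLY_RATE = 3.60  # hypothetical GPU price in dollars per hour

# A 10-minute burst of inference traffic:
print(billed_cost(600, HOURLY_RATE, increment_seconds=1))     # 0.6 with per-second billing
print(billed_cost(600, HOURLY_RATE, increment_seconds=3600))  # 3.6 with per-hour billing
```

That's a 6x difference for the same work, and it compounds when autoscaling starts and stops replicas many times a day.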

Most AI applications involve complex workflows where data flows through multiple processing steps like cleaning, feature extraction, model inference, and post-processing.

Let's say you're building an app that analyzes product reviews to determine customer sentiment.

Your pipeline might work like this:

- first, you extract the review text from your database

- then clean it by removing special characters and fixing typos

- next, you break it into sentences and run each sentence through a sentiment analysis model

- and finally combine all the scores to get an overall rating for the product.
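Here's a minimal single-process sketch of those stages as plain functions, assuming reviews arrive as dictionaries with a `text` field. The word-list scorer is a hypothetical stand-in for a real sentiment model, typo fixing is omitted, and in production each stage would typically run as its own service or job.

```python
import re
from statistics import mean
from typing import Dict, List

# Hypothetical toy lexicon standing in for a real sentiment analysis model.
LEXICON = {"great": 1.0, "love": 1.0, "good": 0.5, "bad": -0.5,
           "terrible": -1.0, "broken": -1.0}


def extract(rows: List[Dict[str, str]]) -> List[str]:
    """Stage 1: pull the review text out of database rows."""
    return [row["text"] for row in rows]


def clean(text: str) -> str:
    """Stage 2: drop special characters, then normalize whitespace and case."""
    text = re.sub(r"[^A-Za-z0-9.!?' ]+", " ", text)
    return re.sub(r"\s+", " ", text).strip().lower()


def split_sentences(text: str) -> List[str]:
    """Stage 3a: break the cleaned text into sentences."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def score_sentence(sentence: str) -> float:
    """Stage 3b: score one sentence from -1 (negative) to 1 (positive)."""
    hits = [LEXICON[w] for w in re.findall(r"[a-z']+", sentence) if w in LEXICON]
    return mean(hits) if hits else 0.0


def product_rating(rows: List[Dict[str, str]]) -> float:
    """Stage 4: combine every sentence score into one product-level rating."""
    scores = [score_sentence(s)
              for text in extract(rows)
              for s in split_sentences(clean(text))]
    return mean(scores) if scores else 0.0


reviews = [{"text": "I love it!!! Great battery."},
           {"text": "Screen arrived broken :("}]
print(product_rating(reviews))  # mildly positive overall
```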

Pipeline workloads orchestrate these steps to make sure data flows properly from stage to stage.

You need infrastructure that can coordinate all these steps, handle failures gracefully, and ensure each stage has the necessary data when it needs it.
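One common way to handle failures gracefully is to retry a failed stage with exponential backoff before giving up. A minimal sketch, assuming a stage is any function that takes a payload and raises on failure; `run_with_retries` and the flaky stage below are hypothetical, and the `sleep` parameter is injectable so the behavior can be checked without real waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_with_retries(stage: Callable[[T], R], payload: T, max_attempts: int = 3,
                     base_delay: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> R:
    """Run one pipeline stage, retrying with exponential backoff if it raises."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return stage(payload)
        except Exception:
            if attempt == max_attempts:
                raise  # out of retries: let the orchestrator mark the run as failed
            # Back off 0.5s, 1s, 2s, ... to give a struggling dependency time to recover.
            sleep(base_delay * 2 ** (attempt - 1))


attempts = {"count": 0}


def flaky_clean(text: str) -> str:
    """Hypothetical stage that hits a transient outage on its first two calls."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary outage")
    return text.strip().lower()


delays = []
print(run_with_retries(flaky_clean, "  Great Product ", sleep=delays.append))  # great product
print(delays)  # [0.5, 1.0]
```

In practice you'd usually retry only errors known to be transient (timeouts, connection resets) rather than every `Exception`.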

Managing complex pipelines becomes much simpler when you can deploy each step as a separate container service.

With platforms like Northflank, you can orchestrate these workflows without manually configuring communication between services - the platform handles job scheduling, event triggers, and automatic scaling based on queue length.

Before your models can work, you need clean, properly formatted data.

Data processing workloads handle:

- extracting data from sources

- transforming it into the right format

- and loading it where your models can access it.

Let’s say you're training a model to predict which customers might cancel their subscription.

You'd need to gather data from your billing system, support tickets, app usage logs, and customer surveys.

Then you'd clean this messy data by removing duplicates, filling in missing values, standardizing date formats, and converting everything into a format your model can understand.
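Those cleaning steps (removing duplicates, filling in missing values, standardizing dates) can be sketched in plain Python. This assumes hypothetical record fields (`customer_id`, `signup_date`, `support_tickets`) and a fixed set of input date formats; a real pipeline would more likely use a library such as pandas.

```python
from datetime import datetime
from typing import Dict, List, Optional

# Date formats we assume the source systems use ("/" dates are US month/day order).
# "%b" month names assume the default C/English locale.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def normalize_date(value: str) -> Optional[str]:
    """Convert any known date format to ISO 8601 (YYYY-MM-DD), or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(records: List[Dict], default_tickets: int = 0) -> List[Dict]:
    seen = set()
    cleaned = []
    for record in records:
        customer_id = record["customer_id"]
        if customer_id in seen:  # remove duplicates, keeping the first occurrence
            continue
        seen.add(customer_id)
        tickets = record.get("support_tickets")
        cleaned.append({
            "customer_id": customer_id,
            "signup_date": normalize_date(record.get("signup_date") or ""),
            # fill in missing values with a default
            "support_tickets": default_tickets if tickets is None else tickets,
        })
    return cleaned


raw_records = [
    {"customer_id": "c1", "signup_date": "2024-03-15", "support_tickets": 2},
    {"customer_id": "c1", "signup_date": "2024-03-15", "support_tickets": 2},  # duplicate
    {"customer_id": "c2", "signup_date": "03/15/2024", "support_tickets": None},
    {"customer_id": "c3", "signup_date": "15 Mar 2024"},  # tickets missing entirely
]
for row in clean_records(raw_records):
    print(row)
```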

These workloads often need elastic scaling because you might have massive processing jobs followed by periods of low activity.

For instance, you might process a month's worth of customer data every Sunday night, but then have minimal processing needs during the week.
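Recurring jobs like this are usually defined with a cron expression; `0 23 * * 0` means 23:00 every Sunday. The sketch below computes the next run time for such a weekly schedule in plain Python, assuming naive local timestamps (no time-zone or daylight-saving handling) and a hypothetical Sunday 23:00 slot.

```python
from datetime import datetime, timedelta


def next_weekly_run(now: datetime, weekday: int = 6, hour: int = 23,
                    minute: int = 0) -> datetime:
    """Next run of a weekly job. Uses Python's weekday numbering (Monday=0, Sunday=6),
    unlike cron, where Sunday is 0."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    if candidate <= now:  # this week's slot has already passed
        candidate += timedelta(days=7)
    return candidate


print(next_weekly_run(datetime(2024, 3, 13, 9, 0)))    # Wednesday -> 2024-03-17 23:00:00
print(next_weekly_run(datetime(2024, 3, 17, 23, 30)))  # just missed it -> 2024-03-24 23:00:00
```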

Your infrastructure must handle these spikes quickly without keeping expensive resources running idle the rest of the time.

This elastic scaling challenge is precisely what modern AI infrastructure platforms are designed to address.

Platforms like Northflank make this possible: you can process large periodic data dumps with a cluster of containers that automatically spin up, complete the job, and tear down when finished, without keeping expensive resources running during quiet periods.

If you've looked into AI infrastructure, you've likely heard that GPUs are important.

Your AI workloads need GPUs because GPUs are designed for the massively parallel computations that AI requires.

Let's say you need to check the spelling of 10,000 words.

A CPU checks each word one by one, while a GPU is like having 1,000 people each check 10 words simultaneously, which is much faster.

AI algorithms work the same way.

They perform the same mathematical operation on thousands of data points at once.
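The spell-check analogy maps directly onto how data-parallel work is split. This sketch divides 10,000 words among 1,000 "workers" so each checks 10, using a small thread pool purely to show the partitioning; Python threads won't deliver a GPU-style speedup (the interpreter lock serializes this kind of CPU work), and the tiny `DICTIONARY` and generated words are made up for illustration.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List

DICTIONARY = {"model", "train", "infer", "data", "scale"}  # hypothetical word list


def check_chunk(words: List[str]) -> List[bool]:
    """The same operation, applied independently to every word in a chunk."""
    return [word in DICTIONARY for word in words]


def split_into_chunks(items: List[str], n_workers: int) -> List[List[str]]:
    size = math.ceil(len(items) / n_workers)
    return [items[i:i + size] for i in range(0, len(items), size)]


def parallel_check(words: List[str], n_workers: int) -> List[bool]:
    chunks = split_into_chunks(words, n_workers)
    # No chunk depends on another, so all of them could run at the same time.
    # A GPU does this with thousands of hardware threads; a small pool stands in here.
    with ThreadPoolExecutor(max_workers=8) as pool:
        results = pool.map(check_chunk, chunks)  # map preserves chunk order
    return [ok for chunk_result in results for ok in chunk_result]


words = ["model", "trian", "data", "sclae"] * 2500  # 10,000 words, half misspelled
chunks = split_into_chunks(words, n_workers=1000)
print(len(chunks), len(chunks[0]))               # 1000 10
print(parallel_check(words, 1000).count(False))  # 5000 misspellings
```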

So, without GPUs, training could take weeks instead of hours, and inference could be too slow for real-time applications.

This is why GPUs have become central to AI workloads: they're built specifically for the parallel processing that makes AI practical.

Now that you understand the different types of AI workloads, let's talk about how you can deploy and manage them.

This is where having the right platform with built-in orchestration makes all the difference.

Northflank reduces the infrastructure complexity for training workloads.

You can spin up jobs with NVIDIA H100s and B200s across multiple clouds.

The platform handles Kubernetes orchestration, spot instance management, and scaling automatically.

You can start a training job and only pay for actual compute usage. The platform manages scaling and can use spot instances to reduce costs without manual intervention.
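Spot instances can be reclaimed with little warning, so long training jobs that run on them typically save checkpoints and resume from the latest one after a restart. A minimal sketch of that pattern, assuming a JSON file for state and a placeholder training step; the file layout, `train_with_checkpoints`, and the `stop_after` preemption simulation are hypothetical, and real frameworks save model and optimizer state in their own formats (e.g. `torch.save`).

```python
import json
import os
import tempfile
from typing import List, Optional, Tuple


def save_checkpoint(path: str, step: int, weights: List[float]) -> None:
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "weights": weights}, f)
    # The rename is atomic on POSIX, so an interruption mid-write
    # never leaves a half-written checkpoint behind.
    os.replace(tmp_path, path)


def load_checkpoint(path: str, n_params: int) -> Tuple[int, List[float]]:
    if not os.path.exists(path):
        return 0, [0.0] * n_params  # no checkpoint yet: fresh start
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["weights"]


def train_with_checkpoints(path: str, total_steps: int, checkpoint_every: int = 10,
                           stop_after: Optional[int] = None) -> Tuple[int, List[float]]:
    step, weights = load_checkpoint(path, n_params=2)
    while step < total_steps:
        weights = [w + 0.01 for w in weights]  # placeholder for one real training step
        step += 1
        if step % checkpoint_every == 0 or step == total_steps:
            save_checkpoint(path, step, weights)
        if stop_after is not None and step >= stop_after:
            break  # simulate the spot instance being reclaimed
    return step, weights


with tempfile.TemporaryDirectory() as tmp_dir:
    ckpt = os.path.join(tmp_dir, "checkpoint.json")
    print(train_with_checkpoints(ckpt, total_steps=50, stop_after=25)[0])  # 25: preempted
    print(load_checkpoint(ckpt, n_params=2)[0])                            # 20: last checkpoint
    print(train_with_checkpoints(ckpt, total_steps=50)[0])                 # 50: resumed and finished
```

Only the work since the last checkpoint (steps 21 to 25 here) is lost, which is what makes cheaper, interruptible capacity practical for long jobs.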

Your inference APIs automatically scale from zero to handle traffic spikes, then scale back down.

Northflank supports per-second billing, which means you only pay for the compute time you actually use, not for idle resources.

The platform handles load balancing, health checks, and SSL certificates out of the box, so you don't need to configure ingress controllers or manage networking yourself.

You can deploy each pipeline step as a separate service with automatic communication handling.

If you need image preprocessing before model inference, you can deploy a preprocessing service that scales based on queue length.

Northflank's job scheduling handles batch processing, periodic model updates, and event-triggered workflows, meaning that all the orchestration complexity is managed for you.

Northflank supports both persistent and ephemeral storage.

For instance, if you process terabytes of data daily, you can spin up processing containers, run jobs, and automatically tear down resources when the jobs are complete.

Northflank also provides multi-cloud support, which enables running processing jobs near your data, thereby reducing transfer costs and latency.

Selecting the right infrastructure for your AI workloads isn't only about finding the cheapest GPUs. There are several key factors you need to keep in mind to make sure your AI projects succeed:

Speed of deployment:

- AI development is iterative

- You need infrastructure that can rapidly spin up experiments without excessive configuration complexity

Cost management:

- Look for spot instance support, automatic scaling, and granular billing

- Pay for compute when it's actively used, not during idle periods

Multi-cloud flexibility:

- Different workloads have different optimal clouds

- Training might be cheaper on one platform, inference better on another

Observability:

- When 12-hour training jobs fail, you need detailed logs and metrics to debug quickly

Security:

- For sensitive data or regulated industries, ensure support for secure multi-tenancy, VPC deployment, and compliance

I get these questions a lot from teams starting their AI journey. Let’s see the most common ones with practical answers:

- What are the requirements for AI workloads? AI workloads need high-performance compute (usually GPUs), fast storage for large datasets, high-bandwidth networking, and orchestration tools for complex workflows. Training needs more compute power; inference needs low latency.

- Which workstation is best for AI workloads? For development, workstations with NVIDIA RTX 4090 or A6000 GPUs work well. For production, cloud-based H100s or B200s offer better scalability and cost efficiency than local workstations.

- How can AI reduce my workload? AI automates repetitive tasks, provides intelligent data processing, and handles routine decisions. Examples include automatically classifying support tickets, generating documentation, or optimizing resource allocation.

- Which edge computing service is best for AI workloads? Choose services supporting containerized AI models with GPU acceleration and automatic model syncing from central infrastructure, based on your latency and deployment requirements.

By now, you should be ready to deploy your first AI workload. I'll show you the quickest way to get up and running.

The fastest path is to connect your Git repository to Northflank and deploy a simple inference API.

The platform automatically detects your AI framework (PyTorch, TensorFlow, Hugging Face Transformers) and configures the runtime environment.
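For reference, a simple inference API can be as small as this standard-library-only sketch, assuming JSON requests with a `text` field. The keyword scorer is a hypothetical placeholder for a real model, and the `/predict` and `/healthz` paths are illustrative choices, not paths any platform requires. In practice you'd more likely use a web framework and load real model weights once at startup.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hypothetical placeholder "model"; a real service would load trained weights here.
POSITIVE_WORDS = {"great", "love", "good", "excellent"}


def predict(text: str) -> dict:
    words = text.lower().split()
    score = sum(word in POSITIVE_WORDS for word in words) / max(len(words), 1)
    return {"label": "positive" if score > 0 else "neutral", "score": round(score, 3)}


class InferenceHandler(BaseHTTPRequestHandler):
    def _send_json(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self) -> None:
        # Lightweight endpoint that a platform's health checks can poll.
        if self.path == "/healthz":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send_json(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            text = json.loads(self.rfile.read(length))["text"]
            if not isinstance(text, str):
                raise TypeError("text must be a string")
        except (ValueError, KeyError, TypeError):
            self._send_json(400, {"error": "expected a JSON body with a 'text' string"})
            return
        self._send_json(200, predict(text))

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging for this sketch


def make_server(port: int = 8080, host: str = "0.0.0.0") -> ThreadingHTTPServer:
    return ThreadingHTTPServer((host, port), InferenceHandler)
```

To try it locally, call `make_server(8080).serve_forever()` and POST `{"text": "I love this"}` to `http://localhost:8080/predict`. Binding to `0.0.0.0` matters inside a container, since a server listening only on `127.0.0.1` isn't reachable from outside it.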

For complex workloads, use Northflank's templates to define reusable infrastructure patterns.

Create a training pipeline template once, and your team can spin up experiments with a single click.

Start simple and iterate. Deploy a basic model, get it working in production, then add complexity as you learn your specific requirements. With proper orchestration and scaling in place, you can focus on solving real problems rather than battling infrastructure complexity.

Start now or book a demo with an engineer.